In[27]:

filename_train_images = r'D:\vision\mnist\train-images.idx3-ubyte'
filename_train_labels = r'D:\vision\mnist\train-labels.idx1-ubyte'
filename_test_images = r'D:\vision\mnist\t10k-images.idx3-ubyte'
filename_test_labels = r'D:\vision\mnist\t10k-labels.idx1-ubyte'

train_images = load_images(filename_train_images)
train_labels = load_labels(filename_train_labels)
test_images = load_images(filename_test_images)
test_labels = load_labels(filename_test_labels)
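The cell above calls `load_images` and `load_labels`, which are not shown in this section. As a minimal sketch, assuming they read the standard MNIST IDX files and return an `(N, 784)` uint8 image array and an `(N,)` label array (which matches how `train_images` and `train_labels` are reshaped and indexed below), they could look like this. It is an illustration, not necessarily how the original helpers are written.

```python
import struct
import numpy as np

def load_images(path):
    """Read an IDX3 image file into a (num_images, rows * cols) uint8 array."""
    with open(path, 'rb') as f:
        # Header: magic number, image count, rows, cols as big-endian unsigned 32-bit ints
        magic, num, rows, cols = struct.unpack('>IIII', f.read(16))
        if magic != 2051:
            raise ValueError(f'{path}: magic number {magic}, expected 2051 for an image file')
        # Pixel data: one unsigned byte per pixel; .copy() makes the array writable
        data = np.frombuffer(f.read(), dtype=np.uint8).copy()
    return data.reshape(num, rows * cols)

def load_labels(path):
    """Read an IDX1 label file into a (num_items,) uint8 array."""
    with open(path, 'rb') as f:
        # Header: magic number and item count
        magic, num = struct.unpack('>II', f.read(8))
        if magic != 2049:
            raise ValueError(f'{path}: magic number {magic}, expected 2049 for a label file')
        labels = np.frombuffer(f.read(), dtype=np.uint8).copy()
    return labels[:num]
```

The magic numbers 2051 (0x00000803) and 2049 (0x00000801) identify IDX image and label files, so checking them catches swapped file paths early.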

In[28]:

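import numpy as np
import matplotlib.pyplot as plt

# Show the first 30 training digits in a 6x5 grid, with each label drawn on its image
fig = plt.figure(figsize=(8, 8))
fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.05, wspace=0.05)
for i in range(30):
    images = np.reshape(train_images[i], [28, 28])
    ax = fig.add_subplot(6, 5, i + 1, xticks=[], yticks=[])
    ax.imshow(images, cmap=plt.cm.binary, interpolation='nearest')
    ax.text(0, 7, str(train_labels[i]))
plt.show()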

In[44]:

# Use the 10,000-image test set as the validation set
x_train = train_images
y_train = train_labels
x_valid = test_images
y_valid = test_labels

In[45]:

print(x_train.shape)

In[46]:

from matplotlib import pyplot
pyplot.imshow(x_train[0].reshape((28, 28)), cmap='gray')

In[93]:

import torch

# Convert the NumPy arrays to tensors
x_train, y_train, x_valid, y_valid = map(torch.tensor, (x_train, y_train, x_valid, y_valid))
n, c = x_train.shape  # number of images, pixels per image

# Float inputs for the matrix multiplies; int64 (long) class indices for cross_entropy.
# .float() converts an existing tensor; torch.tensor(tensor, ...) would copy it and emit a warning.
x_train = x_train.float()
y_train = y_train.long()
x_valid = x_valid.float()
y_valid = y_valid.long()

print(x_train, y_train)
print('x_train', x_train.shape)
print(y_train.min(), y_train.max())
print(y_valid.dtype)
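The two conversions matter because `F.cross_entropy` expects int64 class indices and a matrix multiply needs both operands in the same floating-point dtype as the weights. A small sketch with hypothetical stand-in arrays (random uint8 pixels and four made-up labels) showing the conversions end to end:

```python
import numpy as np
import torch
import torch.nn.functional as F

# Hypothetical stand-ins for the raw arrays: uint8 pixels and uint8 labels
x_np = np.random.randint(0, 256, size=(4, 784), dtype=np.uint8)
y_np = np.array([5, 0, 4, 1], dtype=np.uint8)

x = torch.tensor(x_np)   # dtype torch.uint8
y = torch.tensor(y_np)   # dtype torch.uint8

x = x.float()            # float32, to match float32 weights in the matrix multiply
y = y.long()             # int64 class indices, as cross_entropy expects

# Dummy all-zero float weights, only to show that the dtypes line up
logits = x @ torch.zeros(784, 10)
loss = F.cross_entropy(logits, y)
print(x.dtype, y.dtype, loss.item())
```

With all-zero weights every class gets the same score, so the loss equals ln(10), about 2.303: a handy sanity value for a 10-class classifier that predicts uniformly.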

nn.Module or nn.functional?

Layers with parameters to be learned: use nn.Module

Everything else: use nn.functional
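The rule of thumb above can be seen in a few lines: `nn.Linear` is a module that creates and registers learnable `weight` and `bias` tensors, while `F.relu` is a stateless function. The batch below is hypothetical random data.

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(2, 784)                  # hypothetical batch of 2 flattened images

# Learnable parameters -> nn.Module: nn.Linear creates and registers its weight and bias
linear = nn.Linear(784, 10)
param_names = [name for name, _ in linear.named_parameters()]
print(param_names, linear.weight.shape)  # weight is stored as (out_features, in_features)

# No learnable parameters -> a plain function from torch.nn.functional is enough
z = linear(x)
y = F.relu(z)

# The module wrapper nn.ReLU computes the same thing but has nothing to register
relu_param_count = len(list(nn.ReLU().parameters()))
print(torch.equal(y, nn.ReLU()(z)), relu_param_count)
```

Because modules register their parameters, `model.parameters()` can hand them all to an optimizer, which is why the layers with weights are declared as modules.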

In[74]:

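import torch.nn.functional as F

loss_func = F.cross_entropy

def model(xb):
    # A single linear layer: (batch, 784) @ (784, 10) + (10,) -> (batch, 10) class scores.
    # weights and bias are globals created in the next cell.
    return xb.mm(weights) + bias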
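`loss_func` is `F.cross_entropy`, which takes the raw class scores produced by `model(xb)` together with integer class indices. A quick check on hypothetical random data that it equals a log-softmax over the classes followed by the negative log-likelihood averaged over the batch:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
xb = torch.rand(4, 784)                  # hypothetical batch of 4 flattened images
weights = torch.randn(784, 10) / 28      # hypothetical weights, scaled to keep scores moderate
bias = torch.zeros(10)
logits = xb.mm(weights) + bias           # (4, 784) @ (784, 10) + (10,) -> (4, 10) raw scores
targets = torch.tensor([3, 0, 9, 1])     # int64 class indices, one per row

ce = F.cross_entropy(logits, targets)
# Same quantity in two explicit steps: log-softmax over classes, then mean negative log-likelihood
two_step = F.nll_loss(F.log_softmax(logits, dim=1), targets)
# Fully by hand: take each row's log-probability of its target class and average the negatives
by_hand = -F.log_softmax(logits, dim=1)[torch.arange(4), targets].mean()

print(logits.shape, ce.item(), two_step.item(), by_hand.item())
```

Since the softmax is applied inside the loss, the model itself should return unnormalized scores, as `model` above does.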

In[90]:

bs = 64               # batch size
xb = x_train[0:bs]    # first mini-batch of inputs
yb = y_train[0:bs]    # and its labels
weights = torch.randn([784, 10], dtype=torch.float, requires_grad=True)
bias = torch.zeros(10, requires_grad=True)
print(loss_func(model(xb), yb))
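The cell above evaluates the loss once. A minimal sketch of how the functional model could be trained by hand with autograd, using hypothetical random data in [0, 1]. Two choices here are assumptions, not the author's: the weights are scaled by 1/sqrt(784) (as in PyTorch's "What is torch.nn really?" tutorial) instead of plain `torch.randn`, and the learning rate of 0.01 is purely illustrative.

```python
import math
import torch
import torch.nn.functional as F

torch.manual_seed(0)
# Hypothetical stand-in batch: 64 "images" with pixel values in [0, 1] and random labels
xb = torch.rand(64, 784)
yb = torch.randint(0, 10, (64,))

# Assumption: weights scaled by 1/sqrt(784) to keep the initial scores small
weights = (torch.randn(784, 10) / math.sqrt(784)).requires_grad_()
bias = torch.zeros(10, requires_grad=True)

def model(xb):
    return xb.mm(weights) + bias

lr = 0.01  # illustrative learning rate
losses = []
for _ in range(20):
    loss = F.cross_entropy(model(xb), yb)
    losses.append(loss.item())
    loss.backward()                 # writes d(loss)/d(weights) and d(loss)/d(bias) into .grad
    with torch.no_grad():           # the update itself must not be recorded by autograd
        weights -= lr * weights.grad
        bias -= lr * bias.grad
        weights.grad.zero_()        # reset, since backward() accumulates into .grad
        bias.grad.zero_()

print(losses[0], losses[-1])
```

This manual update and gradient reset is the bookkeeping that `optim.SGD` and `opt.zero_grad()` take over later in the section.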

In[80]:

from torch import nn

class Mnist_NN(nn.Module):
    def __init__(self):
        super().__init__()
        self.hidden1 = nn.Linear(784, 128)
        self.hidden2 = nn.Linear(128, 256)
        self.out = nn.Linear(256, 10)

    def forward(self, x):
        # Two ReLU hidden layers, then raw scores for the 10 digit classes
        x = F.relu(self.hidden1(x))
        x = F.relu(self.hidden2(x))
        x = self.out(x)
        return x

In[81]:

net = Mnist_NN()
print(net)

In[82]:

for name, parameter in net.named_parameters():
    print(name, parameter, parameter.size())
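Printing every parameter tensor is verbose. A sketch that summarises the same information (the shape of each named parameter and the total count), plus one forward pass on a hypothetical random batch of 64 to confirm the output shape. The class is repeated with the same layer sizes as `Mnist_NN` so the block runs on its own.

```python
import torch
from torch import nn
import torch.nn.functional as F

class Mnist_NN(nn.Module):
    def __init__(self):
        super().__init__()
        self.hidden1 = nn.Linear(784, 128)
        self.hidden2 = nn.Linear(128, 256)
        self.out = nn.Linear(256, 10)

    def forward(self, x):
        x = F.relu(self.hidden1(x))
        x = F.relu(self.hidden2(x))
        return self.out(x)

net = Mnist_NN()
# Shape of every registered parameter, keyed by its qualified name
shapes = {name: tuple(p.shape) for name, p in net.named_parameters()}
total = sum(p.numel() for p in net.parameters())
print(shapes)
print(total)  # 136074

out = net(torch.randn(64, 784))  # hypothetical batch of 64 flattened images
print(out.shape)  # torch.Size([64, 10])
```

The total works out as (784·128 + 128) + (128·256 + 256) + (256·10 + 10) = 136,074. `nn.Linear` stores each weight as (out_features, in_features), hence `hidden1.weight` is (128, 784).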

In[83]:

# Get the data: wrap the tensors in datasets and mini-batch loaders
from torch.utils.data import TensorDataset
from torch.utils.data import DataLoader

train_ds = TensorDataset(x_train, y_train)
train_dl = DataLoader(train_ds, batch_size=bs, shuffle=True)
valid_ds = TensorDataset(x_valid, y_valid)
valid_dl = DataLoader(valid_ds, batch_size=bs * 2)
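`TensorDataset` pairs the i-th input with the i-th label, and `DataLoader` slices the dataset into mini-batches. A sketch on ten hypothetical samples with `batch_size=4` shows that the final batch is simply smaller when the dataset size is not a multiple of the batch size, and that `shuffle=True` changes only the order:

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

# Ten hypothetical samples with 3 features each, plus integer labels 0..9
x = torch.arange(30, dtype=torch.float).reshape(10, 3)
y = torch.arange(10)

ds = TensorDataset(x, y)
print(len(ds), ds[0])  # indexing returns an (x_i, y_i) tuple

# Without shuffling: batches of 4, 4 and a final partial batch of 2
dl = DataLoader(ds, batch_size=4)
batch_sizes = [len(xb) for xb, yb in dl]
print(batch_sizes)  # [4, 4, 2]

# shuffle=True reorders samples each epoch, but every sample still appears exactly once
seen = torch.cat([yb for _, yb in DataLoader(ds, batch_size=4, shuffle=True)])
print(sorted(seen.tolist()))
```

Passing `drop_last=True` to `DataLoader` would discard that partial batch instead.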

In[84]:

def get_data(train_ds, valid_ds, bs):
    return (
        DataLoader(train_ds, batch_size=bs, shuffle=True),
        DataLoader(valid_ds, batch_size=bs * 2),
    )

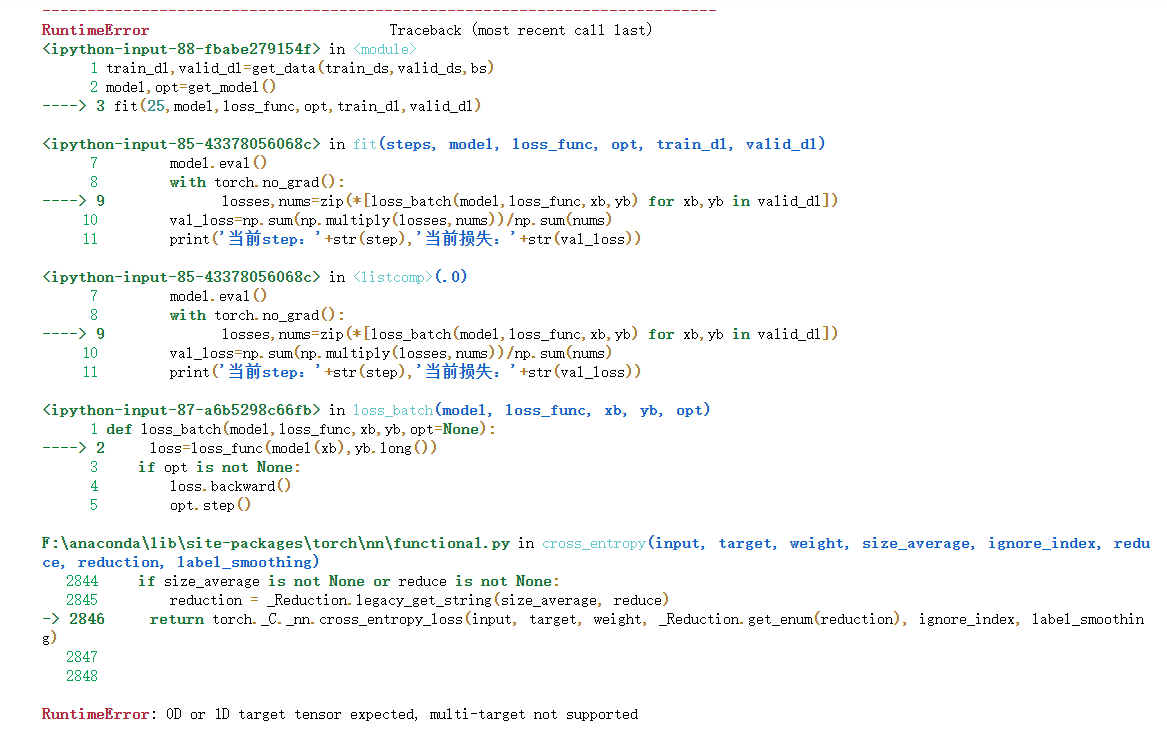

In[85]:

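import numpy as np

def fit(steps, model, loss_func, opt, train_dl, valid_dl):
    for step in range(steps):
        # One pass over the training set, updating the weights after every batch
        model.train()
        for xb, yb in train_dl:
            loss_batch(model, loss_func, xb, yb, opt)

        # Evaluate on the validation set without tracking gradients
        model.eval()
        with torch.no_grad():
            losses, nums = zip(*[loss_batch(model, loss_func, xb, yb) for xb, yb in valid_dl])
        # Mean of the per-batch losses, weighted by batch size
        val_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)
        print('current step:' + str(step), 'current loss:' + str(val_loss))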
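`loss_func` returns the mean loss of each batch, and the validation loader's last batch is smaller than the rest (10,000 test images in batches of `bs * 2` = 128 leave a final batch of 16). Weighting each batch mean by its size, as `fit` does, recovers the mean over all samples. A sketch with two hypothetical batches:

```python
import numpy as np

# Hypothetical (batch mean loss, batch size) pairs, as returned by loss_batch
results = [(0.50, 128), (0.30, 32)]
losses, nums = zip(*results)

# Sum of per-sample losses divided by the total number of samples
val_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)
# Unweighted mean of batch means gives the small batch as much weight as the large one
naive = np.mean(losses)

print(val_loss, naive)
```

Here the weighted value is (64 + 9.6) / 160 = 0.46, while the plain mean of the two batch means is 0.4.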

In[86]:

from torch import optim

def get_model():
    model = Mnist_NN()
    return model, optim.SGD(model.parameters(), lr=0.001)
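With its default settings (momentum=0, weight_decay=0), `optim.SGD` applies the plain update `p <- p - lr * p.grad` to every parameter it was given. A sketch on a hypothetical three-element parameter, checking one `opt.step()` against that formula:

```python
import torch
from torch import optim

torch.manual_seed(0)
w = torch.randn(3, requires_grad=True)  # hypothetical parameter vector
x = torch.tensor([1.0, 2.0, 3.0])

opt = optim.SGD([w], lr=0.001)          # same learning rate as get_model()

loss = (w * x).sum() ** 2
loss.backward()

start = w.detach().clone()
expected = start - 0.001 * w.grad       # plain SGD update: w <- w - lr * grad
opt.step()
print(torch.allclose(w.detach(), expected))  # True
```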

In[87]:

def loss_batch(model, loss_func, xb, yb, opt=None):
    loss = loss_func(model(xb), yb.long())
    if opt is not None:
        # Training: backpropagate, update the weights, then clear the gradients
        loss.backward()
        opt.step()
        opt.zero_grad()
    return loss.item(), len(xb)
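`loss_batch` calls `opt.zero_grad()` after every `opt.step()` because PyTorch accumulates gradients: each `backward()` adds into a parameter's `.grad` instead of overwriting it. A tiny sketch on a hypothetical scalar parameter `w`:

```python
import torch

w = torch.tensor(2.0, requires_grad=True)

# d(3w)/dw = 3; calling backward() twice adds the two gradients together in w.grad
(3 * w).backward()
(3 * w).backward()
accumulated = w.grad.clone()
print(accumulated.item())  # 6.0

# Clearing .grad (optimizer.zero_grad() does this for every parameter it manages)
w.grad = None
(3 * w).backward()
print(w.grad.item())  # 3.0
```

In recent PyTorch versions `zero_grad()` sets gradients to `None` by default rather than filling them with zeros; either way the next `backward()` starts fresh.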

In[88]:

train_dl, valid_dl = get_data(train_ds, valid_ds, bs)
model, opt = get_model()
fit(25, model, loss_func, opt, train_dl, valid_dl)