DataLoader produces an error.

The code for the Dataset that the DataLoader wraps is below:

    class PandaDataset(Dataset):

        def __init__(self, path, train, transform):
            self.path = path
            self.names = list(pd.read_csv(train).image_id)
            self.labels = list(pd.read_csv(train).isup_grade)
            self.transform = transform

        def __len__(self):
            return len(self.names)

        def __getitem__(self, idx):
            label = self.labels[idx]
            name = self.names[idx]
            img = skimage.io.MultiImage(os.path.join(self.path, name + '.tiff'))[-1]
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            list_of_images = []
            tiles = tile(img)
            for img in tiles:
                if x:
                    augmented = get_transforms(data='valid')(image=img)
                    img = augmented['image']
                list_of_images.append(img)
            new_tiles = torch.stack(list_of_images, axis=0)
            return new_tiles, torch.Tensor(label)

When I debug it with the code below, outside of the DataLoader, it works fine:

    img = skimage.io.MultiImage(os.path.join(train_images, '0005f7aaab2800f6170c399693a96917' + '.tiff'))[-1]
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

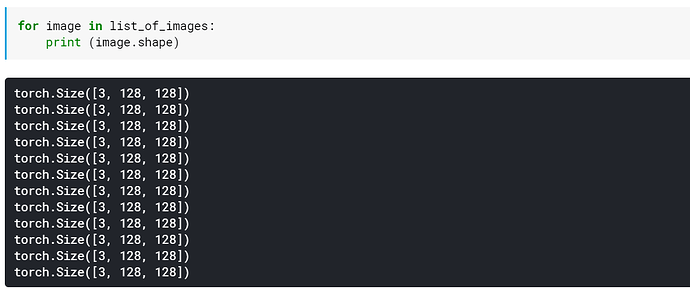

    list_of_images = []
    tiles = tile(img)
    for img in tiles:
        if x:
            augmented = get_transforms(data='train')(image=img)
            img = augmented['image']
        list_of_images.append(img)
    new_tiles = torch.stack(list_of_images, axis=0)

The tile function simply crops an image into 12 smaller images and appends them to a NumPy array.
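
For illustration, a function of that kind might look roughly like the sketch below. The actual tile is not shown in this post, so everything here is an assumption: the name tile_sketch, the 128-pixel tile size, the white padding, and the rule of keeping the 12 darkest tiles are all hypothetical choices, not the real implementation.

```python
import numpy as np


def tile_sketch(img, tile_size=128, n_tiles=12):
    """Hypothetical stand-in for `tile`: split an H x W x C image into square
    tiles and return n_tiles of them stacked in one NumPy array."""
    h, w, c = img.shape
    # Pad with white so height and width become multiples of tile_size.
    pad_h = (tile_size - h % tile_size) % tile_size
    pad_w = (tile_size - w % tile_size) % tile_size
    img = np.pad(img, [(0, pad_h), (0, pad_w), (0, 0)], constant_values=255)
    # Rearrange into (num_tiles, tile_size, tile_size, c).
    rows, cols = img.shape[0] // tile_size, img.shape[1] // tile_size
    tiles = img.reshape(rows, tile_size, cols, tile_size, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4).reshape(-1, tile_size, tile_size, c)
    # If the image yields fewer than n_tiles tiles, add all-white tiles.
    if len(tiles) < n_tiles:
        extra = n_tiles - len(tiles)
        tiles = np.pad(tiles, [(0, extra), (0, 0), (0, 0), (0, 0)], constant_values=255)
    # Keep the n_tiles tiles with the smallest pixel sum (least white background).
    order = np.argsort(tiles.reshape(len(tiles), -1).sum(axis=-1))[:n_tiles]
    return tiles[order]


# Synthetic white image with one black corner, used only to check shapes.
demo = np.full((300, 500, 3), 255, dtype=np.uint8)
demo[:128, :128] = 0
tiles = tile_sketch(demo)
print(tiles.shape)  # (12, 128, 128, 3)
```

Because the result always has shape (12, tile_size, tile_size, 3), every item produces the same number of tiles, which is what torch.stack over the per-tile tensors relies on.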

Thanks.