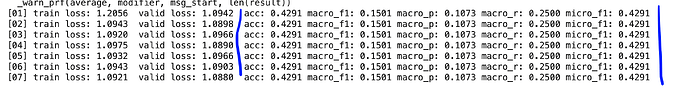

I am working on a classification problem using a VGG16 model pre-trained on ImageNet. The training and validation losses change from epoch to epoch, but the evaluation metrics (accuracy, F1-score, precision, recall) stay exactly the same across all epochs, as can be seen in the attached image.

How can I make sure the evaluation metrics change accordingly? I have to choose the checkpoint based on the best F1-score on the test set. Below is the code for validation and metrics calculation:

```python
import numpy as np
import torch
from sklearn import metrics


# model, valid_loader, criterion and device are defined elsewhere in the script
def validate():

    model.eval()
    valid_losses = []
    y_true, y_pred = [], []

    with torch.no_grad():
        for batch, target in valid_loader:
            # move data to the device
            batch = batch.to(device)
            target = target.to(device)

            # make predictions
            predictions = model(batch)

            # calculate loss
            loss = criterion(predictions, target)

            # track losses and predictions
            valid_losses.append(float(loss.item()))
            y_true.extend(target.cpu().numpy())
            y_pred.extend(predictions.argmax(dim=1).cpu().numpy())

    y_true = np.array(y_true)
    y_pred = np.array(y_pred)
    valid_losses = np.array(valid_losses)

    # calculate the mean validation loss
    valid_loss = valid_losses.mean()

    return valid_loss, y_true, y_pred

def evaluation_analysis(y_true, y_pred):
    # calculate validation accuracy from y_true and y_pred
    valid_accuracy = np.mean(y_pred == y_true)

    # macro average
    macro_f1 = metrics.f1_score(y_true, y_pred, average='macro')
    macro_p = metrics.precision_score(y_true, y_pred, average='macro')
    macro_r = metrics.recall_score(y_true, y_pred, average='macro')

    # micro average
    micro_f1 = metrics.f1_score(y_true, y_pred, average='micro')

    # confusion matrix
    cm = metrics.confusion_matrix(y_true, y_pred)

    return valid_accuracy, macro_f1, macro_p, macro_r, micro_f1, cm
```
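
For the checkpoint selection itself, here is a minimal sketch of how the best epoch might be tracked by macro F1. It also includes a small check that may help explain the static metrics: if every validation sample gets the same predicted class, the argmax-based metrics cannot move even while the loss does. The names `BestF1Tracker` and `predicted_class_counts` are hypothetical helpers, not part of my code above, and the sketch assumes `macro_f1` and `y_pred` come from `evaluation_analysis()` and `validate()`.

```python
from collections import Counter


class BestF1Tracker:
    """Remember the epoch with the highest macro F1 seen so far (hypothetical helper)."""

    def __init__(self):
        self.best_f1 = float("-inf")
        self.best_epoch = None

    def update(self, epoch, macro_f1):
        """Return True only when this epoch strictly improves on the best macro F1."""
        improved = macro_f1 > self.best_f1
        if improved:
            self.best_f1 = macro_f1
            self.best_epoch = epoch
        return improved


def predicted_class_counts(y_pred):
    """Count how many samples were assigned to each predicted class.

    A result with a single key means the model predicts one class for
    every sample, which would keep accuracy and F1 constant.
    """
    return dict(Counter(int(p) for p in y_pred))
```

In the training loop, `update()` could be called once per epoch after `evaluation_analysis()`, with `torch.save(model.state_dict(), ...)` run only when it returns `True`. Printing `predicted_class_counts(y_pred)` each epoch would show whether the predictions ever change.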