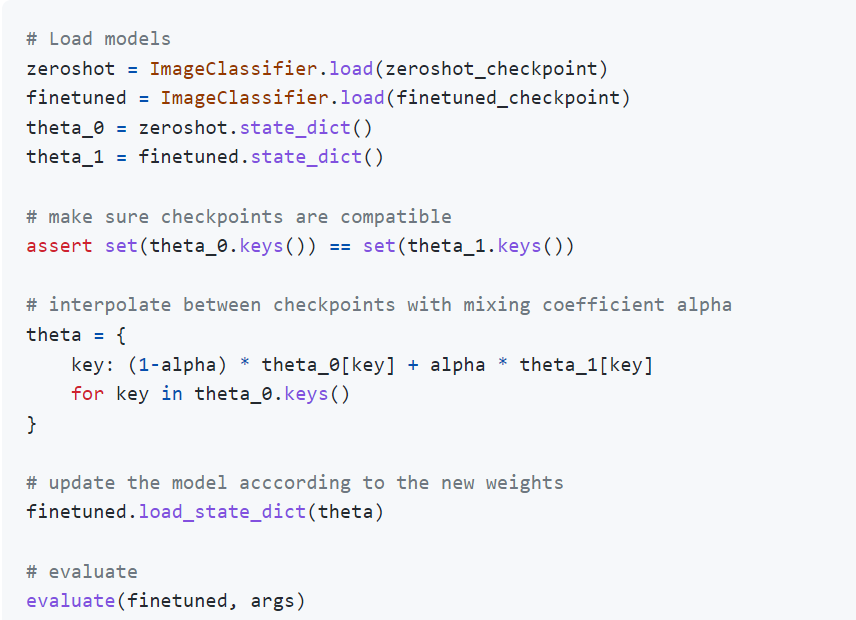

I am trying to add two transformer model checkpoints that differ in number of layers, hidden size, number of attention heads, and intermediate size. The sample code in the attached screenshot shows how I am adding the two checkpoints, which were trained on different data. Could somebody please help me with this issue? I have been unable to figure it out by myself.
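
To make the difficulty concrete, here is a minimal sketch of why element-wise addition is not defined for checkpoints like these. It is not the code from the screenshot. It assumes each checkpoint can be summarized as a mapping from parameter name to tensor shape; the function name `find_shape_mismatches`, the parameter names, and all sizes are hypothetical and chosen only for illustration.

```python
def find_shape_mismatches(shapes_a, shapes_b):
    """Return the parameters that cannot be added element-wise.

    Each argument maps a parameter name to its shape tuple.
    A parameter is reported if it is missing from one checkpoint
    (shape recorded as None) or if the two shapes differ.
    """
    mismatches = {}
    for name in sorted(set(shapes_a) | set(shapes_b)):
        shape_a = shapes_a.get(name)  # None when the parameter is absent
        shape_b = shapes_b.get(name)
        if shape_a != shape_b:
            mismatches[name] = (shape_a, shape_b)
    return mismatches


# Hypothetical shapes: a 1-layer model with hidden size 256
# and a 2-layer model with hidden size 768.
ckpt_small = {
    "layer.0.attention.weight": (256, 256),
    "layer.0.intermediate.weight": (1024, 256),
}
ckpt_large = {
    "layer.0.attention.weight": (768, 768),
    "layer.0.intermediate.weight": (3072, 768),
    "layer.1.attention.weight": (768, 768),
}

mismatches = find_shape_mismatches(ckpt_small, ckpt_large)
for name, (shape_a, shape_b) in mismatches.items():
    print(f"{name}: {shape_a} vs {shape_b}")
```

With these illustrative shapes every parameter is reported: the shared ones differ in size because of the hidden and intermediate sizes, and the second layer has no counterpart in the smaller model. Any working approach would first have to decide how to handle both kinds of mismatch.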