Thanks for your reply. This is a ResNet18 module that has been converted to int8 mode:

import torch

from torch.nn import *

class FxModule(torch.nn.Module):

    def __init__(self):
        super().__init__()
        self.conv1 = torch.load(r'fx\conv1.pt') # Module( (0): QuantizedConv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU() )
        self.layer1 = torch.load(r'fx\layer1.pt') # Module( (0): Module( (left): Module( (0): QuantizedConv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (1): Module( (left): Module( (0): QuantizedConv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) )
        self.layer2 = torch.load(r'fx\layer2.pt') # Module( (0): Module( (left): Module( (0): QuantizedConv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) (shortcut): Module( (0): QuantizedConv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), scale=1.0, zero_point=0, bias=False) (1): QuantizedBatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (1): Module( (left): Module( (0): QuantizedConv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) )
        self.layer3 = torch.load(r'fx\layer3.pt') # Module( (0): Module( (left): Module( (0): QuantizedConv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) (shortcut): Module( (0): QuantizedConv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), scale=1.0, zero_point=0, bias=False) (1): QuantizedBatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (1): Module( (left): Module( (0): QuantizedConv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) )
        self.layer4 = torch.load(r'fx\layer4.pt') # Module( (0): Module( (left): Module( (0): QuantizedConv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) (shortcut): Module( (0): QuantizedConv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), scale=1.0, zero_point=0, bias=False) (1): QuantizedBatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) (1): Module( (left): Module( (0): QuantizedConv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (1): QuantizedBatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) (2): ReLU(inplace=True) (3): QuantizedConv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), bias=False) (4): QuantizedBatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) ) ) )
        self.avgpool = torch.load(r'fx\avgpool.pt') # AdaptiveAvgPool2d(output_size=(1, 1))
        self.fc = torch.load(r'fx\fc.pt') # QuantizedLinear(in_features=512, out_features=10, scale=1.0, zero_point=0, qscheme=torch.per_channel_affine)
        self.register_buffer('conv1_0_input_scale_0', torch.empty([]))
        self.register_buffer('conv1_0_input_zero_point_0', torch.empty([]))
        self.register_buffer('layer1_0_relu_scale_0', torch.empty([]))
        self.register_buffer('layer1_0_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer1_1_relu_scale_0', torch.empty([]))
        self.register_buffer('layer1_1_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer2_0_relu_scale_0', torch.empty([]))
        self.register_buffer('layer2_0_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer2_1_relu_scale_0', torch.empty([]))
        self.register_buffer('layer2_1_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer3_0_relu_scale_0', torch.empty([]))
        self.register_buffer('layer3_0_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer3_1_relu_scale_0', torch.empty([]))
        self.register_buffer('layer3_1_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer4_0_relu_scale_0', torch.empty([]))
        self.register_buffer('layer4_0_relu_zero_point_0', torch.empty([]))
        self.register_buffer('layer4_1_relu_scale_0', torch.empty([]))
        self.register_buffer('layer4_1_relu_zero_point_0', torch.empty([]))
        self.load_state_dict(torch.load(r'fx/state_dict.pt'))

    def forward(self, x):
        conv1_0_input_scale_0 = self.conv1_0_input_scale_0
        conv1_0_input_zero_point_0 = self.conv1_0_input_zero_point_0
        conv1_0_input_dtype_0 = self.conv1_0_input_dtype_0
        quantize_per_tensor_1 = torch.quantize_per_tensor(x, conv1_0_input_scale_0, conv1_0_input_zero_point_0, conv1_0_input_dtype_0); x = conv1_0_input_scale_0 = conv1_0_input_zero_point_0 = conv1_0_input_dtype_0 = None
        conv1_0 = getattr(self.conv1, "0")(quantize_per_tensor_1); quantize_per_tensor_1 = None
        conv1_1 = getattr(self.conv1, "1")(conv1_0); conv1_0 = None
        conv1_2 = getattr(self.conv1, "2")(conv1_1); conv1_1 = None
        layer1_0_left_0 = getattr(getattr(self.layer1, "0").left, "0")(conv1_2)
        layer1_0_left_1 = getattr(getattr(self.layer1, "0").left, "1")(layer1_0_left_0); layer1_0_left_0 = None
        layer1_0_left_2 = getattr(getattr(self.layer1, "0").left, "2")(layer1_0_left_1); layer1_0_left_1 = None
        layer1_0_left_3 = getattr(getattr(self.layer1, "0").left, "3")(layer1_0_left_2); layer1_0_left_2 = None
        layer1_0_left_4 = getattr(getattr(self.layer1, "0").left, "4")(layer1_0_left_3); layer1_0_left_3 = None
        layer1_0_relu_scale_0 = self.layer1_0_relu_scale_0
        layer1_0_relu_zero_point_0 = self.layer1_0_relu_zero_point_0
        add_relu = torch.ops.quantized.add_relu(layer1_0_left_4, conv1_2, layer1_0_relu_scale_0, layer1_0_relu_zero_point_0); layer1_0_left_4 = conv1_2 = layer1_0_relu_scale_0 = layer1_0_relu_zero_point_0 = None
        layer1_1_left_0 = getattr(getattr(self.layer1, "1").left, "0")(add_relu)
        layer1_1_left_1 = getattr(getattr(self.layer1, "1").left, "1")(layer1_1_left_0); layer1_1_left_0 = None
        layer1_1_left_2 = getattr(getattr(self.layer1, "1").left, "2")(layer1_1_left_1); layer1_1_left_1 = None
        layer1_1_left_3 = getattr(getattr(self.layer1, "1").left, "3")(layer1_1_left_2); layer1_1_left_2 = None
        layer1_1_left_4 = getattr(getattr(self.layer1, "1").left, "4")(layer1_1_left_3); layer1_1_left_3 = None
        layer1_1_relu_scale_0 = self.layer1_1_relu_scale_0
        layer1_1_relu_zero_point_0 = self.layer1_1_relu_zero_point_0
        add_relu_1 = torch.ops.quantized.add_relu(layer1_1_left_4, add_relu, layer1_1_relu_scale_0, layer1_1_relu_zero_point_0); layer1_1_left_4 = add_relu = layer1_1_relu_scale_0 = layer1_1_relu_zero_point_0 = None
        layer2_0_left_0 = getattr(getattr(self.layer2, "0").left, "0")(add_relu_1)
        layer2_0_left_1 = getattr(getattr(self.layer2, "0").left, "1")(layer2_0_left_0); layer2_0_left_0 = None
        layer2_0_left_2 = getattr(getattr(self.layer2, "0").left, "2")(layer2_0_left_1); layer2_0_left_1 = None
        layer2_0_left_3 = getattr(getattr(self.layer2, "0").left, "3")(layer2_0_left_2); layer2_0_left_2 = None
        layer2_0_left_4 = getattr(getattr(self.layer2, "0").left, "4")(layer2_0_left_3); layer2_0_left_3 = None
        layer2_0_shortcut_0 = getattr(getattr(self.layer2, "0").shortcut, "0")(add_relu_1); add_relu_1 = None
        layer2_0_shortcut_1 = getattr(getattr(self.layer2, "0").shortcut, "1")(layer2_0_shortcut_0); layer2_0_shortcut_0 = None
        layer2_0_relu_scale_0 = self.layer2_0_relu_scale_0
        layer2_0_relu_zero_point_0 = self.layer2_0_relu_zero_point_0
        add_relu_2 = torch.ops.quantized.add_relu(layer2_0_left_4, layer2_0_shortcut_1, layer2_0_relu_scale_0, layer2_0_relu_zero_point_0); layer2_0_left_4 = layer2_0_shortcut_1 = layer2_0_relu_scale_0 = layer2_0_relu_zero_point_0 = None
        layer2_1_left_0 = getattr(getattr(self.layer2, "1").left, "0")(add_relu_2)
        layer2_1_left_1 = getattr(getattr(self.layer2, "1").left, "1")(layer2_1_left_0); layer2_1_left_0 = None
        layer2_1_left_2 = getattr(getattr(self.layer2, "1").left, "2")(layer2_1_left_1); layer2_1_left_1 = None
        layer2_1_left_3 = getattr(getattr(self.layer2, "1").left, "3")(layer2_1_left_2); layer2_1_left_2 = None
        layer2_1_left_4 = getattr(getattr(self.layer2, "1").left, "4")(layer2_1_left_3); layer2_1_left_3 = None
        layer2_1_relu_scale_0 = self.layer2_1_relu_scale_0
        layer2_1_relu_zero_point_0 = self.layer2_1_relu_zero_point_0
        add_relu_3 = torch.ops.quantized.add_relu(layer2_1_left_4, add_relu_2, layer2_1_relu_scale_0, layer2_1_relu_zero_point_0); layer2_1_left_4 = add_relu_2 = layer2_1_relu_scale_0 = layer2_1_relu_zero_point_0 = None
        layer3_0_left_0 = getattr(getattr(self.layer3, "0").left, "0")(add_relu_3)
        layer3_0_left_1 = getattr(getattr(self.layer3, "0").left, "1")(layer3_0_left_0); layer3_0_left_0 = None
        layer3_0_left_2 = getattr(getattr(self.layer3, "0").left, "2")(layer3_0_left_1); layer3_0_left_1 = None
        layer3_0_left_3 = getattr(getattr(self.layer3, "0").left, "3")(layer3_0_left_2); layer3_0_left_2 = None
        layer3_0_left_4 = getattr(getattr(self.layer3, "0").left, "4")(layer3_0_left_3); layer3_0_left_3 = None
        layer3_0_shortcut_0 = getattr(getattr(self.layer3, "0").shortcut, "0")(add_relu_3); add_relu_3 = None
        layer3_0_shortcut_1 = getattr(getattr(self.layer3, "0").shortcut, "1")(layer3_0_shortcut_0); layer3_0_shortcut_0 = None
        layer3_0_relu_scale_0 = self.layer3_0_relu_scale_0
        layer3_0_relu_zero_point_0 = self.layer3_0_relu_zero_point_0
        add_relu_4 = torch.ops.quantized.add_relu(layer3_0_left_4, layer3_0_shortcut_1, layer3_0_relu_scale_0, layer3_0_relu_zero_point_0); layer3_0_left_4 = layer3_0_shortcut_1 = layer3_0_relu_scale_0 = layer3_0_relu_zero_point_0 = None
        layer3_1_left_0 = getattr(getattr(self.layer3, "1").left, "0")(add_relu_4)
        layer3_1_left_1 = getattr(getattr(self.layer3, "1").left, "1")(layer3_1_left_0); layer3_1_left_0 = None
        layer3_1_left_2 = getattr(getattr(self.layer3, "1").left, "2")(layer3_1_left_1); layer3_1_left_1 = None
        layer3_1_left_3 = getattr(getattr(self.layer3, "1").left, "3")(layer3_1_left_2); layer3_1_left_2 = None
        layer3_1_left_4 = getattr(getattr(self.layer3, "1").left, "4")(layer3_1_left_3); layer3_1_left_3 = None
        layer3_1_relu_scale_0 = self.layer3_1_relu_scale_0
        layer3_1_relu_zero_point_0 = self.layer3_1_relu_zero_point_0
        add_relu_5 = torch.ops.quantized.add_relu(layer3_1_left_4, add_relu_4, layer3_1_relu_scale_0, layer3_1_relu_zero_point_0); layer3_1_left_4 = add_relu_4 = layer3_1_relu_scale_0 = layer3_1_relu_zero_point_0 = None
        layer4_0_left_0 = getattr(getattr(self.layer4, "0").left, "0")(add_relu_5)
        layer4_0_left_1 = getattr(getattr(self.layer4, "0").left, "1")(layer4_0_left_0); layer4_0_left_0 = None
        layer4_0_left_2 = getattr(getattr(self.layer4, "0").left, "2")(layer4_0_left_1); layer4_0_left_1 = None
        layer4_0_left_3 = getattr(getattr(self.layer4, "0").left, "3")(layer4_0_left_2); layer4_0_left_2 = None
        layer4_0_left_4 = getattr(getattr(self.layer4, "0").left, "4")(layer4_0_left_3); layer4_0_left_3 = None
        layer4_0_shortcut_0 = getattr(getattr(self.layer4, "0").shortcut, "0")(add_relu_5); add_relu_5 = None
        layer4_0_shortcut_1 = getattr(getattr(self.layer4, "0").shortcut, "1")(layer4_0_shortcut_0); layer4_0_shortcut_0 = None
        layer4_0_relu_scale_0 = self.layer4_0_relu_scale_0
        layer4_0_relu_zero_point_0 = self.layer4_0_relu_zero_point_0
        add_relu_6 = torch.ops.quantized.add_relu(layer4_0_left_4, layer4_0_shortcut_1, layer4_0_relu_scale_0, layer4_0_relu_zero_point_0); layer4_0_left_4 = layer4_0_shortcut_1 = layer4_0_relu_scale_0 = layer4_0_relu_zero_point_0 = None
        layer4_1_left_0 = getattr(getattr(self.layer4, "1").left, "0")(add_relu_6)
        layer4_1_left_1 = getattr(getattr(self.layer4, "1").left, "1")(layer4_1_left_0); layer4_1_left_0 = None
        layer4_1_left_2 = getattr(getattr(self.layer4, "1").left, "2")(layer4_1_left_1); layer4_1_left_1 = None
        layer4_1_left_3 = getattr(getattr(self.layer4, "1").left, "3")(layer4_1_left_2); layer4_1_left_2 = None
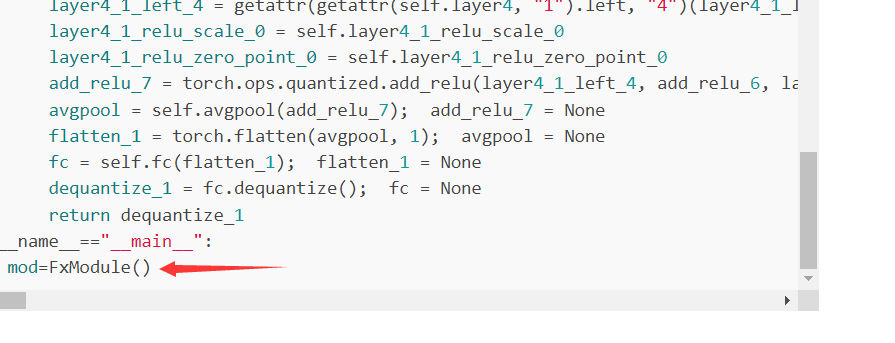

        layer4_1_left_4 = getattr(getattr(self.layer4, "1").left, "4")(layer4_1_left_3); layer4_1_left_3 = None
        layer4_1_relu_scale_0 = self.layer4_1_relu_scale_0
        layer4_1_relu_zero_point_0 = self.layer4_1_relu_zero_point_0
        add_relu_7 = torch.ops.quantized.add_relu(layer4_1_left_4, add_relu_6, layer4_1_relu_scale_0, layer4_1_relu_zero_point_0); layer4_1_left_4 = add_relu_6 = layer4_1_relu_scale_0 = layer4_1_relu_zero_point_0 = None
        avgpool = self.avgpool(add_relu_7); add_relu_7 = None
        flatten_1 = torch.flatten(avgpool, 1); avgpool = None
        fc = self.fc(flatten_1); flatten_1 = None
        dequantize_1 = fc.dequantize(); fc = None
        return dequantize_1

if __name__ == "__main__":
    mod = FxModule()
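
One thing worth cross-checking in generated code like this is whether every `self.<name>` that `forward` reads is actually created in `__init__`. In the module above, `forward` reads `self.conv1_0_input_dtype_0`, but `__init__` never assigns or registers it. Below is a minimal sketch of such a check using only the standard `ast` module. `SOURCE` is a hypothetical, trimmed-down stand-in for the real file, and the helper names (`defined_in_init`, `read_in_forward`, `missing_attributes`) are made up for illustration.

```python
import ast

# Hypothetical, shortened stand-in for the exported module source.
SOURCE = '''
class FxModule:
    def __init__(self):
        self.conv1 = object()
        self.register_buffer('conv1_0_input_scale_0', None)
        self.register_buffer('conv1_0_input_zero_point_0', None)

    def forward(self, x):
        scale = self.conv1_0_input_scale_0
        zero_point = self.conv1_0_input_zero_point_0
        dtype = self.conv1_0_input_dtype_0
        return self.conv1(x)
'''


def _is_self_attr(node, ctx_type):
    """True for `self.<attr>` nodes used in the given context (Load or Store)."""
    return (
        isinstance(node, ast.Attribute)
        and isinstance(node.ctx, ctx_type)
        and isinstance(node.value, ast.Name)
        and node.value.id == "self"
    )


def defined_in_init(func):
    """Names created via `self.x = ...` or `self.register_buffer('x', ...)`."""
    names = set()
    for node in ast.walk(func):
        if _is_self_attr(node, ast.Store):
            names.add(node.attr)
        elif (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "register_buffer"
            and node.args
            and isinstance(node.args[0], ast.Constant)
        ):
            names.add(node.args[0].value)
    return names


def read_in_forward(func):
    """Names read as `self.x` anywhere inside the function body."""
    return {n.attr for n in ast.walk(func) if _is_self_attr(n, ast.Load)}


def missing_attributes(source, class_name="FxModule"):
    """Attributes that forward reads but __init__ never creates."""
    tree = ast.parse(source)
    cls = next(
        n for n in tree.body
        if isinstance(n, ast.ClassDef) and n.name == class_name
    )
    methods = {n.name: n for n in cls.body if isinstance(n, ast.FunctionDef)}
    return sorted(
        read_in_forward(methods["forward"]) - defined_in_init(methods["__init__"])
    )


print(missing_attributes(SOURCE))  # prints ['conv1_0_input_dtype_0']
```

If the same check is run on the full file and reports `conv1_0_input_dtype_0`, calling `forward` would raise an `AttributeError` at that line. One possible fix, which is an assumption and not something confirmed above, is to set `self.conv1_0_input_dtype_0 = torch.quint8` in `__init__`, since `torch.quantize_per_tensor` expects a quantized dtype as its last argument.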