```python
import torch
import torch.nn as nn

loss = nn.CrossEntropyLoss()
input = torch.randn(3, 5, requires_grad=True)
target = torch.empty(3, dtype=torch.long).random_(5)
output = loss(input, target)
output.backward()
```

This is the `nn.CrossEntropyLoss` example from the PyTorch docs:

https://pytorch.org/docs/stable/nn.html#torch.nn.CrossEntropyLoss

I ran it on torch version 0.1.5, and it throws a "does not have a grad_fn" error.

I assume you are running this code in PyTorch 1.5.0 (not 0.1.5)?

This code snippet should work, and it does work for me in this version.

Could you post the error message as well as the output of `print(torch.__version__)`, please?

I am running this on Colab. When I run the snippet on my own system with torch 1.4.0, it runs correctly.

Could you add print(output) before calling output.backward() and post it here?

You can add code snippets by wrapping them into three backticks ```, which makes debugging easier.

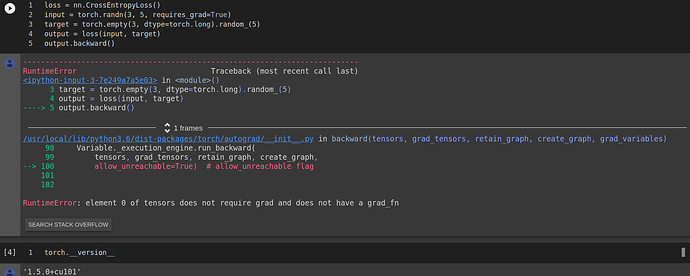

```python
import torch
import torch.nn as nn

loss = nn.CrossEntropyLoss()
input = torch.randn(3, 5, requires_grad=True)
target = torch.empty(3, dtype=torch.long).random_(5)
output = loss(input, target)
print(output)
output.backward()
```

```
tensor(1.6685)
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-5-835ec6ff9004> in <module>()
      4 output = loss(input, target)
      5 print(output)
----> 6 output.backward()

1 frames
/usr/local/lib/python3.6/dist-packages/torch/autograd/__init__.py in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables)
     98     Variable._execution_engine.run_backward(
     99         tensors, grad_tensors, retain_graph, create_graph,
--> 100         allow_unreachable=True)  # allow_unreachable flag
    101
    102

RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn
```
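One clue is already in the printed loss: `tensor(1.6685)` has no `grad_fn=...` entry, which normally appears when autograd has recorded the operation. A minimal diagnostic sketch is to print the global grad mode and the loss tensor's autograd attributes before calling `backward()`. The helper name `check_backward_ready` is hypothetical, not part of PyTorch; `torch.is_grad_enabled()`, `requires_grad` and `grad_fn` are standard.

```python
import torch
import torch.nn as nn

def check_backward_ready(t: torch.Tensor) -> None:
    # Hypothetical helper: prints the autograd state relevant to the
    # "does not require grad and does not have a grad_fn" error.
    print("grad mode enabled:", torch.is_grad_enabled())
    print("requires_grad:", t.requires_grad)
    print("grad_fn:", t.grad_fn)

loss = nn.CrossEntropyLoss()
input = torch.randn(3, 5, requires_grad=True)
target = torch.empty(3, dtype=torch.long).random_(5)
output = loss(input, target)

check_backward_ready(output)
if output.requires_grad:
    output.backward()  # safe: a graph was recorded for this loss
else:
    print("output is not attached to a graph; backward() would raise")
```

If "grad mode enabled" prints `False`, something earlier in the session has switched autograd off.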

Sorry, no idea what might be going on.

Could you restart the kernel or are you running in a clean environment?

I would recommend creating a new virtual environment, reinstalling PyTorch, and rerunning the script.

I am running on Google Colab and have only installed Transformers, nothing else. Maybe you can try it on Google Colab; the same thing happens on Kaggle notebooks too.

Hey, sorry, I figured it out: my code contained the line `torch.set_grad_enabled(False)`. I had been struggling with this error in my custom loss function for a day :( and tried this example to see whether the same error came up.

Oh OK, so the gradient calculation was disabled globally. I’m glad you figured it out.
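A short sketch reproducing the issue and the fix. Calling `torch.set_grad_enabled(False)` as a plain function switches grad mode off for the rest of the thread, so the loss is computed without a graph and `backward()` raises the same `RuntimeError`. Re-enabling grad mode and recomputing the loss fixes it; when gradients are only unwanted for part of the code (e.g. evaluation), the scoped `torch.no_grad()` context manager avoids leaking the setting. The variable name `eval_loss` is just illustrative.

```python
import torch
import torch.nn as nn

loss = nn.CrossEntropyLoss()
input = torch.randn(3, 5, requires_grad=True)
target = torch.empty(3, dtype=torch.long).random_(5)

# Reproduce: disable grad mode globally, as the stray line in the original code did.
torch.set_grad_enabled(False)
output = loss(input, target)
print(output.requires_grad)  # False: no graph was recorded
try:
    output.backward()
except RuntimeError as err:
    print("RuntimeError:", err)

# Fix: re-enable grad mode, then recompute the loss so a graph is recorded.
torch.set_grad_enabled(True)
output = loss(input, target)
output.backward()
print(input.grad.shape)  # torch.Size([3, 5])

# Scoped alternative: grad mode is off only inside the with-block.
with torch.no_grad():
    eval_loss = loss(input, target)  # no graph recorded here
print(torch.is_grad_enabled())  # True again after the block exits
```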
