Hi,

After converting my program to mixed precision using amp, the forward time gets shorter but the backward time gets longer when I measure the running time with Python's time module.

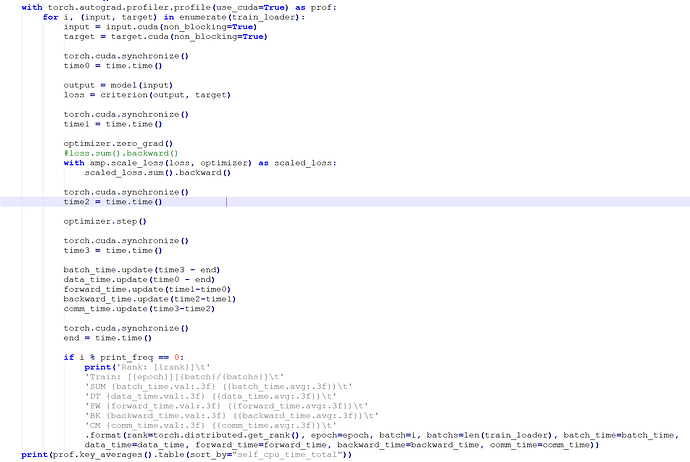
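A minimal sketch of per-phase wall-clock timing of this kind, assuming each phase is simply wrapped with `time.perf_counter()`. `PhaseTimer` and its optional `sync` hook are hypothetical names used for illustration, not taken from the actual training script, and the `time.sleep` calls are stand-ins for the real forward and backward passes.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class PhaseTimer:
    """Accumulates wall-clock seconds per named phase (e.g. "FW", "BK")."""

    def __init__(self, sync=None):
        # `sync` is an optional zero-argument callable run before each clock read.
        # Assumption for illustration: with PyTorch on a GPU one could pass
        # torch.cuda.synchronize here, so that asynchronously launched kernels
        # finish inside the phase that launched them.
        self.sync = sync
        self.totals = defaultdict(float)

    @contextmanager
    def phase(self, name):
        if self.sync is not None:
            self.sync()
        start = time.perf_counter()
        try:
            yield
        finally:
            if self.sync is not None:
                self.sync()
            self.totals[name] += time.perf_counter() - start


timer = PhaseTimer()      # no sync hook: plain host-side timing
with timer.phase("FW"):
    time.sleep(0.01)      # stand-in for the forward pass
with timer.phase("BK"):
    time.sleep(0.02)      # stand-in for the backward pass
print({name: round(seconds, 3) for name, seconds in timer.totals.items()})
```

Without a synchronization hook, host-side timers only measure how long the CPU spent in each phase, because CUDA kernels are launched asynchronously. GPU work queued in one phase can therefore be counted in a later phase that happens to block.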

I then used torch.profiler to record the running time.

The torch.profiler result, however, matches my expectation: the total CUDA time in mixed precision is about 30% of the FP32 value.

My question is: what does the CUDA time reported by the profiler mean, and why does the backpropagation time become longer in mixed precision?

Mixed precision:

time module:

Entire Epoch: [1] Train: [0] SUM: 1828.379 DT: 13.270 FW: 71.987 BK: 1711.248 CM: 31.873

torch profiler:

Self CPU time total: 56.883s

CUDA time total: 893.112s

FP32:

time module:

Entire Epoch: [3] Train: [0] SUM: 1584.260 DT: 15.714 FW: 368.550 BK: 1119.231 CM: 80.766

torch profiler:

Self CPU time total: 105.049s

CUDA time total: 3021.355s
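To make the comparison concrete, here is a small sketch that computes the mixed-precision to FP32 ratio for each figure reported above. The numbers are copied from the logs. The dictionary keys are just labels chosen for this example, and note that the two log lines come from different epochs ([1] and [3]).

```python
# Timings in seconds, copied from the logs above.
mixed = {
    "SUM": 1828.379, "DT": 13.270, "FW": 71.987, "BK": 1711.248, "CM": 31.873,
    "Self CPU total": 56.883, "CUDA total": 893.112,
}
fp32 = {
    "SUM": 1584.260, "DT": 15.714, "FW": 368.550, "BK": 1119.231, "CM": 80.766,
    "Self CPU total": 105.049, "CUDA total": 3021.355,
}

# Ratio < 1 means mixed precision is faster; > 1 means it is slower.
ratios = {name: mixed[name] / fp32[name] for name in mixed}

for name, ratio in ratios.items():
    print(f"{name:>14}: mixed / FP32 = {ratio:.2f}")
```

On these numbers the forward time is roughly 0.20x of FP32 and the profiler's CUDA total roughly 0.30x, while the backward time measured with the time module is roughly 1.53x.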

Detailed logs of my program are linked below:

http://49.234.107.127:81/index.php/s/qa3Yjo8WJwNZjCS (mixed precision)

http://49.234.107.127:81/index.php/s/y8SpyfiM3d5SZp7 (FP32)

My model uses conv3d layers, and I run my code on a Tesla V100.

Many thanks.