Heyhey

I’m training a simple `resnet18` model on two GPUs, with the model wrapped in `DataParallel`. I noticed that there was barely any speedup when switching from one to two GPUs, so I did some profiling:

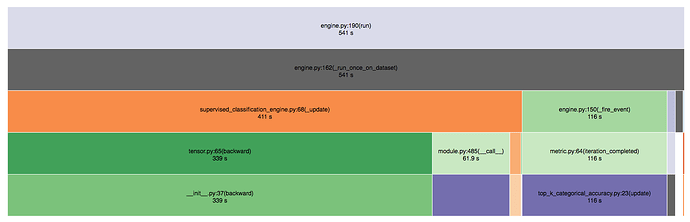

Looks like one culprit is the loss function; that’s a problem in Ignite, which will be fixed.

But the other thing is that the forward pass seems to take 60 seconds, while the backward pass takes 330 seconds. So the backward pass is 5.5x slower than the forward pass, almost as if it’s not run on two GPUs but only on one.

When running the same model on one GPU and profiling it, the backward pass is less than 3x slower than the forward pass (30 s forward vs. 81 s backward).
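One thing that may be worth ruling out (an assumption, not something the profile above confirms): CUDA kernels are launched asynchronously, so host-side timings can attribute GPU work to whichever later call happens to block. Below is a minimal sketch, assuming PyTorch, that times the forward and backward phases separately with explicit `torch.cuda.synchronize()` calls and a few warm-up steps. The small `nn.Sequential` model, batch size, and input shape are hypothetical stand-ins for the real `resnet18` setup; on a machine without GPUs, `nn.DataParallel` simply calls the wrapped module.

```python
import time

import torch
import torch.nn as nn


def timed_step(model, inputs, targets, loss_fn):
    """Return (forward_seconds, backward_seconds) for one training step.

    Synchronizing around each phase makes queued, asynchronous CUDA kernels
    count toward the phase that launched them.
    """
    def sync():
        if torch.cuda.is_available():
            torch.cuda.synchronize()

    sync()
    t0 = time.perf_counter()
    outputs = model(inputs)
    loss = loss_fn(outputs, targets)
    sync()
    t1 = time.perf_counter()
    loss.backward()
    sync()
    t2 = time.perf_counter()
    return t1 - t0, t2 - t1


# Hypothetical stand-in for resnet18 so the sketch runs without torchvision.
device = "cuda" if torch.cuda.is_available() else "cpu"
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),
).to(device)
# Replicates the module across all visible GPUs; a plain pass-through on CPU.
model = nn.DataParallel(model)
loss_fn = nn.CrossEntropyLoss()

inputs = torch.randn(32, 3, 32, 32, device=device)
targets = torch.randint(0, 10, (32,), device=device)

# The first iterations include one-off costs (allocation, cuDNN autotuning),
# so run a few steps and keep only the last measurement.
for _ in range(3):
    model.zero_grad()
    fwd, bwd = timed_step(model, inputs, targets, loss_fn)

print(f"forward {fwd:.4f}s, backward {bwd:.4f}s, ratio {bwd / fwd:.1f}x")
```

Running this once with `CUDA_VISIBLE_DEVICES=0` and once with both GPUs visible gives a like-for-like backward/forward ratio for the two setups.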

Anyone have any idea what’s going on?