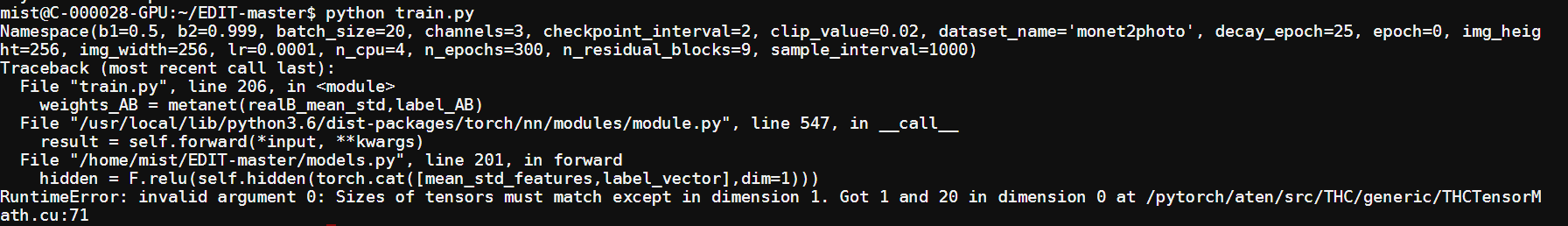

After I changed the batch size from 1 to 20, I got the errors shown above.

I suspect the cause is that the dataset size is not divisible by the batch size. How can I fix it?
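If that guess were right, only the last, smaller batch would fail. One quick way to test it is the `drop_last=True` option of PyTorch's `DataLoader`, which skips the incomplete final batch. The sketch below uses a hypothetical 45-sample `TensorDataset` (not the dataset from this project) to show the effect. If the error still appears with `drop_last=True`, or already on the very first batch, divisibility is probably not the cause.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical dataset of 45 samples: 45 is not divisible by 20,
# so the final batch holds only the 5 leftover samples.
dataset = TensorDataset(torch.arange(45, dtype=torch.float32))

sizes = [x.shape[0] for (x,) in DataLoader(dataset, batch_size=20)]
print(sizes)

# drop_last=True discards the incomplete final batch.
sizes_dropped = [
    x.shape[0] for (x,) in DataLoader(dataset, batch_size=20, drop_last=True)
]
print(sizes_dropped)
```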

Relevant code from train.py and models.py:

```python
# train.py
for epoch in range(opt.epoch, opt.n_epochs):
    for i, batch in enumerate(dataloader):
        # Set model input
        real_A = Variable(batch['A'].type(Tensor))
        real_B = Variable(batch['B'].type(Tensor))
        label_AB = Variable(batch['label_AB'].type(Tensor))
        label_BA = Variable(batch['label_BA'].type(Tensor))
        label = batch['label_name']

        real_A_features = vgg16(real_A)
        real_B_features = vgg16(real_B)
        realA_mean_std = mean_std(real_A_features)
        realB_mean_std = mean_std(real_B_features)

        weights_AB = metanet(realB_mean_std, label_AB)  # line 206 in train.py
        weights_BA = metanet(realA_mean_std, label_BA)
```

```python
# models.py (forward method of the meta network)
def forward(self, mean_std_features, label):
    #mean_std_features = mean_std_features.view(-1, mean_std_features.shape[0] * mean_std_features.shape[1])
    #label_vector = torch.mean(label, dim=2).view(1, -1)
    index0 = torch.cuda.FloatTensor([torch.mean(label[0][0])])
    index1 = torch.cuda.FloatTensor([torch.mean(label[0][1])])
    index2 = torch.cuda.FloatTensor([torch.mean(label[0][2])])
    index3 = torch.cuda.FloatTensor([torch.mean(label[0][3])])
    #index4 = torch.cuda.FloatTensor([torch.mean(label[0][4])])
    label_vector = torch.cat((index0, index1, index2, index3)).view(1, -1)
    hidden = F.relu(self.hidden(torch.cat([mean_std_features, label_vector], dim=1)))  # line 201 in models.py

    filters = {}
    for name, i in self.fc_dict.items():
        fc = getattr(self, 'fc{}'.format(i + 1))
        filters[name] = fc(hidden[:, i * 128:(i + 1) * 128])
    return filters
```
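One thing worth checking in `forward`: `label[0][k]` only reads sample 0 of the batch, so `label_vector` always has shape `(1, 4)`, while `mean_std_features` presumably has one row per sample. With a batch size of 1 the two agree; with 20 they do not, and `torch.cat(..., dim=1)` requires every dimension except dim 1 to match. The sketch below reproduces this with made-up shapes (hypothetical: 8 feature columns, labels of shape `(B, 4, 3)`) and shows a batch-aware alternative. `label_vector_batched` is a hypothetical helper, not part of the project; it averages each of the first 4 label channels per sample, which matches the original for sample 0.

```python
import torch

def label_vector_first_sample(label):
    # Mirrors the original forward(): only sample 0 is used -> shape (1, 4).
    return torch.stack([label[0][k].mean() for k in range(4)]).view(1, -1)

def label_vector_batched(label):
    # Hypothetical batch-aware version: for every sample b and each of the
    # first 4 label channels k, average all elements of label[b][k] -> (B, 4).
    first4 = label[:, :4]
    return first4.reshape(first4.size(0), 4, -1).mean(dim=2)

batch_size = 20
mean_std_features = torch.randn(batch_size, 8)  # hypothetical feature width
label = torch.rand(batch_size, 4, 3)            # hypothetical label shape

old = label_vector_first_sample(label)
new = label_vector_batched(label)

try:
    torch.cat([mean_std_features, old], dim=1)  # (20, 8) with (1, 4): fails
    old_ok = True
except RuntimeError:
    old_ok = False

combined = torch.cat([mean_std_features, new], dim=1)  # (20, 8) with (20, 4)
print(old.shape, new.shape, combined.shape)
```

Because the batched helper keeps the device and dtype of `label`, it also avoids rebuilding tensors through `torch.cuda.FloatTensor`. Note that `filters[name]` will then contain one row per sample; if the code that consumes these filters expects a single set of weights, it may need changes as well.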