I’m trying to predict a multi-label, multi-class output from a series of image features: panoramic images passed through ResNet-152, giving a tensor of shape BATCH_SIZE x NUM_IMAGES x 36 (NUM_VIEWS) x 2048 (FEATURE_SIZE). The output of the model has shape BATCH_SIZE x NUM_CLASSES (~3000). The classes are heavily imbalanced. Since one series of images may contain multiple objects, I used BCE loss (with logits).
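Given the heavy class imbalance, one common option with `nn.BCEWithLogitsLoss` is its `pos_weight` argument, which scales the loss on positive targets separately for each class. The sketch below uses a small, made-up multi-hot label matrix and sets each class's weight to its ratio of negatives to positives; that ratio is a common heuristic and an assumption here, not part of the original setup.

```python
import torch
import torch.nn as nn

# Hypothetical multi-hot label matrix: 4 samples x 5 classes (1 = object present).
labels = torch.tensor([
    [1, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [1, 0, 0, 0, 0],
    [0, 1, 0, 1, 0],
], dtype=torch.float32)

# Count positives and negatives per class over the whole label set.
num_pos = labels.sum(dim=0)
num_neg = labels.shape[0] - num_pos

# pos_weight = negatives / positives per class. The clamp avoids dividing by
# zero for classes that never occur (assumption: they just get weight num_neg).
pos_weight = num_neg / num_pos.clamp(min=1.0)

criterion = nn.BCEWithLogitsLoss(pos_weight=pos_weight)

# BCEWithLogitsLoss expects raw, un-sigmoided scores; all-zero logits here.
logits = torch.zeros_like(labels)
loss = criterion(logits, labels)
print(pos_weight)
print(loss.item())
```

Rare classes get large weights, so missing one of their few positives costs more than missing a frequent class. In practice the counts would come from the full training set rather than a single batch.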

CV is not my specialty, and the model I’ve been using (below) may be part of the problem. It assumes that a single image in the series is responsible for the output vector. That’s why I used Conv1D (I wasn’t sure how to process only one set of panoramic images at a time), and the per-image outputs (each of size NUM_CLASSES) are max-pooled together.

```python
import torch
import torch.nn as nn

class LandMarkPredictionModule(nn.Module):
    def __init__(self, num_classes, num_views=36, kernel_size=4):
        super(LandMarkPredictionModule, self).__init__()
        self.num_views = num_views
        self.kernel_size = kernel_size
        self.num_classes = num_classes
        # Views act as input channels; the convolution slides along the feature axis
        self.conv = torch.nn.Conv1d(in_channels=self.num_views, out_channels=1, kernel_size=self.kernel_size)  # figure out
        self.fc = nn.Linear(in_features=2045, out_features=self.num_classes)  # How to calculate this value automatically
        self.act_func = nn.Sigmoid()

    def forward(self, images):
        # images: (batch_size, length, num_views, feat_size)
        batch_size, length, num_views, feat_size = images.shape
        # Treat every image in the series as an independent sample
        reshaped_images = images.reshape(batch_size * length, num_views, feat_size)
        # -> (batch_size * length, feat_size - kernel_size + 1)
        convolved_images = self.conv(reshaped_images).squeeze(1)
        # Per-image class scores, passed through a sigmoid
        output = self.act_func(self.fc(convolved_images))
        output = output.reshape(batch_size, length, self.num_classes)
        # Max-pool over the images in each series -> (batch_size, num_classes)
        return output.max(dim=1)[0]
```
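The hard-coded `2045` in `self.fc` follows from the `nn.Conv1d` output-length formula: with stride 1, no padding, and no dilation, the output length is `2048 - kernel_size + 1 = 2045`. A minimal sketch of two ways to compute it instead, assuming the same default conv settings as the model above; `conv1d_out_len` is a hypothetical helper and `out_features=10` is a placeholder.

```python
import torch
import torch.nn as nn

def conv1d_out_len(length, kernel_size, stride=1, padding=0, dilation=1):
    # Output-length formula given in the nn.Conv1d documentation.
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

num_views, feat_size, kernel_size = 36, 2048, 4
conv = nn.Conv1d(in_channels=num_views, out_channels=1, kernel_size=kernel_size)

# Option 1: closed-form formula.
in_features = conv1d_out_len(feat_size, kernel_size)

# Option 2: push a dummy tensor through the conv and read off the last dimension.
with torch.no_grad():
    dummy = torch.zeros(1, num_views, feat_size)
    in_features_probe = conv(dummy).shape[-1]

fc = nn.Linear(in_features=in_features, out_features=10)
print(in_features, in_features_probe)  # 2045 2045
```

The dummy-pass approach keeps working if padding, stride, or extra layers are added later. Recent PyTorch versions also provide `nn.LazyLinear`, which infers `in_features` on the first forward pass.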

The loss stalls at ~0.69, even when I try to overfit on just 1 data point. Below is the training code and the data I used.

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

pred_module = LandMarkPredictionModule(len(landmark_classes))
criterion = nn.BCEWithLogitsLoss()
optimizer = optim.Adam(pred_module.parameters(), lr=1e-3)

# USE BCE LOSS
# Manage class imbalances

NUM_EPOCHS = 100

# Sanity check: try to overfit on two samples (entries 0 and 3)
tst_images = torch.from_numpy(np.stack((image_class_pairs[0][0], image_class_pairs[3][0]), axis=0))
tst_labels = torch.from_numpy(np.stack((image_class_pairs[0][1], image_class_pairs[3][1]), axis=0))

for epoch in range(NUM_EPOCHS):
    optimizer.zero_grad()
    logits = pred_module(tst_images)
    loss = criterion(logits, tst_labels)
    loss.backward()
    print(loss.item())
    optimizer.step()
```

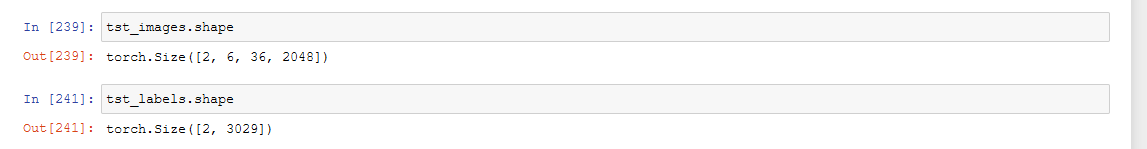

The sizes of the inputs and outputs above are:

I’m still experimenting with the model, but beyond the architecture, what else could cause the loss to get stuck (e.g. the data, or a bug in the code)?
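One thing worth checking: `forward` already applies `nn.Sigmoid`, and `nn.BCEWithLogitsLoss` applies a sigmoid again internally. The loss then only ever sees inputs in [0, 1], so for every negative class the per-element loss is at least log(2) ≈ 0.693, and with ~3000 mostly negative classes the mean cannot drop much below that. The sketch below uses a hypothetical 3000-class target with three positives to compare the best achievable loss on sigmoid outputs with the loss on raw logits.

```python
import torch
import torch.nn as nn

criterion = nn.BCEWithLogitsLoss()

# Hypothetical target: 3000 classes, only the first 3 are positive.
num_classes = 3000
target = torch.zeros(1, num_classes)
target[0, :3] = 1.0

# Best case for an already-sigmoided output: exactly 1 for positives, 0 for negatives.
# BCEWithLogitsLoss squashes these again, so negatives still cost log(2) each.
probs = target.clone()
loss_double_sigmoid = criterion(probs, target)

# The same "perfect" prediction expressed as large raw logits instead.
logits = torch.where(target > 0, torch.tensor(20.0), torch.tensor(-20.0))
loss_logits = criterion(logits, target)

print(loss_double_sigmoid.item())  # stays near 0.693
print(loss_logits.item())          # close to 0
```

If this is the cause, either removing `self.act_func` from `forward` (so raw logits reach `BCEWithLogitsLoss`) or keeping it and switching to `nn.BCELoss` should let the loss fall below 0.69; the first option is usually preferred for numerical stability. Because the sigmoid is monotonic, max-pooling over images on logits picks the same image as max-pooling on probabilities.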