import torch

import torch.nn as nn

import helpers.dataset as dataset

from torch.autograd import Variable

input_size = 128

hidden_size = 128

num_layers = 2

output_size = 128

batch_size = 1

num_epochs = 2

learning_rate = 0.01

# Dataset

train_dataset = dataset.pianoroll_dataset_batch('./datasets/training/piano_roll_fs1') # pianoroll_dataset_batch instance

# Data loader

train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=False, drop_last=True)

class RNN(nn.Module):

def __init__(self, input_size, hidden_size, num_classes=128, n_layers=num_layers):

super(RNN, self).__init__()

self.input_size = input_size

self.hidden_size = hidden_size

self.num_classes = num_classes

self.n_layers = n_layers

self.rnn = nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=n_layers, batch_first=False)

def forward(self, input_sequence, hidden):

# Output shape=(seq_length, batch_size, hidden_size)

output, hidden = self.rnn(input_sequence, hidden)

# output = output.reshape(-1, self.num_classes) #Predict which classes should be pressed

return output, hidden

model = RNN(input_size, hidden_size, num_layers)

# Loss and optimizer

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# Init hidden state with shape=(num_layers*num_directions, batch_size, hidden_size)

hidden = Variable(torch.Tensor(num_layers*1, batch_size, hidden_size))

for i, (features, _, targets) in enumerate(train_loader):

# Seconds in each input stream

seq_len = features.size(1)

# The input dimensions are (seq_len, batch, input_size)

features = Variable(features.reshape(seq_len, -1, input_size))

targets = Variable(targets.reshape(seq_len, hidden_size))

# Forward pass

outputs, hidden = model(features, hidden)

loss = criterion(outputs, targets)

# Backward and optimize

optimizer.zero_grad()

loss.backward()

optimizer.step()

print('i: {}, Loss: {:.4f}'.format(i+1, loss.item()))

if i % 10 == 0:

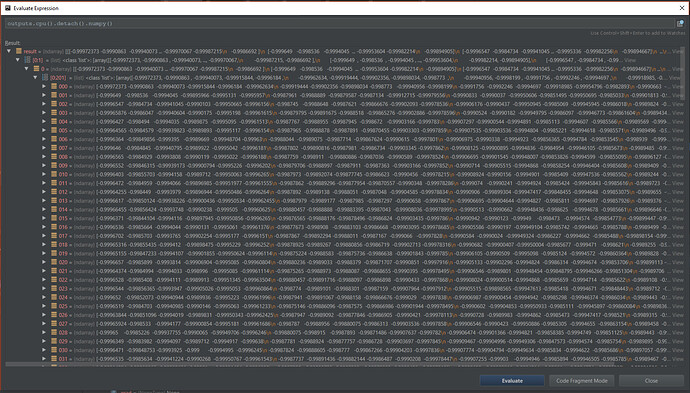

print(outputs)
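The shape comments in the script (`(seq_len, batch, input_size)` for the input and `(num_layers*num_directions, batch_size, hidden_size)` for the hidden state) match the `nn.RNN` convention when `batch_first=False`. Below is a minimal sketch that checks those shapes on random data. The sequence length of 10 is an arbitrary assumption, not something taken from the dataset. It also initialises the hidden state with `torch.zeros`, because `torch.Tensor(*sizes)` returns uninitialised memory. It leaves out `Variable`, which has been merged into `Tensor` since PyTorch 0.4:

```python
import torch
import torch.nn as nn

# Same sizes as the script; seq_len is an arbitrary example value.
input_size, hidden_size, num_layers = 128, 128, 2
seq_len, batch_size = 10, 1

rnn = nn.RNN(input_size=input_size, hidden_size=hidden_size,
             num_layers=num_layers, batch_first=False)

# With batch_first=False the input is (seq_len, batch, input_size).
x = torch.rand(seq_len, batch_size, input_size)

# Hidden state: (num_layers * num_directions, batch, hidden_size), zero-filled
# instead of the uninitialised memory returned by torch.Tensor(*sizes).
h0 = torch.zeros(num_layers * 1, batch_size, hidden_size)

output, h_n = rnn(x, h0)
print(output.shape)  # torch.Size([10, 1, 128]) -> (seq_len, batch, hidden_size)
print(h_n.shape)     # torch.Size([2, 1, 128])  -> (num_layers, batch, hidden_size)
```

The last dimension of `output` is `hidden_size`, not the number of keys. Anything compared against 128-key targets therefore only lines up when `hidden_size` happens to be 128. If `hidden` is carried from one loop iteration to the next, as in the script, it is usually detached first (`hidden = hidden.detach()`). Otherwise the second `loss.backward()` tries to backpropagate into the previous iteration's graph.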

I’m trying to train a model that learns how to play piano from MIDI files (loaded by a helper class that inherits from torch’s `Dataset`). I’ve read countless posts trying to debug my errors, but I’m left with even more questions.

- Do I need to reshape the targets?

- I keep getting this error (in the for loop, under “Forward pass”):

`<class 'tuple'>: (<class 'RuntimeError'>, RuntimeError("Expected object of type torch.LongTensor but found type torch.FloatTensor for argument #2 'target'",), None)`

I’ve tried debugging, and inspecting `outputs` and `targets` shows that both have the same type (`torch.float32`). Converting `targets` with `.long()` just produces another error:

`` <class 'tuple'>: (<class 'RuntimeError'>, RuntimeError("Assertion `cur_target >= 0 && cur_target < n_classes' failed. at c:\programdata\miniconda3\conda-bld\pytorch_1533096106539\work\aten\src\thnn\generic/SpatialClassNLLCriterion.c:110",), None) ``
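Both errors are consistent with the documented contract of `nn.CrossEntropyLoss`. It takes raw scores of shape `(N, C)` (or `(N, C, d1, ...)`) and one integer class index per sample, of dtype `torch.long` and in the range `[0, C)`. For a 3-D input, dimension 1 is treated as the class dimension. An RNN output of shape `(seq_len, 1, 128)` therefore looks like a single class (`C = 1`), and a 0/1 target containing the value 1 is out of range. Below is a minimal sketch that reproduces this with random tensors standing in for the piano-roll data. The key indices 60, 64, 67 are arbitrary examples. The exact exception type and message depend on the PyTorch version. In recent versions, float targets are also interpreted as class probabilities rather than rejected, so the first error may read differently:

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
num_frames, num_keys = 4, 128

# Intended use: scores (N, C) and ONE class index per sample, dtype long.
logits = torch.randn(num_frames, num_keys)
target = torch.tensor([60, 64, 67, 60])  # int64 key indices (arbitrary)
loss = criterion(logits, target)
print(loss.dim())  # 0, i.e. a scalar loss

# Reproducing the assertion: a 3-D input (N, C, d) uses dim 1 as classes,
# so an RNN-shaped output (seq_len, batch=1, 128) means C == 1.
rnn_like_output = torch.randn(num_frames, 1, num_keys)
multi_hot = torch.randint(0, 2, (num_frames, num_keys))  # 0/1 "pressed" keys
multi_hot[0, 0] = 1  # guarantee at least one target equal to 1 (>= C)

try:
    criterion(rnn_like_output, multi_hot)
    failed = False
except (RuntimeError, IndexError):  # exception type varies between versions
    failed = True
print(failed)  # True: target value 1 is out of range for C == 1
```

`CrossEntropyLoss` models exactly one correct class per sample. A frame where several keys are pressed at once does not fit that contract, whatever the dtype of the target.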

- The dataset I’m using has a piano with 128 keys (so 128 classes). Where do I define the output layer?

- When I set `hidden_size` to anything other than 128 (the same as `input_size`), I get an error saying the dimensions aren’t right. What am I missing here?

- The piano-roll matrix that is fed in has binary values. How do I get the same format from the final layer (instead of a bunch of floats) while keeping the multi-label property (multiple keys can be pressed at the same time)? Is calling `outputs.long()` on the last layer the right way to do it, or should I use a different loss function that has this built in?
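A common pattern for the last three questions is an explicit `nn.Linear(hidden_size, 128)` output layer after the RNN, combined with `nn.BCEWithLogitsLoss`, which applies an independent sigmoid per key. In the original class, `num_classes` is stored but never used, and `RNN(input_size, hidden_size, num_layers)` actually binds 2 to `num_classes` positionally. Below is a minimal sketch under these assumptions. `PianoRNN` and `init_hidden` are hypothetical names, `hidden_size=64` is an arbitrary choice to show it no longer has to be 128, and random 0/1 tensors stand in for the loader's `features` and `targets`. The targets stay float with the same shape as the logits, so no reshape to `hidden_size` is needed. Thresholding happens only at inference, because casting with `.long()` produces an integer tensor that carries no gradient:

```python
import torch
import torch.nn as nn

class PianoRNN(nn.Module):
    """Hypothetical variant of the RNN above with an explicit output layer."""

    def __init__(self, input_size=128, hidden_size=64, num_keys=128, num_layers=2):
        super().__init__()
        self.hidden_size = hidden_size
        self.num_layers = num_layers
        self.rnn = nn.RNN(input_size, hidden_size, num_layers=num_layers)
        # Output layer: one score (logit) per key for every time step, so
        # hidden_size is independent of the number of keys.
        self.fc = nn.Linear(hidden_size, num_keys)

    def init_hidden(self, batch_size):
        return torch.zeros(self.num_layers, batch_size, self.hidden_size)

    def forward(self, x, hidden):
        out, hidden = self.rnn(x, hidden)  # (seq_len, batch, hidden_size)
        return self.fc(out), hidden        # (seq_len, batch, num_keys)

torch.manual_seed(0)
seq_len, batch_size = 16, 1
model = PianoRNN()
criterion = nn.BCEWithLogitsLoss()  # sigmoid + binary cross-entropy per key
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# Random binary piano rolls standing in for the dataset (float 0.0 / 1.0).
features = (torch.rand(seq_len, batch_size, 128) > 0.9).float()
targets = (torch.rand(seq_len, batch_size, 128) > 0.9).float()

hidden = model.init_hidden(batch_size)
logits, hidden = model(features, hidden)
loss = criterion(logits, targets)  # same shape, float targets
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Binary key presses for inference: sigmoid, then threshold at 0.5.
pressed = (torch.sigmoid(logits) > 0.5).long()
print(logits.shape, pressed.shape)
```

Because each key gets its own sigmoid, any number of keys can be "on" in the same frame. The 0.5 threshold is only a default and can be tuned on held-out data.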

I apologize for the beginner questions, but I’m new to this, and watching tutorials and reading countless posts has only helped me so much. I’d appreciate it if anyone could take the time to explain this so I can fill in the gaps.