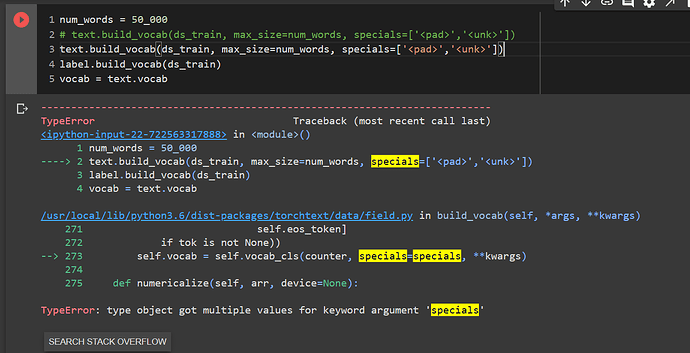

```python
text.build_vocab(ds_train, max_size=num_words, specials=['', ''])
```
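The `specials` argument reserves tokens that never come from the corpus itself. Below is a minimal pure-Python sketch of that idea. It assumes special tokens are placed at the front of the vocabulary, followed by the most frequent corpus words. `build_vocab_sketch` and the token strings `'<unk>'`, `'<pad>'`, `'<mask>'` are hypothetical illustrations, not torchtext's implementation, so check how your torchtext version orders specials.

```python
from collections import Counter

def build_vocab_sketch(sentences, max_size, specials=("<unk>", "<pad>", "<mask>")):
    """Hypothetical helper: map tokens to ids, reserving the first ids for specials."""
    itos = list(specials)  # special tokens get ids 0 .. len(specials) - 1
    counts = Counter(tok for sent in sentences for tok in sent)
    # Most frequent corpus words fill the remaining slots (ties broken alphabetically);
    # max_size here counts corpus words only, not the specials.
    for tok, _ in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        if len(itos) - len(specials) >= max_size:
            break
        if tok not in itos:
            itos.append(tok)
    stoi = {tok: i for i, tok in enumerate(itos)}
    return itos, stoi

sentences = [["the", "movie", "was", "great"], ["the", "plot", "was", "thin"]]
itos, stoi = build_vocab_sketch(sentences, max_size=4)
print(itos)  # ['<unk>', '<pad>', '<mask>', 'the', 'was', 'great', 'movie']
```

Reserving a `'<mask>'` entry this way gives it a fixed id (here `stoi['<mask>'] == 2`) that a masking step can substitute into the input.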

Full code: NYU-DLSP20/15-transformer.ipynb in the Atcold/NYU-DLSP20 repository on GitHub (master branch)

PyTorch version: 1.6.0+cu101

If you don't mind, I'd like to ask how to add a mask token to this. I've seen some transformer models that mask some of the words in a sentence and then fill them in later.
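One common recipe for this is the masked-language-modelling scheme popularized by BERT. About 15% of the positions are picked for prediction. Of those, 80% are replaced by the `<mask>` id, 10% by a random token, and 10% are left unchanged, and the model is trained to recover the original token only at the picked positions. Below is a minimal pure-Python sketch. It assumes each sentence is already a list of integer ids and that `mask_id`, `pad_id`, and `vocab_size` come from your vocabulary (for example, a `'<mask>'` token added through `specials`). The function name `mask_tokens` is hypothetical. Using `-100` as the "ignore" label is an illustrative choice that matches the default `ignore_index` of PyTorch's `nn.CrossEntropyLoss`.

```python
import random

def mask_tokens(ids, mask_id, vocab_size, pad_id=None, mask_prob=0.15, rng=None):
    """Hypothetical BERT-style masking: returns (corrupted inputs, labels).

    labels[i] holds the original id at positions chosen for prediction and -100
    elsewhere, so a loss with ignore_index=-100 only scores the chosen positions.
    """
    rng = rng or random.Random()
    inputs, labels = list(ids), [-100] * len(ids)
    for i, tok in enumerate(ids):
        if tok == pad_id or rng.random() >= mask_prob:
            continue  # skip padding and positions not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token id
        # remaining 10%: keep the original token unchanged
    return inputs, labels

# Example: ids 3..6 are words, 1 is padding, 2 is the mask token.
ids = [3, 4, 5, 6, 1, 1]
inputs, labels = mask_tokens(ids, mask_id=2, vocab_size=7, pad_id=1,
                             mask_prob=0.5, rng=random.Random(0))
print(inputs, labels)
```

The model is then fed `inputs`, and its per-position vocabulary logits are compared with `labels`. Positions holding `-100` are skipped by the loss. In this sketch the random replacement can also land on a special id, so a fuller version might sample only from ordinary word ids.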