Problem

I am trying to understand how RNNs, GRUs, and LSTMs work. I saw an LSTM implementation that uses only NumPy here, but going over all of its details is too time-consuming, since for now I just want to understand the algorithmic workflow of these modules (not how the gradients are computed, etc.). So I would like to build them from PyTorch building blocks such as nn.Linear().

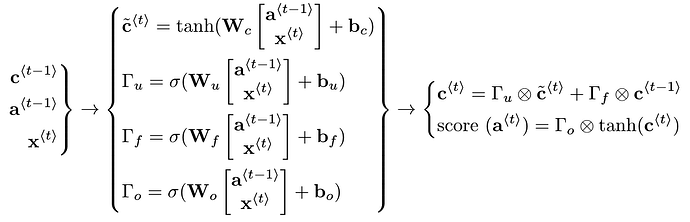

This is an LSTM diagram, which looks simple.
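
For reference, diagrams of this kind usually correspond to the standard LSTM update written out below. This is the common textbook formulation, not something read off the image, so the symbol names ($W$, $U$, $b$ for the input weights, recurrent weights, and biases) are assumptions and the diagram's notation may differ.

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Here $x_t$ is the input at time step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication.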

However, as easy as it might sound, I am not sure about the following:

- How should I carry the cell state and hidden state across time steps?

- Will models constructed this way backpropagate correctly?

- Is there a standardized dataset I could use to check that my implementation is correct?
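
To make the three points above concrete, here is a minimal sketch of the kind of module I have in mind, assuming the standard LSTM equations. The names `NaiveLSTMCell` and `run_sequence` and all sizes are hypothetical. The states are carried by reassigning `h` and `c` in an ordinary Python loop. Instead of a dataset, the check below copies the weights into PyTorch's built-in `nn.LSTMCell` and compares outputs, which is a different kind of test from the one asked about.

```python
import torch
import torch.nn as nn


class NaiveLSTMCell(nn.Module):
    """One LSTM time step built from nn.Linear (hypothetical name)."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.hidden_size = hidden_size
        # Each Linear produces all four gates at once; they are split in forward().
        self.x2h = nn.Linear(input_size, 4 * hidden_size)
        self.h2h = nn.Linear(hidden_size, 4 * hidden_size)

    def forward(self, x_t, state):
        h_prev, c_prev = state
        gates = self.x2h(x_t) + self.h2h(h_prev)
        # Gate order (input, forget, cell candidate, output) matches nn.LSTMCell.
        i, f, g, o = gates.chunk(4, dim=-1)
        i, f, o = torch.sigmoid(i), torch.sigmoid(f), torch.sigmoid(o)
        g = torch.tanh(g)
        c_t = f * c_prev + i * g
        h_t = o * torch.tanh(c_t)
        return h_t, c_t


def run_sequence(cell, x):
    """Apply the cell over x of shape (seq_len, batch, input_size)."""
    batch = x.size(1)
    h = x.new_zeros(batch, cell.hidden_size)
    c = x.new_zeros(batch, cell.hidden_size)
    outputs = []
    for x_t in x:
        # Carrying the state is just feeding the previous (h, c) back in.
        h, c = cell(x_t, (h, c))
        outputs.append(h)
    return torch.stack(outputs), (h, c)


torch.manual_seed(0)
cell = NaiveLSTMCell(input_size=3, hidden_size=5)

# Reference cell with identical weights, used only as a correctness check.
reference = nn.LSTMCell(3, 5)
with torch.no_grad():
    reference.weight_ih.copy_(cell.x2h.weight)
    reference.weight_hh.copy_(cell.h2h.weight)
    reference.bias_ih.copy_(cell.x2h.bias)
    reference.bias_hh.copy_(cell.h2h.bias)

x = torch.randn(7, 2, 3)  # (seq_len, batch, input_size)
out, (h_n, c_n) = run_sequence(cell, x)

h_ref, c_ref = torch.zeros(2, 5), torch.zeros(2, 5)
for x_t in x:
    h_ref, c_ref = reference(x_t, (h_ref, c_ref))

matches = torch.allclose(h_n, h_ref, atol=1e-5) and torch.allclose(c_n, c_ref, atol=1e-5)

# Autograd tracks the loop, so backward() reaches every parameter.
out.sum().backward()
has_grads = all(p.grad is not None for p in cell.parameters())
```

In this sketch nothing is detached between steps, so gradients flow back through the whole sequence; whether that is the behaviour I want for long sequences is a separate question.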

Could anyone help me? Thank you in advance!