I am having a problem with the following PyTorch code:

```python
import numpy as np
import torch
import torch.nn as nn
import torch.utils.data as utils

# hidden features
n_hidden = 256

# input activation factor
n_fac = 42

# batch size
bs = 512

class Model007(nn.Module):
    def __init__(self, vocab_size, n_fac):
        super().__init__()
        self.l1 = nn.Embedding(vocab_size, n_fac)
        self.l2 = nn.Linear(n_fac, n_hidden)

    def forward(self, c1, c2, c3):
        print("c1", c1)
        print("c2", c2)
        print("c3", c3)
        in1 = torch.relu(self.l2(self.l1(c1)))
        in2 = torch.relu(self.l2(self.l1(c2)))
        in3 = torch.relu(self.l2(self.l1(c3)))
        return in1 + in2 + in3

x1 = np.array([13,3,28,24,33,2,3,62,47,58])
x2 = np.array([15,21,30,27,17,3,3,54,54,44])
x3 = np.array([32,2,27,19,2,3,32,3,60,47])
x = np.stack([x1,x2,x3], axis=1)
y = np.array([3,28,24,33,2,3,62,47,58,54])

# converting to tensor
X = torch.from_numpy(x).cuda()
Y = torch.from_numpy(y).cuda()
print(X)
print(Y)
print("...")

ds = utils.TensorDataset(X, Y)
dl = utils.DataLoader(ds, batch_size=2, shuffle=False)
print(ds.tensors)
```

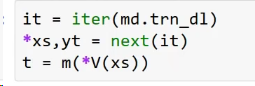
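Because `X` is built with `np.stack(..., axis=1)`, it has shape `(10, 3)`, and a `DataLoader` over a `TensorDataset` appears to hand back each mini-batch as a single `(batch_size, 3)` tensor rather than three separate tensors. A small CPU-only sketch of that batching, reusing the same index arrays (the `.cuda()` calls are dropped here only so it runs without a GPU):

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

x1 = np.array([13, 3, 28, 24, 33, 2, 3, 62, 47, 58])
x2 = np.array([15, 21, 30, 27, 17, 3, 3, 54, 54, 44])
x3 = np.array([32, 2, 27, 19, 2, 3, 32, 3, 60, 47])
y = np.array([3, 28, 24, 33, 2, 3, 62, 47, 58, 54])

# axis=1 puts each sample's three character indices in one row: shape (10, 3)
X = torch.from_numpy(np.stack([x1, x2, x3], axis=1))
Y = torch.from_numpy(y)

dl = DataLoader(TensorDataset(X, Y), batch_size=2, shuffle=False)
mb, yt = next(iter(dl))

# The whole mini-batch arrives as ONE tensor: rows are samples, columns are c1, c2, c3
print(mb.shape)  # torch.Size([2, 3])
print(yt.shape)  # torch.Size([2])
```

So the first `mb` holds two samples, and its three columns are what `forward` expects as `c1`, `c2` and `c3`.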

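```python
it = iter(dl)

# mb is the mini-batch, yt is the target
mb, yt = next(it)
print(mb)

# vocab_size is defined elsewhere (not shown); it must exceed the largest index (62)
m = Model007(vocab_size, n_fac).cuda()
y_hat = m(mb)
print(y_hat)
```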

The error I get is:

```
TypeError: forward() missing 2 required positional arguments: 'c2' and 'c3'
```
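`m(mb)` passes that single batch tensor as `c1`, so `forward` never receives `c2` and `c3`, which is consistent with the `TypeError` above. One possible way around it, sketched on CPU, is to slice the batch into its three columns before calling the model. `vocab_size = 63` is an assumption (the smallest size that covers index 62); the post does not show the real value, and the debug `print` calls are left out of `forward` for brevity.

```python
import torch
import torch.nn as nn

n_hidden = 256
n_fac = 42
vocab_size = 63  # assumption: smallest vocabulary covering the largest index (62)

class Model007(nn.Module):
    def __init__(self, vocab_size, n_fac):
        super().__init__()
        self.l1 = nn.Embedding(vocab_size, n_fac)
        self.l2 = nn.Linear(n_fac, n_hidden)

    def forward(self, c1, c2, c3):
        in1 = torch.relu(self.l2(self.l1(c1)))
        in2 = torch.relu(self.l2(self.l1(c2)))
        in3 = torch.relu(self.l2(self.l1(c3)))
        return in1 + in2 + in3

m = Model007(vocab_size, n_fac)
mb = torch.tensor([[13, 15, 32], [3, 21, 2]])  # first batch yielded by the DataLoader

# Calling with the single batch tensor binds it to c1 only and reproduces the error
try:
    m(mb)
    raised = False
except TypeError:
    raised = True

# Passing each column separately supplies c1, c2 and c3 (each of shape (2,))
y_hat = m(mb[:, 0], mb[:, 1], mb[:, 2])
# Equivalent shorthand: unpack the rows of the transpose
y_hat_alt = m(*mb.T)
print(y_hat.shape)  # torch.Size([2, 256])
```

Slicing at the call site keeps `forward` unchanged; an alternative design would be to let `forward` take one `(batch, 3)` tensor and index its columns internally, so that `m(mb)` works directly.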