When I fine-tune VGG on the Stanford Dog Breed Dataset, I found that different values of `batch_size` produce very different loss curves.

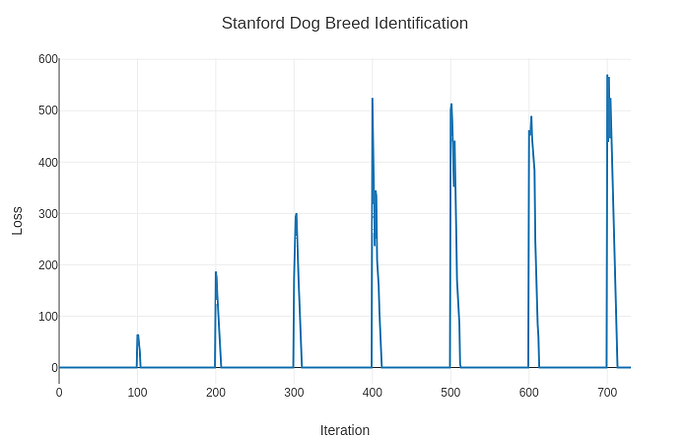

For example, when I set `batch_size = 1`, the loss plot looks like this:

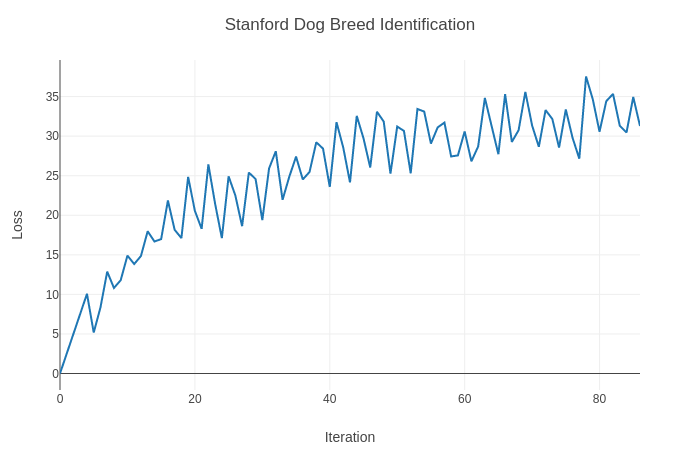

But when I set `batch_size = 32`, the loss plot looks normal, like this:

What is the reason for this phenomenon?
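One factor that may contribute (a hypothesis, not confirmed for this setup): the loss logged at each step is typically the mean over the minibatch, so with `batch_size = 1` every plotted point is the loss of a single image, while with `batch_size = 32` it is an average of 32 losses. Averaging B independent values shrinks the standard deviation by roughly a factor of sqrt(B). The sketch below illustrates only this sampling effect at fixed weights. It assumes per-sample losses follow a hypothetical exponential distribution and does not model VGG or the training dynamics; `simulated_step_losses` is a made-up helper for illustration.

```python
import random
import statistics


def simulated_step_losses(batch_size, num_steps, seed=0):
    """Return one logged loss per step: the mean of batch_size per-sample losses.

    Assumption: per-sample losses are drawn from a hypothetical exponential
    distribution with mean 1.0; real per-image losses will look different.
    """
    rng = random.Random(seed)
    losses = []
    for _ in range(num_steps):
        batch = [rng.expovariate(1.0) for _ in range(batch_size)]
        # Log the minibatch mean, as most training loops do.
        losses.append(sum(batch) / batch_size)
    return losses


if __name__ == "__main__":
    for bs in (1, 32):
        step_losses = simulated_step_losses(bs, num_steps=2000)
        print(f"batch_size={bs:2d}  "
              f"mean={statistics.mean(step_losses):.3f}  "
              f"std={statistics.stdev(step_losses):.3f}")
```

Both runs should have about the same mean, but the per-step spread for `batch_size = 1` should be several times larger. If this is the main cause, plotting a moving average of the per-step loss would make the two curves easier to compare.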