While implementing a segmentation paper, I ran into unexpected behavior when changing the parameters of Conv2d layers.

First I tried changing the settings of the existing Conv2d layers by assigning to their attributes directly (dilation, padding, padding_mode), but this did not work and gave a strange result.

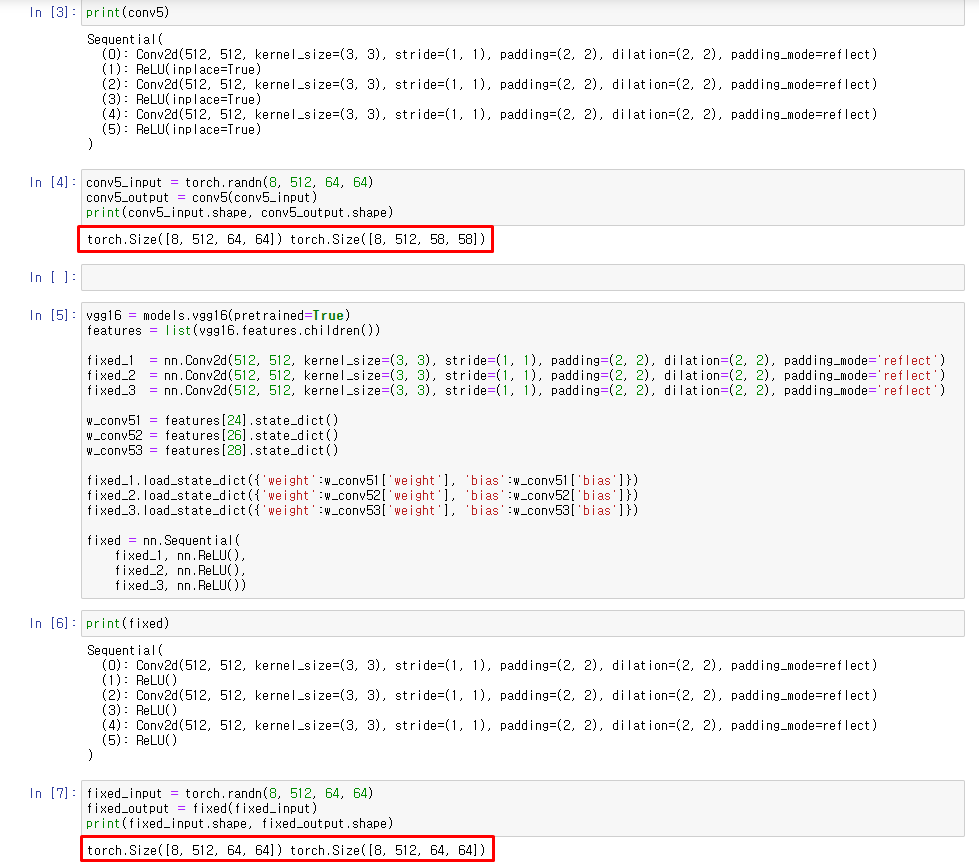

Code

    import torch
    import torch.nn as nn
    import torchvision.models as models

    vgg16 = models.vgg16(pretrained=True)
    features = list(vgg16.features.children())
    conv5 = nn.Sequential(*features[24:30])

    for i in range(len(conv5)):
        if isinstance(conv5[i], nn.Conv2d):
            conv5[i].dilation = (2, 2)
            conv5[i].padding = (2, 2)
            conv5[i].padding_mode = 'reflect'

    conv5_input = torch.randn(8, 512, 64, 64)
    conv5_output = conv5(conv5_input)
    print(conv5_input.shape, conv5_output.shape)

Result

torch.Size([8, 512, 64, 64]) torch.Size([8, 512, 58, 58])
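
As a sanity check on these numbers, the standard Conv2d output-size formula can be applied by hand. The sketch below assumes 3x3 kernels and stride 1, as in VGG16's conv5 block; the helper names `conv_out_size` and `after_three_convs` are made up for illustration. A spatial size of 58 is what three stacked convolutions give with padding 1 (the original VGG16 value) and dilation 2, which suggests, but does not prove, that the new padding value was not the one used in the forward pass.

```python
def conv_out_size(size, kernel=3, stride=1, padding=0, dilation=1):
    """Spatial output size of one convolution (standard Conv2d formula)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def after_three_convs(size, padding, dilation):
    """Apply the formula three times; ReLU layers do not change the size."""
    for _ in range(3):
        size = conv_out_size(size, padding=padding, dilation=dilation)
    return size


# Padding 1 with dilation 2: the size shrinks by 2 per layer.
print(after_three_convs(64, padding=1, dilation=2))  # 58

# Padding 2 with dilation 2: the size is preserved.
print(after_three_convs(64, padding=2, dilation=2))  # 64
```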

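After a lot of searching, I finally solved it by creating new Conv2d layers with the desired settings and loading the original weights into them through state_dict. This worked.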

Code

    import torch
    import torch.nn as nn
    import torchvision.models as models

    vgg16 = models.vgg16(pretrained=True)
    features = list(vgg16.features.children())

    fixed_1 = nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), padding_mode='reflect')
    fixed_2 = nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), padding_mode='reflect')
    fixed_3 = nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), padding_mode='reflect')

    w_conv51 = features[24].state_dict()
    w_conv52 = features[26].state_dict()
    w_conv53 = features[28].state_dict()

    fixed_1.load_state_dict({'weight': w_conv51['weight'], 'bias': w_conv51['bias']})
    fixed_2.load_state_dict({'weight': w_conv52['weight'], 'bias': w_conv52['bias']})
    fixed_3.load_state_dict({'weight': w_conv53['weight'], 'bias': w_conv53['bias']})

    fixed = nn.Sequential(
        fixed_1, nn.ReLU(),
        fixed_2, nn.ReLU(),
        fixed_3, nn.ReLU())

    fixed_input = torch.randn(8, 512, 64, 64)
    fixed_output = fixed(fixed_input)
    print(fixed_input.shape, fixed_output.shape)

Result

torch.Size([8, 512, 64, 64]) torch.Size([8, 512, 64, 64])
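
The same state_dict approach can be written once and reused for every layer. The following is only a minimal sketch: `rebuild_conv` is a hypothetical helper, not a PyTorch API, and the small randomly initialized `stand_in` block replaces the pretrained VGG16 layers so the example runs without downloading weights. It assumes the overrides do not change the weight shape (channels, kernel size, and groups stay the same).

```python
import torch
import torch.nn as nn


def rebuild_conv(old, **overrides):
    """Hypothetical helper: create a new nn.Conv2d that copies old's weights
    but takes selected constructor arguments from overrides."""
    kwargs = dict(
        in_channels=old.in_channels,
        out_channels=old.out_channels,
        kernel_size=old.kernel_size,
        stride=old.stride,
        padding=old.padding,
        dilation=old.dilation,
        groups=old.groups,
        bias=old.bias is not None,
        padding_mode=old.padding_mode,
    )
    kwargs.update(overrides)
    new = nn.Conv2d(**kwargs)
    # Constructing a fresh layer lets __init__ set up everything consistently;
    # the weights are then copied over from the old layer.
    new.load_state_dict(old.state_dict())
    return new


# Stand-in for the conv5 block (8 channels instead of 512, random weights).
stand_in = nn.Sequential(
    nn.Conv2d(8, 8, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3, padding=1), nn.ReLU(),
)

# Replace every Conv2d with a rebuilt copy; other layers are reused as-is.
block = nn.Sequential(*[
    rebuild_conv(m, padding=2, dilation=2, padding_mode='reflect')
    if isinstance(m, nn.Conv2d) else m
    for m in stand_in
])

x = torch.randn(2, 8, 64, 64)
y = block(x)
print(x.shape, y.shape)
```

With the pretrained model, the stand-in block would be replaced by the conv5 slice of vgg16.features used in the code above.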

I have two questions about this example. (I searched, but could not find what I was really looking for.)

- Why did the first attempt (changing the attributes of the existing Conv2d layers in place, with the weights preserved) not work and give a strange result? (The two networks look identical when I print them.)

- Is there a cleaner way to change Conv2d parameters while preserving the weights, better than what I ended up doing?