How do I convert predicted output pixel values between 0 and 1 into binary values (0 or 1) for each pixel in semantic segmentation?

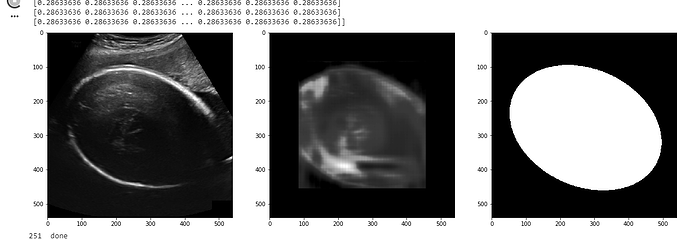

This is what my input, predicted, and label images look like. I want to turn the predicted image into a black-and-white image.
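A minimal sketch of the usual approach, thresholding, assuming the plotted prediction (`outputs[0, :, :, 0]` in the code below) already holds probabilities in [0, 1], for example from a sigmoid at the end of the model. The 0.5 cutoff is a common default, not necessarily the best value for every dataset, and `to_binary_mask` is a hypothetical helper name:

```python
import numpy as np

def to_binary_mask(prob_map, threshold=0.5):
    """Set each pixel to 1 if its predicted probability exceeds the threshold, else 0."""
    return (prob_map > threshold).astype(np.uint8)

# Toy 3x3 prediction with values in [0, 1], standing in for outputs[0, :, :, 0]
pred = np.array([[0.1, 0.7, 0.4],
                 [0.9, 0.5, 0.2],
                 [0.6, 0.3, 0.8]])
mask = to_binary_mask(pred)
print(mask)
```

In the plotting branch, `plt.imshow(to_binary_mask(outputs[0, :, :, 0]), cmap='gray')` would then show only black (0) and white (1) pixels. Note that a pixel exactly at 0.5 maps to 0 because the comparison is strict.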

I am implementing U-Net.

My training code:

```python
import torch
import matplotlib.pyplot as plt
from tqdm import tqdm_notebook

# model, criterion, optimizer, trainLoader, dice_coeff, num_epochs,
# batch_size_train, img_width and img_height are defined elsewhere

for epoch in tqdm_notebook(range(num_epochs)):
    total_train = 0
    correct_train = 0
    epoch_loss = 0
    DICE = 0

    for k, train_img in enumerate(trainLoader):
        inputs, labels = train_img
        inputs = inputs.unsqueeze_(0)
        inputs = inputs.reshape(batch_size_train, 1, img_width, img_height)
        labels = labels.unsqueeze_(0)
        labels = labels.reshape(batch_size_train, 1, img_width, img_height)
        model.train(mode=True)

        # Forward pass
        optimizer.zero_grad()  # zero the gradient buffers of all parameters
        inputs = inputs.cuda()
        labels = labels.cuda()
        outputs = model(inputs)  # outputs.shape = (batch_size, n_classes, img_cols, img_rows)

        labels = labels.permute(0, 2, 3, 1)    # labels.shape = (batch_size, img_cols, img_rows, n_classes)
        outputs = outputs.permute(0, 2, 3, 1)  # outputs.shape = (batch_size, img_cols, img_rows, n_classes)

        m = outputs.shape[0]  # m = batch size
        width_out = outputs.shape[2]
        height_out = outputs.shape[1]

        # Flatten outputs and labels to compute a pixel-wise loss
        outputs_new = outputs.reshape(m * width_out * height_out, 1)
        labels_new = labels.reshape(m * width_out * height_out, 1)
        loss = criterion(outputs_new, labels_new)
        epoch_loss += loss.item()

        loss.backward()   # backward pass
        optimizer.step()  # update the weights using the gradients

        DICE += dice_coeff(outputs[:, :, :, 0], labels[:, :, :, 0]).item()

        labels_new = labels_new.to(dtype=torch.long)
        _, predicted = torch.max(outputs_new.data, 1)
        total_train += labels_new.nelement()  # .nelement() returns the total number of elements (here, pixels)
        correct_train += predicted.eq(labels_new.squeeze().data).sum().item()
        train_accuracy = 100 * correct_train / total_train

        if k % 50 == 0:
            inputs = inputs.cpu().numpy()
            labels = labels.cpu().detach().numpy()
            outputs = outputs.cpu().detach().numpy()
            f = plt.figure(figsize=(20, 20))
            f.add_subplot(1, 3, 1)
            plt.imshow(inputs[0, 0, :, :], cmap='gray')
            f.add_subplot(1, 3, 2)
            plt.imshow(outputs[0, :, :, 0], cmap='gray')
            f.add_subplot(1, 3, 3)
            plt.imshow(labels[0, :, :, 0], cmap='gray')
            plt.show()

        if k == 1:
            break

    if epoch % 1 == 0:
        # k + 1 batches were processed (k is zero-based)
        print('Epoch [{}/{}], Loss: {:.4f}, DICE: {:.4f}'.format(epoch + 1, num_epochs, epoch_loss / (k + 1), DICE / (k + 1)),
              "Training Accuracy: %d %%" % train_accuracy)
        print()
```
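One likely reason the accuracy looks off: with a single output channel, `torch.max(outputs_new.data, 1)` takes the argmax over a dimension of size 1, so `predicted` is always 0 and the "accuracy" is just the fraction of background pixels. Below is a minimal sketch that thresholds instead, assuming a 0.5 cutoff and 0/1 values in `labels`; `binary_pixel_accuracy` and its `apply_sigmoid` flag are hypothetical names. Pass `apply_sigmoid=False` if the model already ends in a sigmoid (which the 0 to 1 range of the predictions suggests), and leave it `True` if the model returns raw logits:

```python
import torch

def binary_pixel_accuracy(outputs, labels, threshold=0.5, apply_sigmoid=True):
    """Count correctly classified pixels for a single-channel binary mask.

    outputs: raw logits (apply_sigmoid=True) or probabilities in [0, 1] (apply_sigmoid=False).
    labels:  ground-truth mask with 0/1 values, same shape as outputs.
    """
    probs = torch.sigmoid(outputs) if apply_sigmoid else outputs
    preds = (probs > threshold).float()          # 1 = foreground, 0 = background
    correct = (preds == labels.float()).sum().item()
    return correct, labels.numel()

# Toy batch in the permuted (batch, height, width, channels) layout: 1 image, 2x2 pixels, 1 channel
logits = torch.tensor([[[[2.0], [-1.0]],
                        [[0.5], [-3.0]]]])
labels = torch.tensor([[[[1.0], [0.0]],
                        [[0.0], [0.0]]]])
correct, total = binary_pixel_accuracy(logits, labels)
print(correct, total)  # 3 4
```

Inside the loop, the `torch.max` and `predicted.eq(...)` lines could then become `c, n = binary_pixel_accuracy(outputs, labels, apply_sigmoid=False)` followed by `correct_train += c` and `total_train += n`, using the permuted tensors before they are converted to NumPy.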


Also, do I need to use one-hot encoding to solve this problem?
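For a two-class (foreground/background) mask, PyTorch's built-in losses do not require one-hot labels. A minimal sketch of two common setups on random toy data; which one matches this model is an assumption, since `criterion` is not shown. Option 1 uses one output channel with `nn.BCEWithLogitsLoss` and float 0/1 labels of the same shape; option 2 uses two output channels with `nn.CrossEntropyLoss` and `long` class-index labels of shape (N, H, W):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, H, W = 2, 4, 4
mask = torch.randint(0, 2, (N, H, W))  # toy ground-truth mask with 0/1 class indices

# Option 1: one output channel + BCEWithLogitsLoss (labels: float 0/1, same shape as logits)
logits_1ch = torch.randn(N, 1, H, W)
loss_bce = nn.BCEWithLogitsLoss()(logits_1ch, mask.unsqueeze(1).float())
pred_1ch = (torch.sigmoid(logits_1ch) > 0.5).long().squeeze(1)  # (N, H, W) of 0/1

# Option 2: two output channels + CrossEntropyLoss (labels: long class indices, no one-hot)
logits_2ch = torch.randn(N, 2, H, W)
loss_ce = nn.CrossEntropyLoss()(logits_2ch, mask.long())
pred_2ch = torch.argmax(logits_2ch, dim=1)  # (N, H, W) of 0/1

print(loss_bce.item(), loss_ce.item())
print(pred_1ch.shape, pred_2ch.shape)
```

In both cases the binary prediction comes from the model output directly: a threshold on the sigmoid for one channel, or an argmax over the channel dimension for two.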