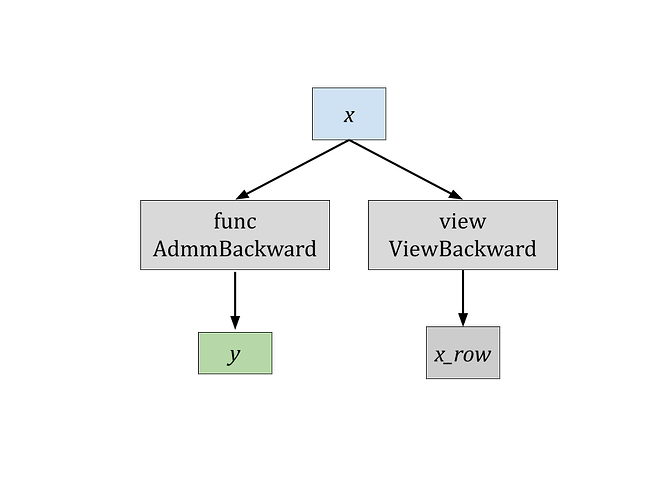

I want to compute the gradient of an output with respect to an input. However, when I take a view of the input after the forward pass, I get this error message:

RuntimeError: One of the differentiated Tensors appears to not have been used in the graph. Set allow_unused=True if this is the desired behavior.

Here is what I've tried:

    import torch

    func = torch.nn.Linear(5, 6)
    x = torch.rand(3, 5, requires_grad=True)

    for x_row in x.unbind(0):
        y_row = func(x_row)
        torch.autograd.grad(y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True)[0]
    # This is fine

    y = func(x)
    for x_row, y_row in zip(torch.split(x, 1, dim=0), torch.split(y, 1, dim=0)):
        torch.autograd.grad(y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True)[0]
    # RuntimeError

    y = func(x)
    for x_row, y_row in zip(x.unbind(0), y.unbind(0)):
        torch.autograd.grad(y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True)[0]
    # RuntimeError

    x_row = torch.rand(1, 5, requires_grad=True)
    x_row = x_row.flatten()
    y_row = func(x_row)
    torch.autograd.grad(y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True)[0]
    # This is fine

    x_row = torch.rand(1, 5, requires_grad=True)
    y_row = func(x_row)
    x_row = x_row.flatten()
    y_row = y_row.flatten()
    torch.autograd.grad(y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True)[0]
    # RuntimeError

How am I supposed to do this?
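
For reference, the pattern in the failing cases seems to be that the view (`unbind`, `split`, `flatten`) is taken from the input *after* the output was computed. That view is a new tensor that the output never depended on, so autograd reports it as unused. Below is a minimal sketch of two possible workarounds, not a definitive answer. It assumes the same `func` and `x` as in the post. The names `grads_a`, `grads_b`, and `x_rows` are hypothetical and introduced only for illustration.

```python
import torch

func = torch.nn.Linear(5, 6)
x = torch.rand(3, 5, requires_grad=True)

# Option A (assumption: slicing the gradient afterwards is acceptable).
# Differentiate with respect to the original tensor x, which is in the graph,
# then pick out the row that corresponds to the current output row.
y = func(x)
grads_a = []
for i, y_row in enumerate(y.unbind(0)):
    (g,) = torch.autograd.grad(
        y_row, [x], grad_outputs=torch.ones_like(y_row), create_graph=True
    )
    grads_a.append(g[i])  # rows other than i are zero for this y_row
grads_a = torch.stack(grads_a)

# Option B: take the views first, then build the output from those views,
# so each x_row is part of the graph that produces y.
x_rows = x.unbind(0)
y = func(torch.stack(x_rows))
grads_b = []
for x_row, y_row in zip(x_rows, y.unbind(0)):
    (g,) = torch.autograd.grad(
        y_row, [x_row], grad_outputs=torch.ones_like(y_row), create_graph=True
    )
    grads_b.append(g)
grads_b = torch.stack(grads_b)

# Both options should agree with each other.
print(torch.allclose(grads_a, grads_b))
```

Option A keeps a single batched forward pass and only changes what `grad` differentiates against. Option B keeps the per-row `x_row` inputs from the original loops, at the cost of re-stacking them before the forward pass.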