Based on this post, I am running some sanity checks to verify that the framework has no errors or bugs.

1.

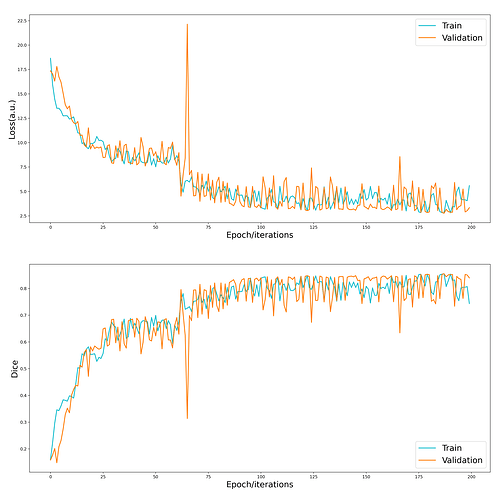

I created a training set with a single data point (a 3D image and its mask) to overfit the U-Net. The validation set is the same data point. After training for 200 epochs, I got the following curves.

Both the training and validation Dice scores plateau at about 80% (I expected 100%), and the validation loss curve follows the training loss curve very closely.

2.

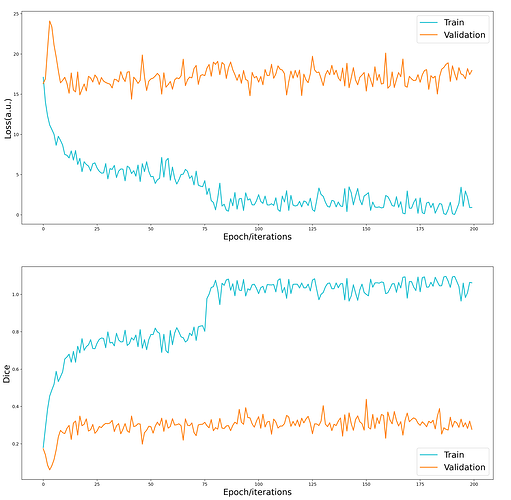

With the same loss, learning rate, and weight initialization, I replaced the validation set with completely different image patches. The result:

The validation curves behave more or less randomly (as they should, since the model has never seen those patches), but for some reason the Dice score goes above 1 on the training samples (all of which are the same image/mask pair).

The Dice overshoot may be due to the MSDL (multi-sourced Dice loss) I used, which does not take the background into account.
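One way to test this guess would be to log a plain, thresholded Dice score next to the value derived from the loss, since a Dice score computed on binary masks cannot exceed 1. Below is a minimal sketch, assuming a single foreground class and masks flattened to lists of 0/1. `dice_score` and `binarize` are hypothetical helper names, not part of the framework or of MSDL.

```python
def binarize(probs, threshold=0.5):
    """Turn per-voxel foreground probabilities into a hard 0/1 mask."""
    return [1 if p >= threshold else 0 for p in probs]


def dice_score(pred, target):
    """Hard Dice between two flat binary masks (sequences of 0/1).

    Dice = 2 * |pred AND target| / (|pred| + |target|), which always
    lies in [0, 1] when both inputs are binary.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    if total == 0:
        # Assumption: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * intersection / total


# Example: network outputs for 4 voxels vs. the ground-truth mask.
probs = [0.9, 0.7, 0.2, 0.1]
target = [1, 0, 0, 0]
score = dice_score(binarize(probs), target)
```

If this value stays at or below 1 while the logged one exceeds it, the overshoot comes from how the logged metric is computed rather than from the predictions.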

Do these test results confirm that the current framework works? Are there any other checks I should perform?

If the setup is correct, shouldn't the model overfit in the first case, with the Dice metric reaching nearly 1?