Hi,

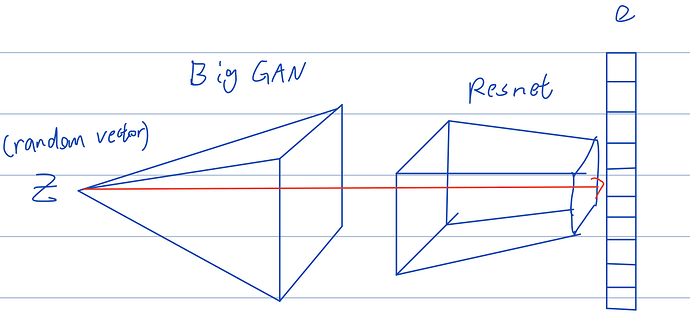

I am currently working on concatenating two pretrained models (BigGAN128 + ResNet18). The way I want to connect them is shown in the drawing. The problem is that BigGAN outputs a tensor of size [1, 3, 128, 128], while ResNet18 expects input of size [1, 3, 224, 224]. I’ve searched online and found that I can resize the tensor with `torch.nn.functional.interpolate()`. My question is: how can I add this as a layer in my customized model, or is there another way in `torch.nn` besides `interpolate` to do the resizing?
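A minimal sketch of two options, assuming bilinear resizing is acceptable: wrap `F.interpolate` in a small `nn.Module` (`Resize` below is a hypothetical helper, not part of PyTorch), or use the built-in `nn.Upsample` layer, which calls the same interpolation and can go straight into `nn.Sequential`. As a side note, torchvision's ResNet18 ends in `nn.AdaptiveAvgPool2d`, so it will also run on 128×128 inputs; resizing to 224×224 mainly keeps the input at the resolution the pretrained weights were trained on.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Resize(nn.Module):
    """Hypothetical helper: wraps F.interpolate so it can sit inside nn.Sequential."""

    def __init__(self, size=(224, 224)):
        super().__init__()
        self.size = size

    def forward(self, x):
        # Bilinear resize of an (N, C, H, W) tensor to (N, C, *self.size)
        return F.interpolate(x, size=self.size, mode="bilinear", align_corners=False)


# Built-in alternative: nn.Upsample is a ready-made nn.Module around the same operation
upsample = nn.Upsample(size=(224, 224), mode="bilinear", align_corners=False)

x = torch.randn(1, 3, 128, 128)  # stand-in for a BigGAN-128 output
print(Resize()(x).shape)  # torch.Size([1, 3, 224, 224])
print(upsample(x).shape)  # torch.Size([1, 3, 224, 224])
```

Both give identical results here; `nn.Upsample` saves writing a class, while a custom module is handy if you later want to add more preprocessing in the same layer.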

Any help and suggestions would be appreciated.

What I’ve done so far:

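```python
import logging

import torch
import torch.nn as nn
import torchvision.models as models
from pytorch_pretrained_biggan import (BigGAN, one_hot_from_names, truncated_noise_sample,
                                       save_as_images, display_in_terminal)

logging.basicConfig(level=logging.INFO)

# Load the pretrained ResNet18 classifier
resnet = models.resnet18(pretrained=True)

# Load the generator of the pretrained BigGAN-deep 128
GAN_model = BigGAN.from_pretrained('biggan-deep-128')

# This does not work as-is: nn.Sequential passes a single tensor to each module,
# but BigGAN's forward expects (z, class_vector, truncation), and its 128x128
# output still has to be resized before it reaches resnet.
final_model = nn.Sequential(GAN_model, resnet)
```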
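Because `nn.Sequential` feeds each module a single tensor while BigGAN's `forward` takes `(z, class_vector, truncation)`, one option is a small wrapper module with its own `forward`. The sketch below is an assumption-laden illustration, not a confirmed recipe: `GANClassifier` is a hypothetical name, and it assumes the generator returns images in [-1, 1] (BigGAN ends in a tanh) and that the classifier expects torchvision's ImageNet normalization, so it rescales and normalizes after resizing.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class GANClassifier(nn.Module):
    """Hypothetical wrapper: generator -> resize -> ImageNet normalization -> classifier.

    Assumes generator(z, class_vector, truncation) returns (N, 3, H, W) images in [-1, 1].
    """

    def __init__(self, generator, classifier, size=(224, 224)):
        super().__init__()
        self.generator = generator
        self.classifier = classifier
        self.size = size
        # ImageNet mean/std used by torchvision's pretrained classifiers;
        # buffers move with .to(device) but are not trained
        self.register_buffer("mean", torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1))
        self.register_buffer("std", torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1))

    def forward(self, z, class_vector, truncation):
        img = self.generator(z, class_vector, truncation)  # e.g. (N, 3, 128, 128) in [-1, 1]
        img = F.interpolate(img, size=self.size, mode="bilinear", align_corners=False)
        img = (img + 1) / 2                 # map [-1, 1] -> [0, 1]
        img = (img - self.mean) / self.std  # ImageNet normalization
        return self.classifier(img)


# Possible usage with the models from the question (downloads pretrained weights):
# model = GANClassifier(BigGAN.from_pretrained('biggan-deep-128'),
#                       models.resnet18(pretrained=True)).eval()
# truncation = 0.4
# z = torch.from_numpy(truncated_noise_sample(truncation=truncation, batch_size=1))
# y = torch.from_numpy(one_hot_from_names(['vase'], batch_size=1))
# with torch.no_grad():
#     logits = model(z, y, truncation)  # shape (1, 1000)
```

Calling `.eval()` on the wrapper also puts both submodules in eval mode, which matters for ResNet's batch-norm layers.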