I’m not sure what soft parameter sharing refers to. Could you give me an example how the workflow would look like?

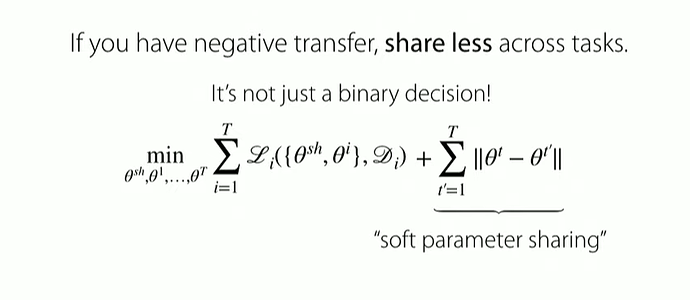

The set-up I had in mind comes from the multi-task learning literature where one trains several models at the same time with a regularization term that encourages the weights of each model to be similar.

I took the following figure from Chelsea Finn’s Stanford CS330 class https://www.youtube.com/watch?v=6stKGH6zI8g&list=PLoROMvodv4rMC6zfYmnD7UG3LVvwaITY5&index=3&t=2434s at the (47:50) mark.

While the above figure suggests comparing the weights of each model to that over every other model, I believe it is appropriate to simply compare the weights of each model to the average weights of the models (where the average is taken across all models).

Thanks

IT seems that the “soft parameter sharing” could be implemented as a regularization term.

You could follow this post, which gives you a general way to add a regularization term to your loss.

In your use case you would either have to calculate the regularization terms for all models or use the “average approach”.

Hi! Did this work for you? For me it didn’t and I’m wondering why not. I had to set p.requires_grad = False for every p in modelA.parameters() before instantiating myensamble = MyEnsamble() like this:

…

modelA = ModelA()

modelA.load_state_dict(torch.load(PATH))

for p in modelA.parameters():

p.requires_grad = False

modelB = ModelB()

myensamble = MyEnsamble(modelA, modelB)

…

and then it worked without the "with torch.no_grad(): " line.

@ptrblck , does this merging seems correct ? the only thing that is bugging me, is that input shapes of both models is different, and so when I pad the input to pass it to other, the gradient is lost. Does this cause issues in dynamic graph/ back propagation using loss.backward( ) ?

class MergedModel(nn.Module):

def __init__(self):

super(MergedModel, self).__init__()

_resnet = resnet50(pretrained=True)

for param in _resnet.parameters():

param.requires_grad = False

_resnet.fc = nn.Linear(2048, 1000)

self.model1 = _resnet

self.norm = nn.BatchNorm1d(1000)

self.relu = nn.ReLU()

self.dropout = nn.Dropout(p=0.2)

_inceptionresnetv2 = inceptionresnetv2()

for param in _inceptionresnetv2.parameters():

param.requires_grad = False

_inceptionresnetv2.last_linear = nn.Linear(1536, 1000)

self.model2 = _inceptionresnetv2

self.fc_linear = nn.Linear(1000, 6)

def forward(self, x):

# model1 takes (3, 224, 224)

x1 = self.model1(x)

x2 = F.pad(x, pad=(37, 38, 37, 38))

# F.pad returns tensor with no grad

x2.requires_grad = True

# model2 takes (3, 299, 299)

x2 = self.model2(x2)

x1 = self.dropout(self.relu(self.norm(x1)))

x2 = self.dropout(self.relu(self.norm(x2)))

return self.fc_linear(x1+x2)

Here is the training loop that I am using (in case that matters)

from tqdm.autonotebook import tqdm

from copy import deepcopy

from sklearn.metrics import f1_score

import gc

n_epoch = 40

model.train()

epoch_losses = {

'val': [],

'train': []

}

epoch_accs = {

'val': [],

'train': []

}

best_acc = 0.0

# best_model = deepcopy(model.state_dict())

for epoch in range(n_epoch):

epoch_loss = 0.0

epoch_acc = 0.0

outputs = []

targets = []

print('=' * 25, '[Epoch:', epoch+1, '/', n_epoch, ']', '=' * 25)

# scheduler.step()

for phase in ['train', 'val']:

if phase == 'train':

model.train()

else:

model.eval()

running_loss = 0.0

running_correct = 0.0

for data in tqdm(holidayDataloader[phase], position=0, leave=False):

img, label = data['img'], data['label']

img = img.to(device)

label = torch.tensor(label).to(device)

# print('shapes: ', img.shape, label.shape)

optimizer.zero_grad()

with torch.set_grad_enabled(phase=='train'):

# gc.collect()

outs = model(img)

preds = torch.argmax(outs, 1)

loss = criterion(outs, label)

if phase == 'train':

loss.backward()

optimizer.step()

running_loss += loss.item() * img.size()[0]

running_correct += torch.sum(preds == label.data)

if phase == 'val':

outputs.append(preds.cpu().detach().numpy())

targets.append(label.cpu().detach().numpy())

epoch_loss = running_loss / len(holidayDataloader[phase])

epoch_acc = running_correct.double() / len(holidayDataloader[phase])

print(f'[{phase}] => Acc: {epoch_acc :.2f} Loss: {epoch_loss :.2f}')

if phase == 'val':

outputs = np.concatenate(outputs)

targets = np.concatenate(targets)

print(f"[val] => f1-score {f1_score(outputs, targets, average='weighted') :.2f}")

epoch_losses[phase].append(epoch_loss)

epoch_accs[phase].append(epoch_acc)

if phase == 'val' and epoch_acc > best_acc:

best_acc = epoch_acc

# best_model = deepcopy(model.state_dict())

elif phase == 'train':

scheduler.step(epoch_loss)

F.pad will not detach the computation graph and if the inputs require gradients, the outputs will too.

In your example, you are passing the x input to F.pad, so I assume that the input to the model doesn’t require gradients (which is often the case).

Your code looks generally alright. I don’t know where inceptionresnetv2 is coming from and if .last_linear is the used attribute, but assume you’ve verified it already.

hi, @ptrblck I have approached your way in terms of combining two trained models.

class num_pytorch(nn.Module):

def __init__(self, input_size, num_h1, num_classes=1, init_weights=True):

super(num_pytorch, self).__init__()

self.input_size = input_size

self.num_h1 = num_h1

self.num_classes = num_classes

self.num_fc1 = torch.nn.Linear(self.input_size, self.num_h1)

self.fc2 = torch.nn.Linear(self.num_h1, self.num_classes)

if init_weights:

self._init_weights()

def forward(self, num_x):

num_x = self.num_fc1(num_x)

relu_act = F.relu(num_x)

output = self.fc2(relu_act)

return output

...

class img_pytorch(nn.Module):

def __init__(self, name, img_output, num_classes=1, head="fc", bn=False):

super(img_pytorch, self).__init__()

assert head in ["fc", "conv"], "classification head must be fc or conv"

self.img_features = self._get_conv_layers(name, bn=bn)

self.img_fc1 = self._get_fc_classifier(img_output)

self.fc2 = torch.nn.Linear(img_output, num_classes)

def forward(self, img_x):

img_x = self.img_features(img_x)

img_x = self.img_fc1(img_x)

output = self.fc2(img_x)

return output

...

class MyEnsemble(nn.Module):

def __init__(self, modelA, modelB):

super(MyEnsemble, self).__init__()

self.modelA = modelA

self.modelB = modelB

self.classifier = nn.Linear(2, 1)

def forward(self, x1, x2):

x1 = self.modelA(x1)

x2 = self.modelB(x2)

x = torch.cat((x1, x2), dim=1)

x = self.classifier(F.relu(x))

return x

And the bottom part looks like this:

num_model = num_pytorch(9, 256)

img_model = img_pytorch("vgg13", 64)

num_model.load_state_dict(torch.load("num_model.pt"))

img_model.load_state_dict(torch.load("img_model.pt"))

BASE_MODEL = MyEnsemble(num_model, img_model)

When I ran I got this error:

Traceback (most recent call last):

File "combined.py", line 230, in <module>

y_pred = BASE_MODEL(x_img_batch, x_num_batch)

File "/home/us/anaconda3/envs/pytorch_updated/lib/python3.8/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "combined.py", line 152, in forward

x1 = self.modelA(x1)

File "/home/us/anaconda3/envs/pytorch_updated/lib/python3.8/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "combined.py", line 64, in forward

num_x = self.num_fc1(num_x)

File "/home/us/anaconda3/envs/pytorch_updated/lib/python3.8/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/us/anaconda3/envs/pytorch_updated/lib/python3.8/site-packages/torch/nn/modules/linear.py", line 91, in forward

return F.linear(input, self.weight, self.bias)

File "/home/us/anaconda3/envs/pytorch_updated/lib/python3.8/site-packages/torch/nn/functional.py", line 1676, in linear

output = input.matmul(weight.t())

RuntimeError: mat1 dim 1 must match mat2 dim 0

From this I could see that there is a dimension mismatch somewhere, but ain’t able to find it. I haven’t had any errors while training num_pytorch separately, however when combined num_x = self.num_fc1(num_x) line is causing the error. Could you help me out here? Thanks.

Based on the error message the shape mismatch is raised in the num_fc1 layer from modelA, which is initialized with 9 input features. Did you make sure x1 is in the shape [batch_size, 9]?

yes, indeed. It’s

x_img_batch torch.Size([64, 3, 224, 224]), x_num_batch torch.Size([64, 9]), y_batch torch.Size([64, 1])

Your model works fine with these input shapes, as seen here:

class num_pytorch(nn.Module):

def __init__(self, input_size, num_h1, num_classes=1, init_weights=True):

super(num_pytorch, self).__init__()

self.input_size = input_size

self.num_h1 = num_h1

self.num_classes = num_classes

self.num_fc1 = torch.nn.Linear(self.input_size, self.num_h1)

self.fc2 = torch.nn.Linear(self.num_h1, self.num_classes)

def forward(self, num_x):

num_x = self.num_fc1(num_x)

relu_act = F.relu(num_x)

output = self.fc2(relu_act)

return output

class MyEnsemble(nn.Module):

def __init__(self, modelA, modelB):

super(MyEnsemble, self).__init__()

self.modelA = modelA

self.modelB = modelB

self.classifier = nn.Linear(2, 1)

def forward(self, x1, x2):

x1 = self.modelA(x1)

x2 = self.modelB(x2)

x = torch.cat((x1, x2), dim=1)

x = self.classifier(F.relu(x))

return x

model = MyEnsemble(num_pytorch(9, 256), num_pytorch(9, 256))

x = torch.randn(64, 9)

out = model(x, x)

Note that I had to remove the img_pytorch model, as it’s using undefined modules.

Thank you, as I also stated, when I use it separately, it works as expected, but when combined the error arises out of nothing. Can’t believe what’s wrong with it.

Could you post a complete code snippet including all missing layers, so that I could try to reproduce this issue?

class num_pytorch(nn.Module):

def __init__(self, input_size, num_h1, num_classes=1, init_weights=True):

super(num_pytorch, self).__init__()

self.input_size = input_size

self.num_h1 = num_h1

self.num_classes = num_classes

self.num_fc1 = torch.nn.Linear(self.input_size, self.num_h1)

self.fc2 = torch.nn.Linear(self.num_h1, self.num_classes) #

if init_weights:

self._init_weights()

def forward(self, num_x):

num_x = self.num_fc1(num_x)

relu_act = F.relu(num_x)

output = self.fc2(relu_act)

return output

def _get_fc_classifier(self, img_output):

return nn.Sequential(

nn.AdaptiveAvgPool2d((7, 7)),

nn.Flatten(),

nn.Linear(in_features=7 * 7 * 512, out_features=img_output, bias=True)

)

def _init_weights(self):

for module in self.modules():

if isinstance(module, nn.Conv2d):

nn.init.kaiming_normal_(module.weight.data, mode='fan_in', nonlinearity='relu')

elif isinstance(module, nn.BatchNorm2d):

nn.init.constant_(module.weight.data, 1)

nn.init.constant_(module.bias.data, 0)

elif isinstance(module, nn.Linear):

nn.init.normal_(module.weight.data, 0, 0.01)

nn.init.constant_(module.bias.data, 0)

class img_pytorch(nn.Module):

def __init__(self, name, img_output, num_classes=1, head="fc", bn=False):

super(img_pytorch, self).__init__()

assert head in ["fc", "conv"], "classification head must be fc or conv"

self.img_features = self._get_conv_layers(name, bn=bn)

self.img_fc1 = self._get_fc_classifier(img_output)

self.fc2 = torch.nn.Linear(img_output, num_classes) #

def forward(self, img_x):

img_x = self.img_features(img_x)

img_x = self.img_fc1(img_x)

output = self.fc2(img_x)

return output

def _get_conv_layers(self, name, bn=False):

cfg = config[name]

num_prev_channels = 3

layers = []

for layer in cfg:

if layer == "m":

layers.append(nn.MaxPool2d(kernel_size=2, stride=2))

else:

layers.append(nn.Conv2d(num_prev_channels, layer, kernel_size=3, stride=1, padding=1))

num_prev_channels = layer

if bn:

layers.append(nn.BatchNorm2d(layer))

layers.append(nn.ReLU(inplace=True))

return nn.Sequential(*layers)

def _get_fc_classifier(self, img_output):

return nn.Sequential(

nn.AdaptiveAvgPool2d((7, 7)),

nn.Flatten(),

nn.Linear(in_features=7 * 7 * 512, out_features=img_output, bias=True)

)

class MyEnsemble(nn.Module):

def __init__(self, modelA, modelB):

super(MyEnsemble, self).__init__()

self.modelA = modelA

self.modelB = modelB

self.classifier = nn.Linear(2, 1)

def forward(self, x1, x2):

x1 = self.modelA(x1)

x2 = self.modelB(x2)

x = torch.cat((x1, x2), dim=1)

x = self.classifier(F.relu(x))

return x

EDIT

config = { "vgg13": [64, 64, "m", 128, 128, "m", 256, 256, "m", 512, 512, "m", 512, 512, "m"] }

config seems to be a global variable and is unfortunately undefined.

I have edited the previos reply, please feel free to request if there’s anythig you need

Thanks for the update. Your model is working properly with these shapes:

config = { "vgg13": [64, 64, "m", 128, 128, "m", 256, 256, "m", 512, 512, "m", 512, 512, "m"] }

class num_pytorch(nn.Module):

def __init__(self, input_size, num_h1, num_classes=1, init_weights=True):

super(num_pytorch, self).__init__()

self.input_size = input_size

self.num_h1 = num_h1

self.num_classes = num_classes

self.num_fc1 = torch.nn.Linear(self.input_size, self.num_h1)

self.fc2 = torch.nn.Linear(self.num_h1, self.num_classes) #

if init_weights:

self._init_weights()

def forward(self, num_x):

num_x = self.num_fc1(num_x)

relu_act = F.relu(num_x)

output = self.fc2(relu_act)

return output

def _get_fc_classifier(self, img_output):

return nn.Sequential(

nn.AdaptiveAvgPool2d((7, 7)),

nn.Flatten(),

nn.Linear(in_features=7 * 7 * 512, out_features=img_output, bias=True)

)

def _init_weights(self):

for module in self.modules():

if isinstance(module, nn.Conv2d):

nn.init.kaiming_normal_(module.weight.data, mode='fan_in', nonlinearity='relu')

elif isinstance(module, nn.BatchNorm2d):

nn.init.constant_(module.weight.data, 1)

nn.init.constant_(module.bias.data, 0)

elif isinstance(module, nn.Linear):

nn.init.normal_(module.weight.data, 0, 0.01)

nn.init.constant_(module.bias.data, 0)

class img_pytorch(nn.Module):

def __init__(self, name, img_output, num_classes=1, head="fc", bn=False):

super(img_pytorch, self).__init__()

assert head in ["fc", "conv"], "classification head must be fc or conv"

self.img_features = self._get_conv_layers(name, bn=bn)

self.img_fc1 = self._get_fc_classifier(img_output)

self.fc2 = torch.nn.Linear(img_output, num_classes) #

def forward(self, img_x):

img_x = self.img_features(img_x)

img_x = self.img_fc1(img_x)

output = self.fc2(img_x)

return output

def _get_conv_layers(self, name, bn=False):

cfg = config[name]

num_prev_channels = 3

layers = []

for layer in cfg:

if layer == "m":

layers.append(nn.MaxPool2d(kernel_size=2, stride=2))

else:

layers.append(nn.Conv2d(num_prev_channels, layer, kernel_size=3, stride=1, padding=1))

num_prev_channels = layer

if bn:

layers.append(nn.BatchNorm2d(layer))

layers.append(nn.ReLU(inplace=True))

return nn.Sequential(*layers)

def _get_fc_classifier(self, img_output):

return nn.Sequential(

nn.AdaptiveAvgPool2d((7, 7)),

nn.Flatten(),

nn.Linear(in_features=7 * 7 * 512, out_features=img_output, bias=True)

)

class MyEnsemble(nn.Module):

def __init__(self, modelA, modelB):

super(MyEnsemble, self).__init__()

self.modelA = modelA

self.modelB = modelB

self.classifier = nn.Linear(2, 1)

def forward(self, x1, x2):

x1 = self.modelA(x1)

x2 = self.modelB(x2)

x = torch.cat((x1, x2), dim=1)

x = self.classifier(F.relu(x))

return x

num_model = num_pytorch(9, 256)

img_model = img_pytorch("vgg13", 64)

BASE_MODEL = MyEnsemble(num_model, img_model)

x_img_batch = torch.randn([64, 3, 224, 224])

x_num_batch = torch.randn([64, 9])

out = BASE_MODEL(x_num_batch, x_img_batch)

out.backward(torch.ones_like(out))

and no shape mismatch error is raised.

Thank you for your time. I have no idea what to do actually. There is nothing wrong in the code and still I can’t get around it

Since the posted code works fine, I guess there might be (small) differences between the code you shared here and the one you are using in your setup.

Could you compare both and check, if you are seeing the same issues using my code snippet?

your code is working just fine. I’ll inspect my code once again and come back to you

Thanks so much:D I am trying to use this code with densenet121 and vgg16 and I am getting errors.

RuntimeError: Expected 4 dimensional input for a 4 dimensional weight of 64,3,3,3 but got 1,4096 instead.

VGG16->

for param in model.parameters():

param.requires_grad = False

n_inputs = model.classifier[6].in_features

print(n_inputs)

print(model.classifier[6].out_features)

print(n_classes)

model.classifier[6] = nn.Sequential(

nn.Linear(n_inputs, 256), nn.ReLU(), nn.Dropout(0.2),

nn.Linear(256, n_classes), nn.LogSoftmax(dim=1))

and for Densenet121->

for param in model.parameters():

param.requires_grad = False

n_inputs = model.classifier.in_features

model.classifier = nn.Linear(n_inputs, 2)

class MyEnsemble(nn.Module):

def __init__(self, modelA, modelB):

super(MyEnsemble, self).__init__()

self.modelA = modelA

self.modelB = modelB

self.classifier = nn.Linear(4, 2)

def forward(self, x1, x2):

x1 = self.modelA(x1)

x2 = self.modelB(x2)

x = torch.cat((x1, x2), dim=1)

x = self.classifier(F.relu(x))

return x

model_ensemb = MyEnsemble(model_vgg, model_dense)

x1, x2 = torch.randn(1, 256), torch.randn(1, 64)

output = model_ensemb(x1, x2)

I think there is a problem with out_features but I couldn’t find it. I am a beginner. Thanks for your response.