Hi guys,

Here's my problem: I created two classes, `InceptionA` and `InceptionB`, and now want to train them in a parallel manner and in a combined (stacked) manner, using another class that wraps them.

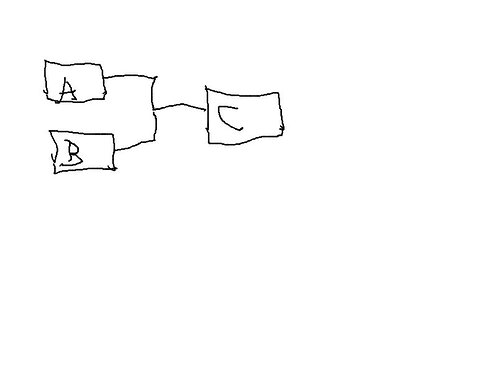

Parallel Manner Structure

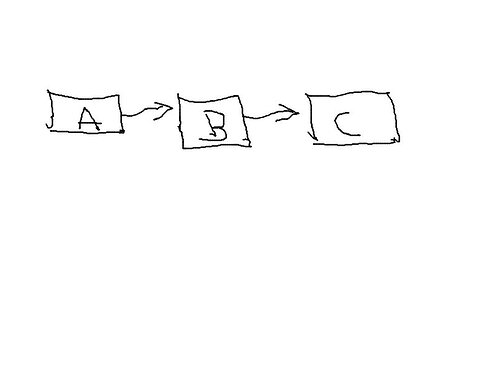

Stacked Manner Structure

```python
import torch
import torch.nn as nn


class InceptionA(nn.Module):
    """Four parallel branches. Every branch keeps the input's spatial size,
    so their outputs can be concatenated along the channel dimension."""

    def __init__(self):
        super(InceptionA, self).__init__()

        # Branch 1: 1x1 conv -> 32 channels
        self.conv1x1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=32, kernel_size=1),
            nn.BatchNorm2d(32, eps=0.001),
            nn.ReLU())

        # Branch 2: 1x1 conv -> 32 channels, then 5x5 conv (padding=2) -> 64 channels
        self.conv1x1_1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=32, kernel_size=1),
            nn.BatchNorm2d(32, eps=0.001),
            nn.ReLU())
        self.conv5x5 = nn.Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),
            nn.BatchNorm2d(64, eps=0.001),
            nn.ReLU())

        # Branch 3: 1x1 conv -> 64, 3x3 conv (no padding, shrinks by 2) -> 128,
        # 3x3 conv (padding=2, grows back by 2) -> 196 channels
        self.conv1x1_2 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=1),
            nn.BatchNorm2d(64, eps=0.001),
            nn.ReLU())
        self.conv3x3 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3),
            nn.BatchNorm2d(128, eps=0.001),
            nn.ReLU())
        self.conv3x3_1 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=196, kernel_size=3, padding=2),
            nn.BatchNorm2d(196, eps=0.001),
            nn.ReLU())

        # Branch 4: 3x3 max-pool (stride=1, padding=1), then 1x1 conv -> 64 channels
        self.pooling = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.conv1x1_3 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=1, padding=0),
            nn.BatchNorm2d(64, eps=0.001),
            nn.ReLU())

    def forward(self, x):
        # x: [batch, 1, H, W]; the first conv of every branch expects in_channels=1.
        # Shapes in the comments are for a 28x28 input.

        # 1st branch
        inp1 = self.conv1x1(x)        # [batch, 32, 28, 28]

        # 2nd branch
        inp2 = self.conv1x1_1(x)
        inp2 = self.conv5x5(inp2)     # [batch, 64, 28, 28]

        # 3rd branch
        inp3 = self.conv1x1_2(x)
        inp3 = self.conv3x3(inp3)     # [batch, 128, 26, 26]
        inp3 = self.conv3x3_1(inp3)   # [batch, 196, 28, 28]

        # 4th branch
        inp4 = self.pooling(x)
        inp4 = self.conv1x1_3(inp4)   # [batch, 64, 28, 28]

        output = [inp1, inp2, inp3, inp4]
        out_1 = torch.cat(output, 1)  # [batch, 356, 28, 28]
        return out_1
```
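A quick way to double-check the `InceptionA` shapes by hand is the output-size formula that `nn.Conv2d` and `nn.MaxPool2d` share for dilation 1 (and `ceil_mode=False` for pooling): out = floor((in + 2*padding - kernel) / stride) + 1. The sketch below assumes a single-channel 28x28 input, which is what the `28x28` comments in the code suggest; `out_size` is just a helper name made up for this check.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of nn.Conv2d / nn.MaxPool2d (dilation 1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1

H = 28  # assumed input height/width

# (channels, spatial size) at the end of each InceptionA branch
b1 = (32, out_size(H, 1))                                          # 1x1 conv
b2 = (64, out_size(out_size(H, 1), 5, padding=2))                  # 1x1, then 5x5 pad 2
b3 = (196, out_size(out_size(out_size(H, 1), 3), 3, padding=2))    # 1x1, 3x3, 3x3 pad 2
b4 = (64, out_size(out_size(H, 3, padding=1), 1))                  # pool pad 1, then 1x1

branches_a = [b1, b2, b3, b4]
channels_a = sum(c for c, _ in branches_a)
sizes_a = {s for _, s in branches_a}  # torch.cat on dim 1 needs a single size here
print(channels_a, sizes_a)  # 356 {28}
```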

```python
class InceptionB(nn.Module):
    """Three parallel branches that downsample with stride 2 and no padding
    (28x28 -> 13x13), then concatenate along the channel dimension."""

    def __init__(self):
        super(InceptionB, self).__init__()

        # Branch 1: 3x3 conv, stride 2 -> 384 channels, 13x13
        self.conv3x3 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=384, kernel_size=3, stride=2, padding=0),
            nn.BatchNorm2d(384, eps=0.001),
            nn.ReLU())

        # Branch 2: 1x1 conv -> 64 (28x28), 3x3 conv -> 96 (28x28),
        # 3x3 conv with stride 2 -> 128 (13x13)
        self.conv1x1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=1, stride=1, padding=0),
            nn.BatchNorm2d(64, eps=0.001),
            nn.ReLU())
        self.conv3x3_1 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=96, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(96, eps=0.001),
            nn.ReLU())
        self.conv3x3_2 = nn.Sequential(
            nn.Conv2d(in_channels=96, out_channels=128, kernel_size=3, stride=2, padding=0),
            nn.BatchNorm2d(128, eps=0.001),
            nn.ReLU())

        # Branch 3: 3x3 max-pool, stride 2 -> 13x13; keeps the input channel count (1 here)
        self.pooling = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)

    def forward(self, x):
        # x: [batch, 1, 28, 28]
        inp1 = self.conv3x3(x)        # [batch, 384, 13, 13]

        inp2 = self.conv1x1(x)        # [batch, 64, 28, 28]
        inp2 = self.conv3x3_1(inp2)   # [batch, 96, 28, 28]
        inp2 = self.conv3x3_2(inp2)   # [batch, 128, 13, 13]

        inp3 = self.pooling(x)        # [batch, 1, 13, 13]

        output = [inp1, inp2, inp3]
        out2 = torch.cat(output, 1)   # [batch, 513, 13, 13]
        return out2
```
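The same hand check for `InceptionB`, again a sketch assuming a single-channel 28x28 input. Note that the max-pool branch adds no learned channels: it passes through however many channels the input has, which is 1 here. `out_size` is the same made-up helper as before, repeated so the snippet runs on its own.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of nn.Conv2d / nn.MaxPool2d (dilation 1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1

H = 28      # assumed input height/width
IN_CH = 1   # InceptionB as written takes a single-channel input

# (channels, spatial size) at the end of each InceptionB branch
b1 = (384, out_size(H, 3, stride=2))                                          # 3x3 stride 2
b2 = (128, out_size(out_size(out_size(H, 1), 3, padding=1), 3, stride=2))     # 1x1, 3x3, 3x3 s2
b3 = (IN_CH, out_size(H, 3, stride=2))                                        # max-pool s2

branches_b = [b1, b2, b3]
channels_b = sum(c for c, _ in branches_b)
sizes_b = {s for _, s in branches_b}
print(channels_b, sizes_b)  # 513 {13}
```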

Presently, for a batch of single-channel 28x28 images, the output of `InceptionA` is `torch.Size([128, 356, 28, 28])`, where 128 is the batch size, and the output of `InceptionB` is `torch.Size([128, 513, 13, 13])`.
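What these two shapes mean for the two arrangements can be worked out with a bit of arithmetic. This is only a sketch under stated assumptions: it takes the reported shapes at face value, and flattening or global average pooling are just two possible ways to join feature maps of different sizes, not necessarily what the structure figures show. For the stacked case it assumes `InceptionB` is modified so its first convs take 356 input channels instead of 1.

```python
A_CH, A_HW = 356, 28   # reported InceptionA output: [batch, 356, 28, 28]
B_CH, B_HW = 513, 13   # reported InceptionB output: [batch, 513, 13, 13]

# Parallel: both modules see the same input. torch.cat(..., dim=1) needs equal
# spatial sizes, and 28 != 13, so the two outputs cannot be joined directly.
can_concat_channels = A_HW == B_HW

# Option 1 (assumption): flatten each output per sample, then concatenate
parallel_flat_features = A_CH * A_HW * A_HW + B_CH * B_HW * B_HW
# Option 2 (assumption): global-average-pool each output to 1x1, then concatenate
parallel_pooled_features = A_CH + B_CH

# Stacked (A -> B): InceptionB's first convs would need in_channels=356, and its
# max-pool branch would pass all 356 channels through unchanged.
stacked_ch = 384 + 128 + A_CH
stacked_hw = (A_HW - 3) // 2 + 1   # 3x3 kernel, stride 2, no padding

print(can_concat_channels, parallel_flat_features, parallel_pooled_features)  # False 365801 869
print(stacked_ch, stacked_hw)  # 868 13
```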

Thanks in advance!

Stay safe.