Hi everyone!

I’m a newbie to PyTorch and I’m looking for a clean way to compare the weights of corresponding layers in two models.

I’m currently using an AlexNet and I’m freezing all the layers except for the convolutional layers.

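```python
import torch.nn as nn
from torchvision.models import alexnet

# (newer torchvision: alexnet(weights=AlexNet_Weights.DEFAULT))
net = alexnet(pretrained=True)

# freeze all the layers
for param in net.parameters():
    param.requires_grad = False

# replace the last layer (NUM_CLASSES is defined elsewhere);
# a freshly created layer is trainable by default
net.classifier[6] = nn.Linear(4096, NUM_CLASSES)

# unfreeze only the fully connected layers
# (requires_grad must be set on the parameters, not on the modules)
for param in net.classifier.parameters():
    param.requires_grad = True
```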
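Note that assigning `module.requires_grad = True` on an `nn.Module` only creates a plain Python attribute and leaves the module's parameters untouched; the flag has to be set on each parameter (or via `module.requires_grad_(True)`). A quick way to check what the optimizer will actually update is to list the parameters whose `requires_grad` flag is set. A minimal sketch, assuming a small hypothetical stand-in model and a hypothetical helper `trainable_parameter_names`:

```python
from typing import List

import torch.nn as nn


def trainable_parameter_names(model: nn.Module) -> List[str]:
    """Names of the parameters that currently have requires_grad=True."""
    return [name for name, p in model.named_parameters() if p.requires_grad]


# Hypothetical toy model: a conv "feature" layer followed by a linear "classifier".
model = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3), nn.Flatten(), nn.Linear(4, 2))

# Freeze everything.
for p in model.parameters():
    p.requires_grad = False

# Setting the attribute on the module does NOT unfreeze its parameters...
model[2].requires_grad = True
print(trainable_parameter_names(model))  # []

# ...but setting the flag on the parameters (here via requires_grad_) does.
model[2].requires_grad_(True)
print(trainable_parameter_names(model))  # ['2.weight', '2.bias']
```

Called on the AlexNet above, `trainable_parameter_names(net)` should list only `classifier.*` parameters if the convolutional layers are frozen.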

After the training phase, I save the best net (the one with the highest accuracy on the validation set) and create some histograms with TensorBoard:

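```python
from torch.utils.tensorboard import SummaryWriter
from torchvision.models import alexnet

basic_net = alexnet(pretrained=True)  # untouched pretrained reference

tb = SummaryWriter(comment="Compare Conv Layers")
for j, i in enumerate([0, 3, 6, 8, 10]):
    tb.add_histogram("Pretrained conv layer", basic_net.features[i].weight.detach().cpu().numpy(), j)
tb.close()

# best_net_3_2_1 is the best model saved during training
tb = SummaryWriter(comment="Compare Conv Layers")
for j, i in enumerate([0, 3, 6, 8, 10]):
    tb.add_histogram("My conv layer", best_net_3_2_1.features[i].weight.detach().cpu().numpy(), j)
tb.close()  # close() also flushes any pending events
```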

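The indices `[0, 3, 6, 8, 10]` select just the convolutional layers of `features`, and `j` is used as the histogram step, so each step corresponds to one conv layer.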
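Rather than hard-coding the positions, they can be derived from the layer types, which keeps the loop correct if the architecture changes. A minimal sketch, where the helper `conv_indices` is hypothetical and the toy container only mimics AlexNet's layout; on torchvision's AlexNet, `conv_indices(net.features)` should give the same `[0, 3, 6, 8, 10]`:

```python
from typing import List

import torch.nn as nn


def conv_indices(features: nn.Sequential) -> List[int]:
    """Positions of the nn.Conv2d modules inside a Sequential container."""
    return [i for i, module in enumerate(features) if isinstance(module, nn.Conv2d)]


# Hypothetical stand-in with the same layer pattern as AlexNet's `features`
# (conv, relu, pool, conv, relu, pool, conv, relu, conv, relu, conv, relu, pool);
# channel sizes are arbitrary because no forward pass is run.
toy_features = nn.Sequential(
    nn.Conv2d(3, 8, 3), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(8, 8, 3), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(8, 8, 3), nn.ReLU(),
    nn.Conv2d(8, 8, 3), nn.ReLU(),
    nn.Conv2d(8, 8, 3), nn.ReLU(), nn.MaxPool2d(2),
)
print(conv_indices(toy_features))  # [0, 3, 6, 8, 10]
```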

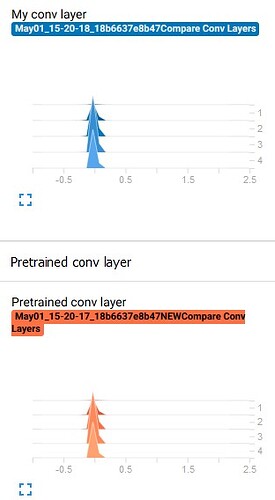

This is what I obtained in TensorBoard. Is there a better way to compare the weights?
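Plotting the two weight distributions side by side can make small changes hard to spot. One alternative, sketched below under the assumption that both models share the same architecture (layers are matched by module name, e.g. `features.0`), is to compare each pair of conv layers directly: a few scalar summaries per layer (largest absolute difference, relative change `||W_new - W_ref|| / ||W_ref||`, cosine similarity) plus a TensorBoard histogram of the element-wise difference. The function names are hypothetical; `writer` is expected to be a `SummaryWriter`, but any object with a compatible `add_histogram` method works.

```python
from typing import Dict

import torch
import torch.nn as nn
import torch.nn.functional as F


def compare_conv_weights(ref: nn.Module, other: nn.Module) -> Dict[str, Dict[str, float]]:
    """Per-Conv2d summary of how far `other`'s weights are from `ref`'s."""
    other_modules = dict(other.named_modules())
    report = {}
    for name, module in ref.named_modules():
        if not isinstance(module, nn.Conv2d):
            continue
        # Move both to CPU so models on different devices can be compared.
        w_ref = module.weight.detach().cpu().flatten()
        w_new = other_modules[name].weight.detach().cpu().flatten()
        diff = w_new - w_ref
        report[name] = {
            "max_abs_diff": diff.abs().max().item(),
            # 0.0 means the layer is unchanged; scale-free across layers.
            "rel_change": (diff.norm() / w_ref.norm()).item(),
            "cosine": F.cosine_similarity(w_ref, w_new, dim=0).item(),
        }
    return report


def log_conv_weight_deltas(ref: nn.Module, other: nn.Module, writer, step: int = 0) -> None:
    """Log a histogram of (other - ref) weights for every Conv2d layer."""
    other_modules = dict(other.named_modules())
    for name, module in ref.named_modules():
        if isinstance(module, nn.Conv2d):
            delta = other_modules[name].weight.detach().cpu() - module.weight.detach().cpu()
            writer.add_histogram(f"conv_weight_delta/{name}", delta, global_step=step)


# Usage with two toy models: copy the weights, then nudge a single layer.
torch.manual_seed(0)
ref_model = nn.Sequential(nn.Conv2d(3, 4, 3), nn.ReLU(), nn.Conv2d(4, 4, 3))
new_model = nn.Sequential(nn.Conv2d(3, 4, 3), nn.ReLU(), nn.Conv2d(4, 4, 3))
new_model.load_state_dict(ref_model.state_dict())
with torch.no_grad():
    new_model[2].weight.add_(0.01)

for layer, stats in compare_conv_weights(ref_model, new_model).items():
    print(layer, stats)
```

With the models from the post this would be `compare_conv_weights(basic_net, best_net_3_2_1)` and `log_conv_weight_deltas(basic_net, best_net_3_2_1, SummaryWriter(comment="Conv weight deltas"))`, followed by `close()` on the writer. If the convolutional layers really stayed frozen during training, every `max_abs_diff` should be exactly 0 and every delta histogram a single spike at zero.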