I have built a convolutional autoencoder to reconstruct custom images (the learned features will later be used for clustering). However, the reconstructed images are very poor, and I cannot work out what the problem is. The original images are 240x270 and are resized to 224x224.

The autoencoder class is as follows:

    import torch.nn as nn

    class autoencoder(nn.Module):
        def __init__(self):
            super().__init__()
            # Shape comments assume a 3x224x224 input (b = batch size)
            self.encoder = nn.Sequential(
                nn.Conv2d(3, 16, 7, stride=3, padding=1),    # b, 16, 74, 74
                nn.ReLU(True),
                nn.Conv2d(16, 32, 7, stride=3, padding=1),   # b, 32, 24, 24
                nn.ReLU(True),
                nn.Conv2d(32, 64, 3, stride=2, padding=1),   # b, 64, 12, 12
                nn.ReLU(True),
                nn.Conv2d(64, 128, 3, stride=2, padding=1),  # b, 128, 6, 6
                # nn.ReLU(True),
                # nn.Conv2d(128, 256, 2, stride=5, padding=1)
            )
            self.decoder = nn.Sequential(
                # nn.ConvTranspose2d(256, 128, 2),
                # nn.ReLU(True),
                nn.ConvTranspose2d(128, 64, 3, stride=2, padding=1),  # b, 64, 11, 11
                nn.ReLU(True),
                nn.ConvTranspose2d(64, 32, 3, stride=2, padding=0),   # b, 32, 23, 23
                nn.ReLU(True),
                nn.ConvTranspose2d(32, 16, 5, stride=3, padding=0),   # b, 16, 71, 71
                nn.ReLU(True),
                nn.ConvTranspose2d(16, 8, 7, stride=3, padding=0, output_padding=1),  # b, 8, 218, 218
                nn.ReLU(True),
                nn.ConvTranspose2d(8, 3, 7, stride=1, padding=0),     # b, 3, 224, 224
                nn.Sigmoid()  # squashes the output into [0, 1]
            )

        def forward(self, x):
            x = self.encoder(x)
            x = self.decoder(x)
            return x

The model summary is as follows:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1           [-1, 16, 74, 74]           2,368
                  ReLU-2           [-1, 16, 74, 74]               0
                Conv2d-3           [-1, 32, 24, 24]          25,120
                  ReLU-4           [-1, 32, 24, 24]               0
                Conv2d-5           [-1, 64, 12, 12]          18,496
                  ReLU-6           [-1, 64, 12, 12]               0
                Conv2d-7            [-1, 128, 6, 6]          73,856
       ConvTranspose2d-8           [-1, 64, 11, 11]          73,792
                  ReLU-9           [-1, 64, 11, 11]               0
      ConvTranspose2d-10           [-1, 32, 23, 23]          18,464
                 ReLU-11           [-1, 32, 23, 23]               0
      ConvTranspose2d-12           [-1, 16, 71, 71]          12,816
                 ReLU-13           [-1, 16, 71, 71]               0
      ConvTranspose2d-14          [-1, 8, 218, 218]           6,280
                 ReLU-15          [-1, 8, 218, 218]               0
      ConvTranspose2d-16          [-1, 3, 224, 224]           1,179
              Sigmoid-17          [-1, 3, 224, 224]               0
    ================================================================
    Total params: 232,371
    Trainable params: 232,371
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 11.50
    Params size (MB): 0.89
    Estimated Total Size (MB): 12.96
    ----------------------------------------------------------------
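
The output shapes in the summary can be cross-checked by hand with the standard output-size formulas for `Conv2d` and `ConvTranspose2d` (with dilation 1). Below is a minimal sketch in plain Python. The helper names (`conv2d_out`, `conv_transpose2d_out`, `trace_sizes`) are made up for this check and are not part of my model code; the layer settings are copied from the class above.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output size of a Conv2d layer (dilation 1) along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose2d_out(size, kernel, stride=1, padding=0, output_padding=0):
    """Output size of a ConvTranspose2d layer (dilation 1) along one spatial dimension."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# (kernel, stride, padding) for the four encoder convolutions
encoder_layers = [(7, 3, 1), (7, 3, 1), (3, 2, 1), (3, 2, 1)]
# (kernel, stride, padding, output_padding) for the five decoder layers
decoder_layers = [(3, 2, 1, 0), (3, 2, 0, 0), (5, 3, 0, 0), (7, 3, 0, 1), (7, 1, 0, 0)]


def trace_sizes(input_size):
    """Return the spatial size after the input and after every conv layer."""
    sizes = [input_size]
    for kernel, stride, padding in encoder_layers:
        sizes.append(conv2d_out(sizes[-1], kernel, stride, padding))
    for kernel, stride, padding, output_padding in decoder_layers:
        sizes.append(
            conv_transpose2d_out(sizes[-1], kernel, stride, padding, output_padding)
        )
    return sizes


print(trace_sizes(224))
```

For a 224x224 input this traces 224, 74, 24, 12, 6 through the encoder and 11, 23, 71, 218, 224 through the decoder, which agrees with the summary, so the layer shapes themselves do not appear to be the issue.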

The normalization helpers and the dataset class are as follows:

    import os

    import cv2
    import numpy as np
    import torch

    # ImageNet channel statistics, shaped to broadcast over (b, 3, H, W)
    mean = torch.tensor((0.485, 0.456, 0.406)).reshape(1, 3, 1, 1)
    std = torch.tensor((0.229, 0.224, 0.225)).reshape(1, 3, 1, 1)

    def normalize(tensorInput, mean=mean, std=std, device=None):
        # Default to the input's device so the helper works on both CPU and GPU
        device = tensorInput.device if device is None else device
        return tensorInput.sub(mean.to(device)).div(std.to(device))

    def denormalize(tensorInput, mean=mean, std=std, device=None):
        device = tensorInput.device if device is None else device
        return tensorInput.mul(std.to(device)).add(mean.to(device))

    class DatasetLoader(torch.utils.data.Dataset):
        def __init__(self, root, transforms=None):
            self.root = root
            self.transforms = transforms  # stored but not applied in __getitem__
            self.imgs = list(sorted(os.listdir(root)))

        def __getitem__(self, idx):
            img_path = os.path.join(self.root, self.imgs[idx])
            img = cv2.imread(img_path)
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            # Resize to the 224x224 model input (this was (32, 32)) and scale to [0, 1]
            img = cv2.resize(img, (224, 224)) / 255.0
            img = np.transpose(img, [2, 0, 1]).astype(np.float32)  # HWC -> CHW
            return torch.from_numpy(img)

        def __len__(self):
            return len(self.imgs)

The data loading and training script is as follows:

    import matplotlib.pyplot as plt
    import torch
    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary()

    # get_transform, show_and_save_figure, batchSize, learning_rate and
    # num_epochs are defined elsewhere in my script.

    # Raw string so the backslashes in the Windows path are not read as escapes
    full_dataset = DatasetLoader(r'D:\images\Bright_240_270', get_transform(train=True))

    torch.manual_seed(1)
    indices = torch.randperm(len(full_dataset)).tolist()
    # Both subsets are taken from the full dataset: the last 50 shuffled
    # images are held out for testing, the rest are used for training.
    dataset = torch.utils.data.Subset(full_dataset, indices[:-50])
    dataset_test = torch.utils.data.Subset(full_dataset, indices[-50:])

    data_loader = torch.utils.data.DataLoader(
        dataset, batch_size=batchSize, shuffle=True, num_workers=0)
    data_loader_test = torch.utils.data.DataLoader(
        dataset_test, batch_size=1, shuffle=False, num_workers=0)

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = autoencoder().to(device)
    summary(model, (3, 224, 224))

    criterion = nn.MSELoss()
    # criterion = nn.BCELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, weight_decay=1e-5)

    trainLoss = []
    for epoch in range(num_epochs):
        total_loss = 0.0  # reset every epoch so the average is per epoch
        data_iter = 0
        for data in data_loader:
            # print(data)
            target = data.to(device)
            # print("Min Value of input Image = ", torch.min(target))
            # print("Max Value of input Image = ", torch.max(target))
            img = normalize(target)
            # ===================forward=====================
            output = model(img)
            print(data.min(), data.max(), output.min().item(), output.max().item())
            output = denormalize(output)
            # print("Input Image shape = ", img.shape)
            # print("Output Image shape = ", output.shape)
            loss = criterion(output, target)
            show_and_save_figure(data[0], output[0], 'AutoEncoder')
            # ===================backward====================
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            data_iter = data_iter + 1
            if data_iter % 10 == 0:
                print(f"Data Iteration = {data_iter}")
            total_loss += loss.item()
        # ===================log========================
        total_loss /= len(data_loader)
        trainLoss.append(total_loss)  # one mean-loss value per epoch
        plt.plot(trainLoss)
        plt.xlabel('Epochs')
        plt.ylabel('Loss')
        plt.title('Training loss')
        plt.show()
        print('epoch [{}/{}], loss:{:.4f}'
              .format(epoch + 1, num_epochs, total_loss))
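
One thing that can be checked without a GPU is the range of values that reaches the loss. The decoder ends in `nn.Sigmoid()`, so `output` lies in [0, 1] before `denormalize` is applied, and the target `data` also lies in [0, 1]. The small sketch below assumes the same mean/std constants as in my helpers and uses a made-up helper name, `denormalized_range`. It prints the per-channel interval that `denormalize(output)` can cover:

```python
mean = (0.485, 0.456, 0.406)
std = (0.229, 0.224, 0.225)


def denormalized_range(mean, std, lo=0.0, hi=1.0):
    """Per-channel (min, max) of x * std + mean when x ranges over [lo, hi]."""
    return [(round(lo * s + m, 3), round(hi * s + m, 3)) for m, s in zip(mean, std)]


# lo=0.0 and hi=1.0 are the bounds of a sigmoid output
for channel, (low, high) in zip("RGB", denormalized_range(mean, std)):
    print(f"{channel}: [{low}, {high}]")
```

If the printed intervals are narrower than [0, 1], very dark and very bright target pixels cannot be matched exactly by the denormalized output, which may be part of what I am seeing.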

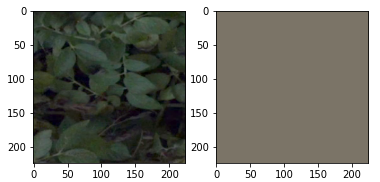

The original image and the generated image are shown above.

Where is the problem? Why am I not able to reconstruct the images?