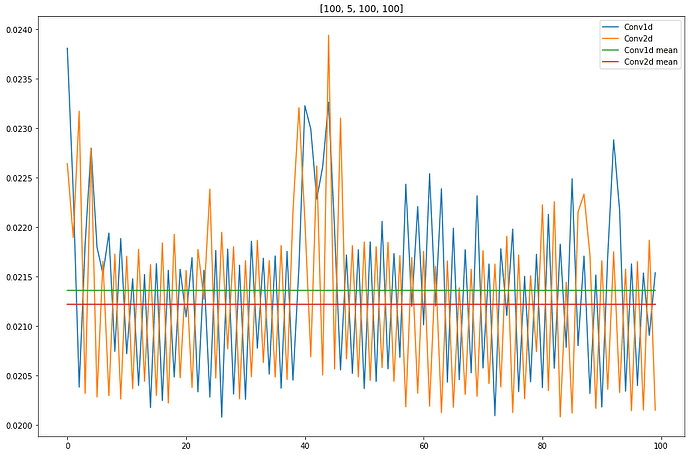

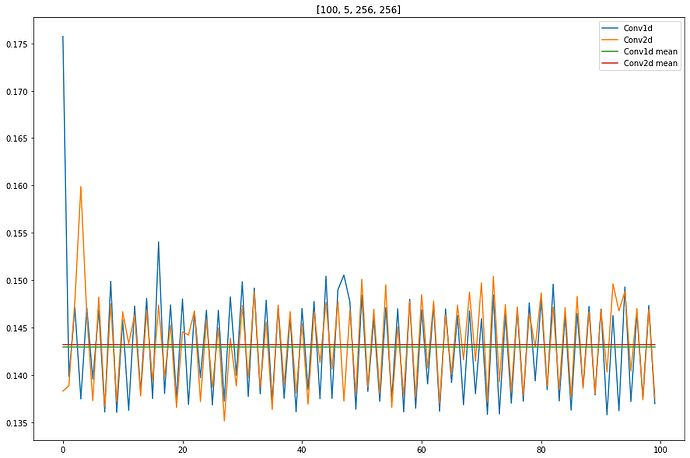

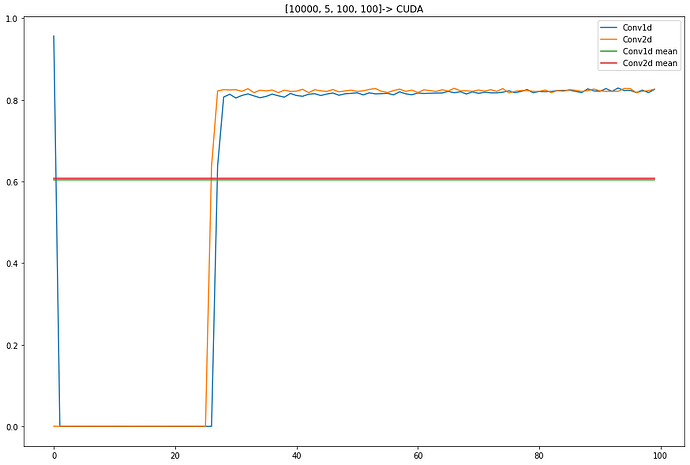

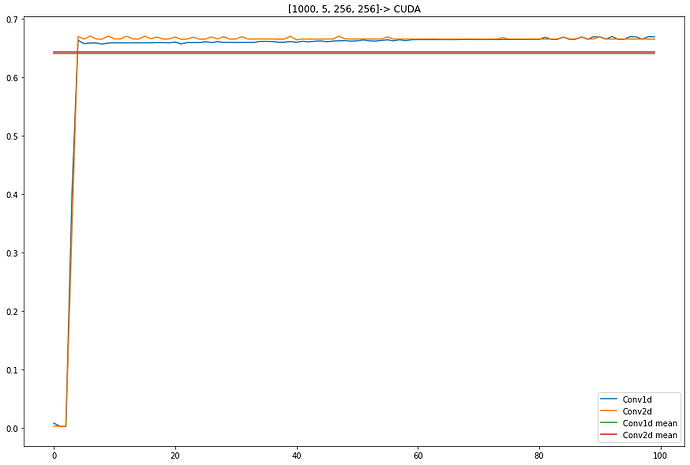

I think so. I ran a few experiments (since I could not work out more from the source code), and it seems I was correct about the `_ConvNd` idea. Here are some images of my experiments. They may be wrong or not fully adequate, but based on the source code in the previous post, I think the conclusion holds.

Note that because of limited computing power, I used the same configuration for both Conv1d and Conv2d and only changed the input size to get bigger dimensions, which you can see in the title of each graph. In the CUDA case, however, I increased the batch size to get more reliable values.
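If anyone wants to reproduce this kind of comparison, here is a minimal timing sketch. It is not the exact script behind my graphs: the channel counts, kernel size, input lengths, batch sizes and the helper name `time_module` are all hypothetical choices. The main points are to run a few warm-up passes and to call `torch.cuda.synchronize()` around the timed loop on GPU, because CUDA kernels launch asynchronously.

```python
import time

import torch
import torch.nn as nn


def time_module(module, x, iters=20, warmup=5):
    """Hypothetical helper: average seconds per forward pass."""
    with torch.no_grad():
        for _ in range(warmup):
            module(x)  # warm-up passes (allocator, kernel selection)
        if x.is_cuda:
            torch.cuda.synchronize()  # wait for queued kernels before timing
        start = time.perf_counter()
        for _ in range(iters):
            module(x)
        if x.is_cuda:
            torch.cuda.synchronize()  # make sure all timed kernels finished
    return (time.perf_counter() - start) / iters


device = "cuda" if torch.cuda.is_available() else "cpu"
batch = 64 if device == "cuda" else 8  # assumed sizes: bigger batch on GPU

# Assumed configuration: Conv2d uses a height-1 kernel so both layers do the
# same amount of work on inputs of matching length.
conv1 = nn.Conv1d(16, 32, kernel_size=3, padding=1).to(device)
conv2 = nn.Conv2d(16, 32, kernel_size=(1, 3), padding=(0, 1)).to(device)

results = {}
for length in (64, 1024, 8192):
    x1 = torch.randn(batch, 16, length, device=device)  # (N, C, L)
    x2 = x1.unsqueeze(2)                                 # (N, C, 1, L)
    t1, t2 = time_module(conv1, x1), time_module(conv2, x2)
    results[length] = (t1, t2)
    print(f"L={length}: Conv1d {t1 * 1e3:.3f} ms, Conv2d {t2 * 1e3:.3f} ms")
```

Absolute numbers will depend heavily on the hardware and on backend settings such as `torch.backends.cudnn.benchmark`, so only the relative trend between the two layers is worth comparing.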

Results on CPU:


Results on GPU:

What I found is that for small tensors Conv2d is faster, while for bigger tensors the two perform about the same, although Conv2d is still slightly faster in every case.
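A related fact that is consistent with these results, though it does not prove how PyTorch dispatches internally, is that a 1-D convolution is exactly a 2-D convolution over an input with a dummy height of 1 and a kernel of height 1. A small sketch checking this with `torch.nn.functional` (the helper name `conv1d_via_conv2d` is hypothetical):

```python
import torch
import torch.nn.functional as F


def conv1d_via_conv2d(x, weight, bias=None, stride=1, padding=0):
    """Hypothetical helper: compute conv1d by going through conv2d."""
    # x: (N, C_in, L) -> (N, C_in, 1, L); weight: (C_out, C_in, K) -> (C_out, C_in, 1, K)
    out = F.conv2d(x.unsqueeze(2), weight.unsqueeze(2), bias,
                   stride=(1, stride), padding=(0, padding))
    # Drop the dummy height dimension: (N, C_out, 1, L_out) -> (N, C_out, L_out)
    return out.squeeze(2)


torch.manual_seed(0)
x = torch.randn(4, 3, 50)
w = torch.randn(8, 3, 5)
b = torch.randn(8)

ref = F.conv1d(x, w, b, stride=2, padding=1)
alt = conv1d_via_conv2d(x, w, b, stride=2, padding=1)
print(ref.shape)  # torch.Size([4, 8, 24])
print(torch.allclose(ref, alt, atol=1e-5))  # True
```

The output length follows the usual formula `(50 + 2*1 - 5) // 2 + 1 = 24`, and both paths give the same values up to floating-point rounding.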

I hope it helps.