Hi Guys,

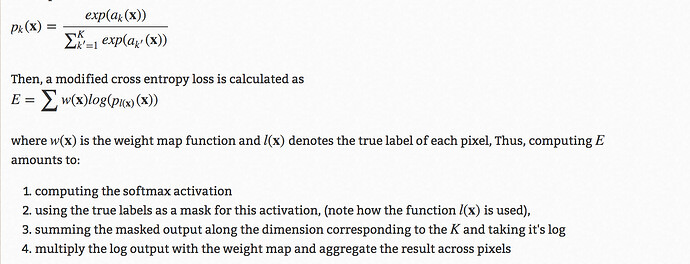

There is a famous trick in u-net architecture to use custom weight maps to increase accuracy.Below are the details of it-

Now, by asking here and at multiple other place, I get to know about 2 approaches.I want to know which one is correct or is there any other right approach which is more correct?

1)First is to use torch.nn.Functional method in the training loop-

loss = torch.nn.functional.cross_entropy(output, target, w)

where w will be the calculated custom weight.

2)Second is to use reduction='none' in the calling of loss function outside the training loop

criterion = torch.nn.CrossEntropy(reduction='none')

and then in the training loop multiplying with the custom weight-

gt # Ground truth, format torch.long

pd # Network output

W # per-element weighting based on the distance map from UNet

loss = criterion(pd, gt)

loss = W*loss # Ensure that weights are scaled appropriately

loss = torch.sum(loss.flatten(start_dim=1), axis=0) # Sums the loss per image

loss = torch.mean(loss) # Average across a batch

What’s the right way to do things in PyTorch in this case