Problem

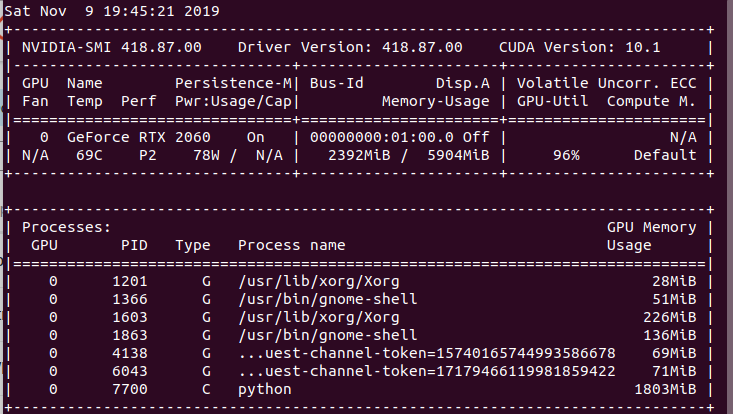

As can be seen from the snapshot above, I noticed two things:

- Although I use less than half of the GPU memory (2392 MiB of 5904 MiB), the Volatile GPU-Util is almost 100%.

- The maximum batch size I can use is 128. If I make it larger (e.g., 256), my code fails with the following error:

`RuntimeError: CUDA out of memory. Tried to allocate 98.00 MiB (GPU 0; 5.77 GiB total capacity; 4.11 GiB already allocated; 18.81 MiB free; 395.30 MiB cached)`
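Instead of trying batch sizes by hand (128 works, 256 fails), the largest size that fits can be searched for automatically. This is a minimal sketch, assuming memory use grows monotonically with batch size. `find_max_batch_size` and `run_step` are hypothetical names: `run_step(batch_size)` is assumed to run one forward/backward pass at that batch size. The sketch relies on the OOM surfacing as a `RuntimeError` whose message contains "out of memory", as in the error above; any other `RuntimeError` is re-raised.

```python
def find_max_batch_size(run_step, start=1, limit=4096):
    """Return the largest batch size in [start, limit] for which run_step succeeds,
    or None if even `start` runs out of memory. Assumes (hypothetically) that if a
    batch size fits in memory, every smaller batch size fits too."""

    def fits(batch_size):
        try:
            run_step(batch_size)
            return True
        except RuntimeError as err:
            # Only treat out-of-memory errors as "does not fit"; re-raise real bugs.
            if "out of memory" in str(err):
                return False
            raise

    if not fits(start):
        return None

    good = start  # largest size known to fit
    bad = None    # smallest size known to fail

    # Phase 1: keep doubling until a size fails or the limit is reached.
    while good < limit:
        candidate = min(good * 2, limit)
        if fits(candidate):
            good = candidate
        else:
            bad = candidate
            break

    if bad is None:
        return good  # even `limit` fits

    # Phase 2: binary search strictly between the last success and the first failure.
    while bad - good > 1:
        middle = (good + bad) // 2
        if fits(middle):
            good = middle
        else:
            bad = middle
    return good
```

In a real PyTorch loop you would probably also release cached memory after a failed attempt (for example with `torch.cuda.empty_cache()`) before trying the next size.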

So my questions are:

- Why is the GPU utilization at 100% when I use only half of the GPU memory?

- How can I make the most of my GPU to get faster training and (perhaps) better training results?
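To watch both numbers from the snapshot while training, they can be read from `nvidia-smi`. As far as I understand nvidia-smi's documentation of the `utilization.gpu` query field, it is the percentage of time during the last sample period in which at least one kernel was running, so it measures how busy the GPU is over time rather than how much memory or how many cores are in use. The sketch below assumes `nvidia-smi` is on the `PATH`; `parse_gpu_stats` and `read_gpu_stats` are hypothetical helper names, and fields reported as `[N/A]` on some GPUs are not handled.

```python
import subprocess

# Query GPU-Util and memory as plain numbers, one CSV line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Parse lines like '100, 2392, 5904' into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (int(field.strip()) for field in line.split(","))
        stats.append({
            "util_pct": util,        # % of time a kernel was executing
            "mem_used_mib": used,
            "mem_total_mib": total,
            "mem_pct": 100.0 * used / total,
        })
    return stats


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed stats (requires an NVIDIA driver)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_stats(out)
```

Calling `read_gpu_stats()` every few training steps would show whether utilization stays near 100% independently of how much memory a given batch size uses.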

Thank you in advance!