I have run into the exact problem as you did, and I end up solving it after I thoroughly figured it out.

Though you might not need my help anymore, I think I’d better write it down so that people running into the same problem in the future can use my answer.

I’m gonna put the solution at the top, and then explain why this “loss not decreasing” error occurs so often and what it actually is later in my post. If you just want the solution, just check the following few lines.

SOLUTIONS:

- Check if you pass the softmax into the CrossEntropy loss. If you do, correct it. For more information, check @rasbt’s answer above.

- Use a smaller learning rate in the optimizer, or add a learning rate scheduler which will decrease the learning rate automatically during training.

- Use SGD optimizer instead of Adam.

EXPLANATION:

In the above piece of code, my when I print my loss it does not decrease at all. It always stays the

same equal to 2.30

epoch 0 loss = 2.308579206466675

epoch 1 loss = 2.297269344329834

epoch 2 loss = 2.3083386421203613

epoch 3 loss = 2.3027005195617676

epoch 4 loss = 2.304455518722534

epoch 5 loss = 2.305694341659546

…

What is this error? Why did the loss stop decreasing and go slightly up and down in a small range?

It is not difficult to understand that the model has stuck in a local minima, which is a pretty poor one, since the performance of the model is basically equal to guessing randomly (actually, precisely equal to guessing randomly). Also, it’s a common one, since so many people have run into the same minima as you and I did.

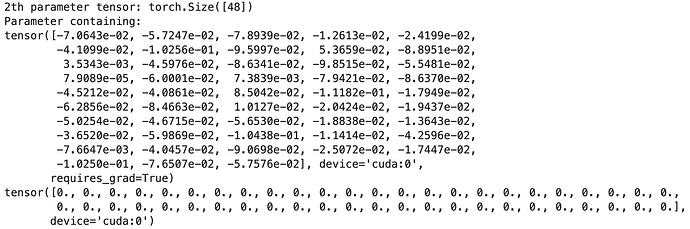

So I check the parameters of a model that has been stuck in the minima, turns out that they are almost all negative, and their derivatives are all zero!

for i, para in enumerate(model.parameters()):

print(f'{i + 1}th parameter tensor:', para.shape)

print(para)

print(para.grad)

What’s actually happening: negative parameters and ReLU activation, altogether cause the outputs of the middle layers to be all zeros, which means the parameters’ gradients become zero too (think about the chain rule). That’s why the parameters stop upgrading and the output is just a random guess. In this case, the bias of the last fully connected layers is the only parameter tensor that could have a “normal” gradient (which means not all zeros), so it gets updated every batch, causing the small ups and downs.

Therefore, one of the main solutions to this problem is to use a smaller learning rate so that our parameters don’t easily end up in such an “extreme” or “remote” area in the parameters space.