Hello everyone. I am doing the last cs231n assignment (self-supervised learning) and have run into a problem:

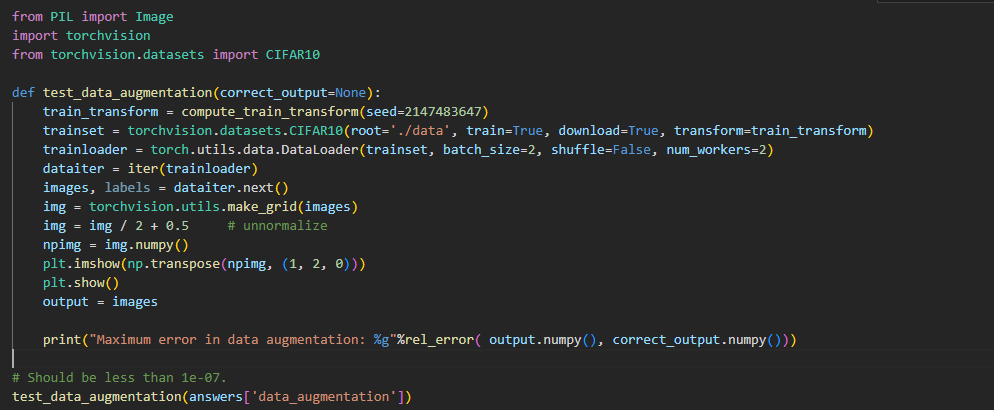

Here is the code:

The error is:

…

return F.normalize(tensor, self.mean, self.std, self.inplace)

File "c:\Users\Admin\Anaconda3\lib\site-packages\torchvision\transforms\functional.py", line 363, in normalize

tensor.sub_(mean).div_(std)

RuntimeError: output with shape [1, 32, 32] doesn't match the broadcast shape [3, 32, 32]

…

I know this issue is related to the transform. Here is the transform (it is also the required solution for the assignment, so I don't want to change it):

…

    import random

    import torch
    import torchvision.transforms as transforms


    def compute_train_transform(seed=123456):
        """
        This function returns a composition of data augmentations to a single training image.
        Complete the following lines. Hint: look at available functions in torchvision.transforms
        """
        # Seed both Python's and PyTorch's random number generators.
        random.seed(seed)
        torch.random.manual_seed(seed)

        # Transformation that applies color jitter with brightness=0.4, contrast=0.4, saturation=0.4, and hue=0.1
        color_jitter = transforms.ColorJitter(0.4, 0.4, 0.4, 0.1)

        train_transform = transforms.Compose([
            # Step 1: Randomly resize and crop to 32x32.
            transforms.RandomResizedCrop(32),
            # Step 2: Horizontally flip the image with probability 0.5
            transforms.RandomHorizontalFlip(p=0.5),
            # Step 3: With a probability of 0.8, apply color jitter (you can use "color_jitter" defined above).
            transforms.RandomApply(torch.nn.ModuleList([color_jitter]), p=0.8),
            # Step 4: With a probability of 0.2, convert the image to grayscale
            transforms.RandomApply(torch.nn.ModuleList([transforms.Grayscale()]), p=0.2),
            transforms.ToTensor(),
            transforms.Normalize([0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010])])

        return train_transform

…
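To make the shape mismatch concrete, here is a minimal pure-Python sketch of the broadcasting rule that the error message seems to refer to. It assumes that `Normalize` reshapes the per-channel mean to `(3, 1, 1)` and subtracts it in place, as the `tensor.sub_(mean)` line in the traceback suggests, and that `transforms.Grayscale()` with its default arguments returns a single-channel image. The helper names `broadcast_shape` and `fits_in_place` are made up for illustration and are not part of torch or torchvision.

```python
def broadcast_shape(a, b):
    """Broadcast two shapes with the usual right-aligned NumPy/PyTorch rule."""
    n = max(len(a), len(b))
    # Left-pad the shorter shape with 1s so both have the same rank.
    a = (1,) * (n - len(a)) + tuple(a)
    b = (1,) * (n - len(b)) + tuple(b)
    out = []
    for x, y in zip(a, b):
        if x != y and 1 not in (x, y):
            raise ValueError(f"shapes {a} and {b} are not broadcastable")
        # A dimension of size 1 stretches to match the other operand.
        out.append(y if x == 1 else x)
    return tuple(out)


def fits_in_place(tensor_shape, other_shape):
    """An in-place op cannot resize its output, so the broadcast result
    must have exactly the shape of the tensor being modified."""
    return broadcast_shape(tensor_shape, other_shape) == tuple(tensor_shape)


mean_shape = (3, 1, 1)   # assumed: three per-channel means viewed as (C, 1, 1)
gray_image = (1, 32, 32)  # assumed: output of Grayscale() followed by ToTensor()
rgb_image = (3, 32, 32)   # image that skipped the grayscale step

print(broadcast_shape(gray_image, mean_shape))  # (3, 32, 32)
print(fits_in_place(gray_image, mean_shape))    # False
print(fits_in_place(rgb_image, mean_shape))     # True
```

If this reading is right, the failure would only show up on the samples where the grayscale step fires (probability 0.2), which would explain why it appears intermittently. Keeping three channels in that step, for example with `transforms.Grayscale(num_output_channels=3)` or `transforms.RandomGrayscale(p=0.2)`, might avoid it, though this sketch does not verify that against the assignment's expected solution.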

I didn't have this issue before. My current versions are:

torch 1.11.0

torchvision 0.12.0

Does anyone know how to solve this issue?

Thank you very much