Over the last two days, I have repeatedly hit `RuntimeError: CUDA error: out of memory` when loading a PyTorch model.

I didn't change any code, but the error appeared out of nowhere.

The same script worked just an hour ago.

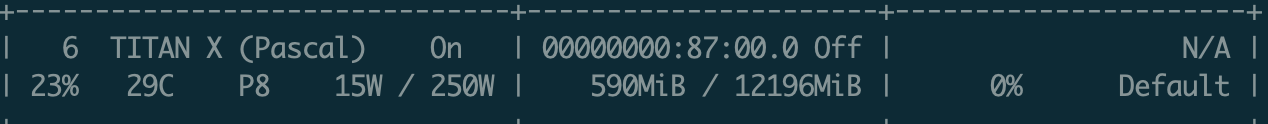

I checked the GPU with nvidia-smi, and it also seems fine.
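
To make the nvidia-smi check less dependent on eyeballing the table, per-GPU memory can be read programmatically. This is only a rough sketch: it assumes `nvidia-smi` is on the PATH, the helper names `parse_gpu_memory` and `query_gpu_memory` are made up for illustration, and the sample numbers are hypothetical, not taken from my machine.

```python
import subprocess

# Ask nvidia-smi for machine-readable CSV: GPU index, used and total memory in MiB.
QUERY_CMD = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' rows (values in MiB) into a list of dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, used, total = (int(field.strip()) for field in line.split(","))
        gpus.append({"index": index, "used_mib": used, "free_mib": total - used})
    return gpus


def query_gpu_memory():
    """Run nvidia-smi and return the parsed per-GPU memory usage."""
    output = subprocess.check_output(QUERY_CMD, universal_newlines=True)
    return parse_gpu_memory(output)


# Hypothetical sample output for two GPUs, used here instead of a live query.
sample = "0, 10989, 11178\n1, 10, 11178\n"
for gpu in parse_gpu_memory(sample):
    print(gpu)
```

On a shared machine, a GPU with very little free memory in this output would suggest that another process is holding it, even if my own script has not changed.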

    Traceback (most recent call last):
      File "test.py", line 39, in <module>
        top_model.load_state_dict(torch.load('pkls/True_fuck_feature_top_model_basic_loss_loss_10.pkl'))
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/serialization.py", line 367, in load
        return _load(f, map_location, pickle_module)
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/serialization.py", line 538, in _load
        result = unpickler.load()
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/serialization.py", line 504, in persistent_load
        data_type(size), location)
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/serialization.py", line 113, in default_restore_location
        result = fn(storage, location)
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/serialization.py", line 95, in _cuda_deserialize
        return obj.cuda(device)
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/_utils.py", line 76, in _cuda
        return new_type(self.size()).copy_(self, non_blocking)
      File "/home1/cbx/anaconda3/envs/torch_1.0/lib/python3.7/site-packages/torch/cuda/__init__.py", line 496, in _lazy_new
        return super(_CudaBase, cls).__new__(cls, *args, **kwargs)
    RuntimeError: CUDA error: out of memory
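
The traceback ends in `_cuda_deserialize`, so `torch.load` appears to be restoring the saved tensors directly onto a CUDA device while reading the file. One thing that may be worth trying is loading the checkpoint onto the CPU first via the `map_location` argument of `torch.load`. The following is a minimal sketch, assuming the file holds a plain `state_dict`; the helper `load_state_dict_on_cpu` and the small `nn.Linear` stand-in for `top_model` are hypothetical and only there to make the example runnable.

```python
import os
import tempfile

import torch
import torch.nn as nn


def load_state_dict_on_cpu(model, checkpoint_path):
    """Load a checkpoint into host memory first, then copy it into `model`."""
    # map_location="cpu" remaps every saved storage to the CPU, so torch.load
    # itself does not allocate memory on any GPU.
    state_dict = torch.load(checkpoint_path, map_location="cpu")
    model.load_state_dict(state_dict)
    return model


# Hypothetical stand-in for top_model: save a tiny state_dict and reload it.
demo_model = nn.Linear(4, 2)
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "demo_state_dict.pkl")
    torch.save(demo_model.state_dict(), demo_path)
    restored = load_state_dict_on_cpu(nn.Linear(4, 2), demo_path)

print(all(p.device.type == "cpu" for p in restored.parameters()))
```

After loading this way, the model can be moved to whichever GPU actually has free memory with `model.to(device)`. This does not explain why the memory is full, it only avoids the allocation during deserialization.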