Dear All,

I am trying to understand what I am doing wrong. I have several multi-label data sets, all of which have the same structure on the local file system (one subfolder per label), which is compatible with torch, e.g.:

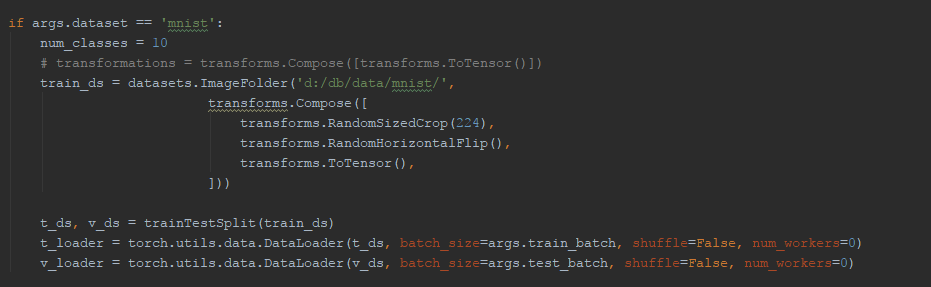

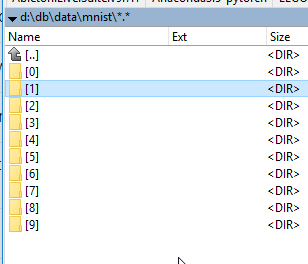
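As far as I understand torchvision's `ImageFolder`, each immediate subfolder of the root is treated as one class, and the integer labels are assigned by sorting the folder names. The stdlib-only sketch below mimics that mapping so it can be checked without torch; the helper `folder_class_to_idx` and the folder names are hypothetical, not part of torchvision.

```python
import os
import tempfile


def folder_class_to_idx(root):
    """Hypothetical helper: map each immediate subfolder of `root` to an integer label.

    Subfolder names are sorted as strings and numbered 0..C-1; plain files are ignored.
    """
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


# Demo on a throwaway directory with hypothetical class folders.
with tempfile.TemporaryDirectory() as demo_root:
    for name in ["cat", "airplane", "dog"]:
        os.makedirs(os.path.join(demo_root, name))
    print(folder_class_to_idx(demo_root))  # {'airplane': 0, 'cat': 1, 'dog': 2}
```

The sort is lexicographic, so folders named `1`, `2`, ..., `10` would be ordered `1, 10, 2, ...`. The labels are still consistent, just not numerically equal to the folder names.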

For the MNIST data set, which has 10 different labels and hence 10 folders:

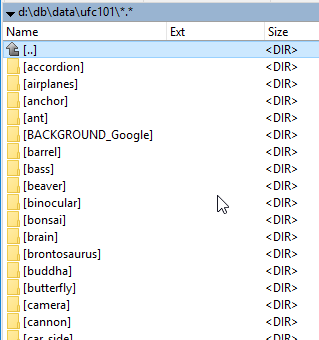

For the UCF101 data set, which has 102 different labels and hence 102 folders:

For reference, the code for this post is here:

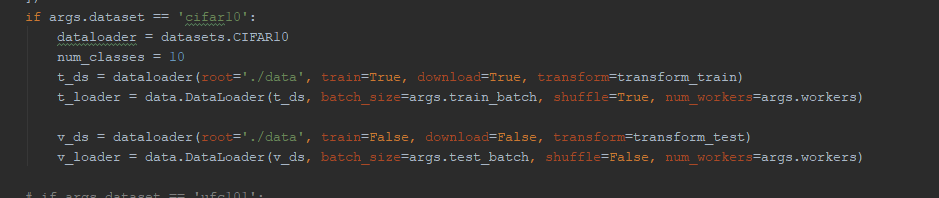

When using the code on CIFAR-10, which is downloaded automatically, e.g.:

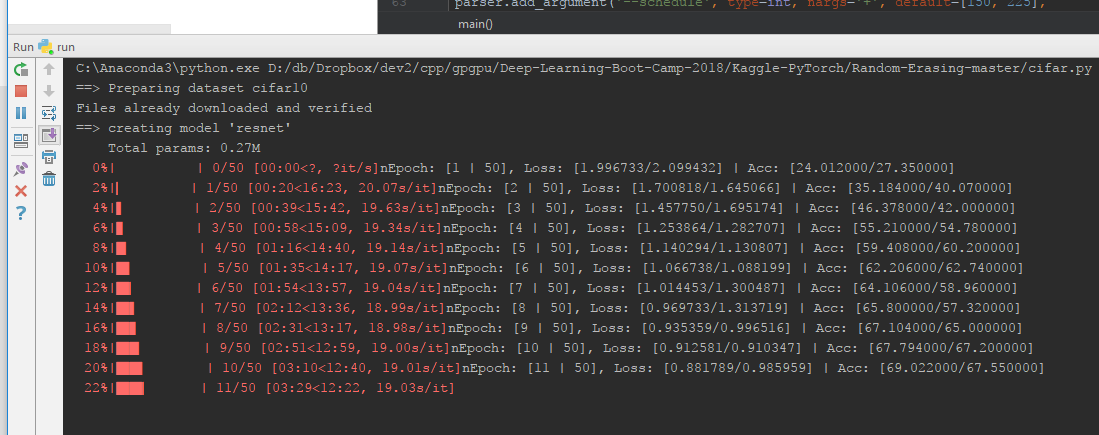

Training works well: loss decreases and accuracy increases over time.

However, for MNIST, UCF101, or any other data set that I store locally, the model does not learn at all.
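One difference worth checking: `ImageFolder` lists its samples class by class (all of folder 0, then all of folder 1, ...), while the CIFAR-10 files are already in mixed order. If the training `DataLoader` is created without `shuffle=True` (its default is `False`), every batch from a folder-based data set contains mostly a single class, which can stop the model from learning. The stdlib sketch below only simulates this with plain label lists; `make_batches` is a hypothetical stand-in for an unshuffled loader.

```python
import random


def make_batches(labels, batch_size):
    """Hypothetical stand-in for a DataLoader with shuffle=False: consecutive slices."""
    return [labels[i:i + batch_size] for i in range(0, len(labels), batch_size)]


# Hypothetical folder-style data set: 3 classes x 8 samples, stored class by class.
sorted_labels = [c for c in range(3) for _ in range(8)]

unshuffled = make_batches(sorted_labels, batch_size=4)
print([sorted(set(b)) for b in unshuffled])  # [[0], [0], [1], [1], [2], [2]]

# Shuffling the sample order first (what shuffle=True does each epoch) mixes the classes.
rng = random.Random(0)
shuffled_labels = sorted_labels[:]
rng.shuffle(shuffled_labels)
shuffled = make_batches(shuffled_labels, batch_size=4)
print([sorted(set(b)) for b in shuffled])
```

In torch terms the corresponding change would be `DataLoader(train_set, batch_size=..., shuffle=True)` for the training loader.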

Questions:

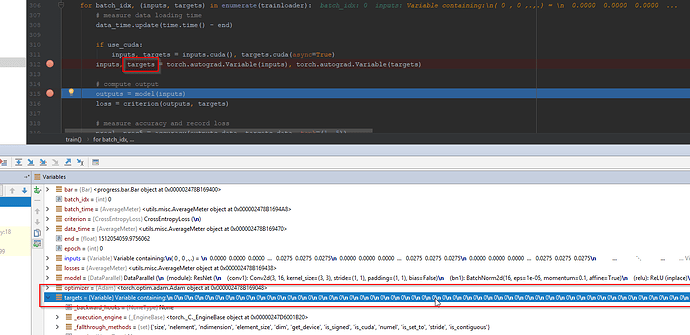

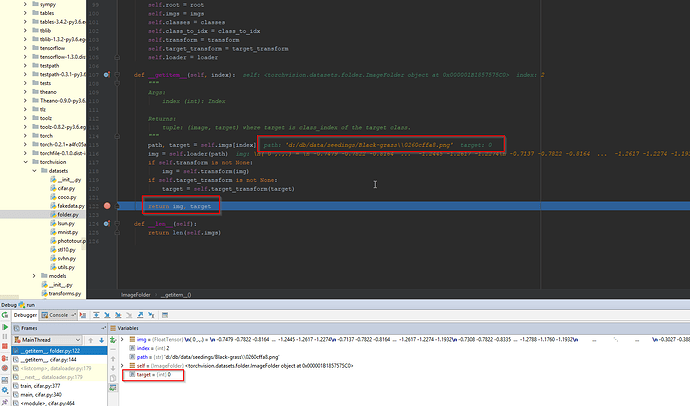

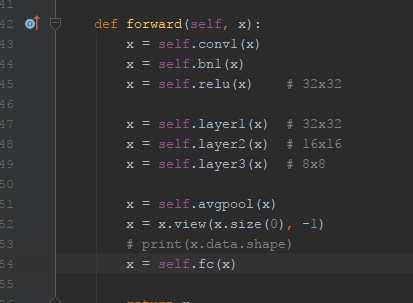

- Is it true that the default torchvision `ImageFolder` loader takes care of the labels for classification, without the need to one-hot encode them? I am using `CrossEntropyLoss` and returning the output of the final FC layer from `forward`:

- If I am doing it right, where is the mistake in my code? Why does CIFAR-10 work well, while local data sets that conform to the multi-label folder convention do not?
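Regarding the first question, my reading of the documented behavior is that `nn.CrossEntropyLoss` takes raw logits of shape `(N, C)` and integer class indices of shape `(N,)`, which is what `ImageFolder` yields, so no one-hot encoding or softmax is needed. `C` must equal the number of class folders (e.g. `len(train_set.classes)`). A minimal sketch with random tensors; the 102-class size and the targets are illustrative assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

num_classes = 102  # assumption: should equal len(train_set.classes) for an ImageFolder set
batch_size = 4

# Raw scores straight from the final FC layer: no softmax, no one-hot.
logits = torch.randn(batch_size, num_classes, requires_grad=True)
# ImageFolder-style targets: one integer class index per sample (int64).
targets = torch.tensor([0, 5, 101, 42])

criterion = nn.CrossEntropyLoss()
loss = criterion(logits, targets)
loss.backward()

# Same value by hand: mean over samples of -log_softmax(logits)[target].
manual = -torch.log_softmax(logits, dim=1)[torch.arange(batch_size), targets].mean()
print(loss.item(), manual.item())

# If the final layer has fewer outputs than there are class folders
# (e.g. a CIFAR-10 head with 10 outputs), targets >= 10 are invalid.
too_small_head = torch.randn(batch_size, 10)
try:
    criterion(too_small_head, targets)
    mismatch_detected = False
except (IndexError, RuntimeError):
    mismatch_detected = True
print(mismatch_detected)
```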

Many thanks,