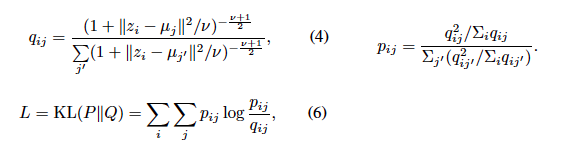

I want to implement this loss function to evaluate my network. Can someone suggest how to implement it?

I tried this:

```python
import torch

class Cluster_Assignment_Hardening_Loss(torch.nn.Module):

    def __init__(self):
        super(Cluster_Assignment_Hardening_Loss, self).__init__()

    def forward(self, encode_output, centroids):
        # loss computation (omitted here)
        ...
```
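For reference, here is a minimal sketch of a complete module. It assumes the loss in the image is the DEC-style cluster assignment hardening loss: soft assignments `q_ij = (1 + ||z_i - mu_j||^2 / alpha)^(-(alpha + 1) / 2)` normalised over clusters, a sharpened target `p_ij = (q_ij^2 / f_j) / sum_j' (q_ij'^2 / f_j')` with `f_j = sum_i q_ij`, and `L = KL(P || Q) = sum_i sum_j p_ij * log(p_ij / q_ij)`. If the image shows a different formula, the kernel or the target would need adjusting. The class name, the helper methods `soft_assign` and `target_distribution`, the `alpha` parameter, averaging over the batch, and detaching the target are all my own assumptions, not something stated above.

```python
import torch
import torch.nn as nn


class ClusterAssignmentHardeningLoss(nn.Module):
    """Sketch of a DEC-style cluster assignment hardening loss (assumed form).

    Assumes: Student's t soft assignments Q, a sharpened target P built
    from Q, and the loss KL(P || Q) averaged over the batch.
    """

    def __init__(self, alpha: float = 1.0):
        super().__init__()
        self.alpha = alpha  # degrees of freedom of the Student's t kernel (assumed)

    def soft_assign(self, z: torch.Tensor, centroids: torch.Tensor) -> torch.Tensor:
        # Squared Euclidean distance between every embedding and every centroid:
        # (N, 1, D) - (1, K, D) -> (N, K, D) -> sum over D -> (N, K)
        dist_sq = ((z.unsqueeze(1) - centroids.unsqueeze(0)) ** 2).sum(dim=2)
        # Unnormalised Student's t similarities, computed out-of-place
        q = (1.0 + dist_sq / self.alpha).pow(-(self.alpha + 1.0) / 2.0)
        # Normalise over clusters so each row sums to 1
        return q / q.sum(dim=1, keepdim=True)

    @staticmethod
    def target_distribution(q: torch.Tensor) -> torch.Tensor:
        # Square q and divide by the soft cluster frequencies f_j = sum_i q_ij
        weight = q.pow(2) / q.sum(dim=0, keepdim=True)
        # Renormalise over clusters so each row sums to 1
        return weight / weight.sum(dim=1, keepdim=True)

    def forward(self, encode_output: torch.Tensor, centroids: torch.Tensor) -> torch.Tensor:
        q = self.soft_assign(encode_output, centroids)
        # Treat the target as a constant (assumption): no gradient flows through P
        p = self.target_distribution(q).detach()
        # KL(P || Q) = sum_j p_ij * (log p_ij - log q_ij), averaged over samples i
        return (p * (p.log() - q.log())).sum(dim=1).mean()


if __name__ == "__main__":
    torch.manual_seed(0)
    z = torch.randn(8, 4, requires_grad=True)    # stand-in for encoder output
    centroids = nn.Parameter(torch.randn(3, 4))  # 3 hypothetical clusters
    loss = ClusterAssignmentHardeningLoss()(z, centroids)
    loss.backward()
    print(loss.item(), centroids.grad.shape)
```

Everything is computed with vectorised, out-of-place tensor operations, so nothing is written into a preallocated tensor, and gradients reach both the encoder output and the centroids.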

Can someone suggest a clean way to implement this?

I am running into errors such as:

```
RuntimeError: leaf variable has been moved into the graph interior
```
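Without the code that produced the error this is only a guess, but errors of this kind usually point to an in-place operation on a tensor that autograd already tracks as a leaf, for example filling a preallocated tensor element by element or overwriting the centroids in place. The exact wording differs between PyTorch versions. The sketch below contrasts that pattern with a safer one; the tensor shapes and the `init` placeholder (standing in for, say, k-means centroids) are hypothetical.

```python
import torch
import torch.nn as nn

# Risky pattern: writing in place into a leaf tensor that requires grad.
centroids = torch.zeros(3, 4, requires_grad=True)
try:
    centroids[0] = torch.ones(4)  # in-place write into a view of a grad-requiring leaf
except RuntimeError as err:
    print("in-place write failed:", err)

# Safer pattern: register the centroids as a parameter and copy any initial
# values under torch.no_grad(), so the copy is not recorded in the graph.
init = torch.randn(3, 4)  # hypothetical placeholder for real initial centroids
centroids = nn.Parameter(torch.empty(3, 4))
with torch.no_grad():
    centroids.copy_(init)

# Compute per-sample quantities out-of-place (vectorised) instead of
# assigning into a preallocated tensor inside a loop.
z = torch.randn(5, 4, requires_grad=True)
dist_sq = ((z.unsqueeze(1) - centroids.unsqueeze(0)) ** 2).sum(dim=2)  # shape (5, 3)
dist_sq.mean().backward()
print(centroids.is_leaf, centroids.grad.shape)
```

If the centroids should be learned, passing them to the optimizer as an `nn.Parameter` lets it update them without any manual in-place writes.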