def train(model, train_dataset):
    print("Starting training ........")
    dataloader = DataLoader(train_dataset, batch_size=8)
    train_loss = 0.0
    batch_count = 0
    model.train()
    loading = time.time()
    for batch in dataloader:
        print("data loader time :", time.time() - loading)
        measure = time.time()
        optimizer.zero_grad()

This is part of my train function.

The problem is that the line 'for batch in dataloader' takes much longer than I expected: about 15 seconds, even though the batch size is only 8.
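To check that the 15 seconds really is spent waiting for a batch, the fetch can be timed separately from the loop body. In the snippet above, loading is set once before the loop, so unless it is reset at the end of each iteration (that part is not shown), later readings would also include the training step. Below is a minimal sketch of per-batch timing. The helper name timed_batches is hypothetical and not part of PyTorch; it only assumes the loader is an iterable, which a DataLoader is.

```python
import time


def timed_batches(loader):
    """Yield (batch, seconds_waited) for every batch of an iterable.

    Hypothetical helper: only the wait for the next batch is measured,
    not the time the caller spends inside the loop body.
    """
    start = time.perf_counter()
    iterator = iter(loader)  # iterator setup is counted toward the first batch
    while True:
        try:
            batch = next(iterator)
        except StopIteration:
            return
        waited = time.perf_counter() - start
        yield batch, waited
        # Execution resumes here only after the caller's loop body has run,
        # so the clock restarts just before the next fetch.
        start = time.perf_counter()


# Usage sketch, with the dataloader from the train function:
# for batch, waited in timed_batches(dataloader):
#     print("data loader time :", waited)
#     ...  # forward pass, backward pass, optimizer step
```

If only the first reading is large, the cost is probably in setting up the iterator; if every reading is large, the cost is more likely per sample.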

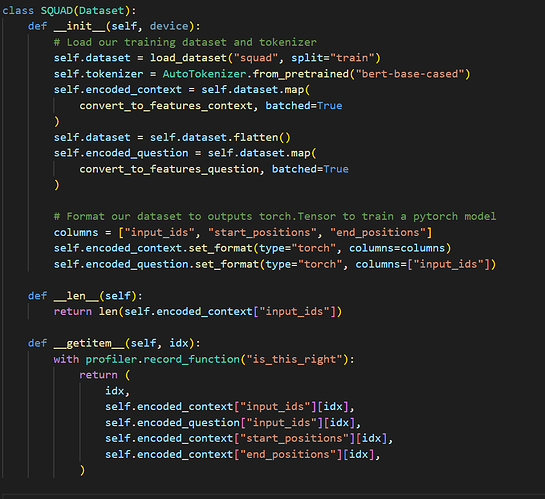

This image shows my custom dataset class.

I don't think the __getitem__ function in the custom dataset does much.

self.encoded_context["input_ids"][idx], self.encoded_question["input_ids"][idx], self.encoded_context["start_positions"][idx] and self.encoded_context["end_positions"][idx] have shapes (batch=8, 200), (batch=8, 120), (batch=8, 1) and (batch=8, 1) respectively.

In addition, the tensors that the __getitem__ function returns don't look that big.

I thought the __init__ function in the custom dataset would handle the preprocessing, so __getitem__ would not take that long.
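One way to test the assumption that __getitem__ is cheap would be to time it directly, outside the DataLoader. This is a minimal sketch, assuming the dataset supports len() and integer indexing; time_getitem is a hypothetical helper name, not a PyTorch function.

```python
import time


def time_getitem(dataset, num_samples=8):
    """Return the seconds taken by each dataset[idx] call.

    Hypothetical helper: indexes the first num_samples items
    (or fewer if the dataset is shorter) and times each call.
    """
    timings = []
    for idx in range(min(num_samples, len(dataset))):
        start = time.perf_counter()
        _ = dataset[idx]  # the same call the DataLoader makes per sample
        timings.append(time.perf_counter() - start)
    return timings


# Usage sketch, with the train_dataset passed to train():
# per_item = time_getitem(train_dataset, num_samples=8)
# print("per-item seconds:", per_item, "total:", sum(per_item))
```

With the default num_workers=0, the DataLoader fetches the samples one by one in the main process. So if eight calls add up to something close to 15 seconds, the time is being spent inside __getitem__ rather than in the DataLoader itself.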

Because the dataloader spends 15 seconds producing each batch, I don't think I am using the GPU efficiently, and this seems to be the bottleneck.

How can I solve this?