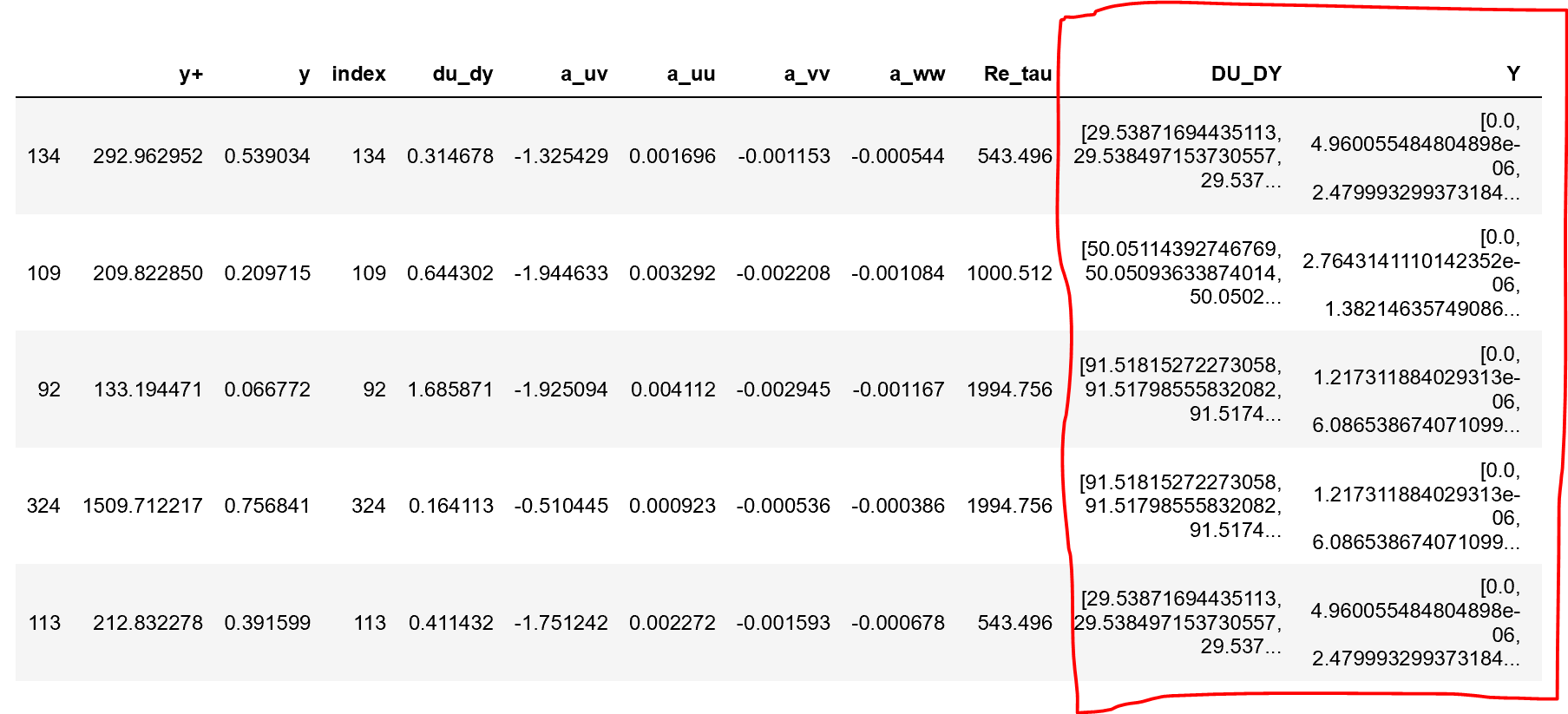

print(self.inputs) has a shape of (665, 5) and the following structure:

[[tensor([292.934]) tensor([134], dtype=torch.int32)

tensor([1.1, 1.2, 1.3, 1.4, ..., 1.153]) # DU_DY, size of 153

tensor([2.1, 2.2, 2.3, 2.4, ..., 2.153]) # Y, size of 153

tensor([550.123])] # this Re_tau input determines size for DU_DY & Y

[tensor([50.433]) tensor([97], dtype=torch.int32)

tensor([1.1, 1.2, 1.3, 1.4, ..., 1.256]) # DU_DY, size of 256

tensor([2.1, 2.2, 2.3, 2.4, ..., 2.256]) # Y, size of 256

tensor([1000.23])] # Re_tau input determines size for DU_DY & Y

[another training data with different DU_DY & Y size]

...

[another training data with different DU_DY & Y size]]

print(self.target) has a shape of (665, 1) this structure:

[[tensor([-1.3254])]

[tensor([-1.9446])]

...

[tensor( [-1.4099])]

[tensor( [-1.4342])]

[tensor( [0.])]]

Before getting rid of the list() in class ChannelDataset: element in dataset ds_train has the same list format as ds_train[0]:

([tensor([292.934]), tensor([134], dtype=torch.int32), tensor([1.1, 1.2, 1.3, 1.4, ..., 1.153]), tensor([2.1, 2.2, 2.3, 2.4, ..., 2.153]), tensor([550.123])], tensor([-1.3254]))

If I get rid of the list() in ChannelDataset(), the error message upon execution is

TypeError Traceback (most recent call last)

<ipython-input-4-4e3f094c5224> in <module>

35

36 '''Fit model'''

---> 37 trainer.fit(df_train, df_val, df_test, batch_size=3, print_freq=1, max_epochs=1, earlystopping=False, patience=30)

38

39 scores = {'Train': evaluate(trainer, df_train),

~\Desktop\IACS\Code\Demo\utils_train.py in fit(self, df_train, df_val, df_test, batch_size, print_freq, max_epochs, min_epochs, earlystopping, patience)

246

247 # Loop over trainloader

--> 248 losses, nums = zip(*[self._step(data_batch, self._optimizer) for data_batch in trainloader])

249 train_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)

250

~\Desktop\IACS\Code\Demo\utils_train.py in <listcomp>(.0)

246

247 # Loop over trainloader

--> 248 losses, nums = zip(*[self._step(data_batch, self._optimizer) for data_batch in trainloader])

249 train_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)

250

~\Anaconda3\lib\site-packages\torch\utils\data\dataloader.py in __next__(self)

343

344 def __next__(self):

--> 345 data = self._next_data()

346 self._num_yielded += 1

347 if self._dataset_kind == _DatasetKind.Iterable and \

~\Anaconda3\lib\site-packages\torch\utils\data\dataloader.py in _next_data(self)

383 def _next_data(self):

384 index = self._next_index() # may raise StopIteration

--> 385 data = self._dataset_fetcher.fetch(index) # may raise StopIteration

386 if self._pin_memory:

387 data = _utils.pin_memory.pin_memory(data)

~\Anaconda3\lib\site-packages\torch\utils\data\_utils\fetch.py in fetch(self, possibly_batched_index)

45 else:

46 data = self.dataset[possibly_batched_index]

---> 47 return self.collate_fn(data)

~\Desktop\IACS\Code\Demo\utils_train.py in my_collate(batch)

198

199 def my_collate(batch):

--> 200 output = [(torch.tensor(dp[0]), torch.tensor(dp[1])) for dp in batch]

201

202 return output

~\Desktop\IACS\Code\Demo\utils_train.py in <listcomp>(.0)

198

199 def my_collate(batch):

--> 200 output = [(torch.tensor(dp[0]), torch.tensor(dp[1])) for dp in batch]

201

202 return output

TypeError: can't convert np.ndarray of type numpy.object_. The only supported types are: float64, float32, float16, int64, int32, int16, int8, uint8, and bool.

I guess now the ds_train is something like

[(array([tensor([292.934]), tensor([134], dtype=torch.int32), tensor([1.1, 1.2, 1.3, 1.4, ..., 1.153]), tensor([2.1, 2.2, 2.3, 2.4, ..., 2.153]), tensor([550.123])], dtype=object), tensor([-1.3254])),

(array(...)), ...]

I’m not too sure how to fix the dtype=object issue.