Thank you for your reply.

This is the part of the code that creates the train_loader variable:

    train_loader = data.DataLoader(
        __dataset__[args.dataset](
            config["train_dataset"],
            seed=config["seed"],
            is_train=True,
            multi_scale_pred=args.multi_scale_pred,
        ),
        batch_size=config["train_batch_size"],
        num_workers=8,
        shuffle=True,
        pin_memory=False,
    )

As you can see, the batch size is set by a parameter in a JSON file. The dataset has roughly 4000 images, and the batch size is 16.
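
If I understand the DataLoader correctly, those two numbers determine how many batches one epoch contains. Here is a minimal sketch of that arithmetic in plain Python; the helper name `batches_per_epoch` is my own, and 4000 is only my approximate image count, so the real value may differ slightly:

```python
import math


def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a map-style dataset yields in one epoch."""
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch is kept as a smaller batch.
    return math.ceil(num_samples / batch_size)


# Approximate image count from my dataset, batch size from the config.
print(batches_per_epoch(4000, 16))
```

So with about 4000 images I would expect about 250 iterations per epoch, since my loader does not set drop_last.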

Here is the part of the json file that provides this kind of info to the code:

"seed": 7,

"task1_classes": 2,

"task2_classes": 37,

"task1_weight": 1,

"task2_weight": 1,

"train_batch_size": 16,

"val_batch_size": 4,

"refinement":3,

"train_dataset": {

# we are not interested in the spacenet dataset

"spacenet":{

"dir": "/data/spacenet/train_crops/",

"file": "/data/spacenet/train_crops.txt",

"image_suffix": ".png",

"gt_suffix": ".png",

"crop_size": 256

},

"deepglobe":{

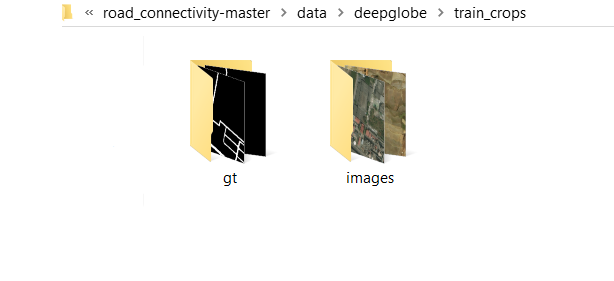

"dir": "C:/Users/.../road_connectivity-master/data/deepglobe/train_crops/",

"file": "C:/Users/.../road_connectivity-master/data/deepglobe/train_crops.txt",

"image_suffix": ".jpg",

"gt_suffix": ".png",

"crop_size": 256

},

"crop_size": 256,

"augmentation": true,

"mean" : "[70.95016901, 71.16398124, 71.30953645]",

"std" : "[ 34.00087859, 35.18201658, 36.40463264]",

"normalize_type": "Mean",

"thresh": 0.76,

"angle_theta": 10,

"angle_bin": 10

},
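
To show how I think these values reach the code, here is a small sketch that reads a shortened, hypothetical copy of the config with Python's standard json module. The string `config_text` is a stand-in I wrote for this post, not the real file, and I have not checked how the project itself parses the "mean" string, so that step is only my assumption:

```python
import json

# Hypothetical, shortened stand-in for the real config file.
# The values are copied from the excerpt in this post.
config_text = """
{
    "seed": 7,
    "train_batch_size": 16,
    "val_batch_size": 4,
    "train_dataset": {
        "crop_size": 256,
        "mean": "[70.95016901, 71.16398124, 71.30953645]"
    }
}
"""

config = json.loads(config_text)

# This is the value passed to DataLoader as batch_size.
train_batch_size = config["train_batch_size"]

# "mean" is stored as a string, so it needs a second parsing step
# (assumption: the project may do this differently).
mean = json.loads(config["train_dataset"]["mean"])

print(train_batch_size, mean)
```

Note that a `#` comment line like the one in the excerpt would make `json.loads` fail, so it is left out of this sketch.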

I am not an experienced developer, so please excuse me if I am missing something obvious here.