Hi there, I'm stuck on something very abnormal. I am training an image classifier with the following training function:

```python
import torch


def train(self, current_epoch=0, is_init=False, fold=0):
    self.logger.info('Current fold: %d' % (fold))
    self.model.train()

    # Step the LR scheduler once per epoch (skipped on the initial call).
    if not is_init:
        self.scheduler.step()

    self.logger.info('Current epoch learning rate: %e' % (self.optimizer.param_groups[0]['lr']))

    running_loss = 0.0
    running_acc = 0.0

    dataloader = self.dataloader[str(fold)]

    # Note: this Adam optimizer is created fresh on every call to train();
    # it is a separate object from self.optimizer, whose LR is logged above.
    optimizer = torch.optim.Adam(self.model.parameters(), lr=0.0001, weight_decay=0.0001)

    self.logger.info('Len of train loader: ' + str(len(dataloader['train'])))

    # enumerate(..., 1) numbers the batches starting from 1.
    for i, sample in enumerate(dataloader['train'], 1):
        self.logger.info("i: {}".format(i))

        sample = self.prepare(sample)
        images, labels = sample['img'], sample['label']

        optimizer.zero_grad()
        outputs = self.model(images)
        loss = self.loss_fn(outputs, labels)
        loss.backward()
        optimizer.step()
```

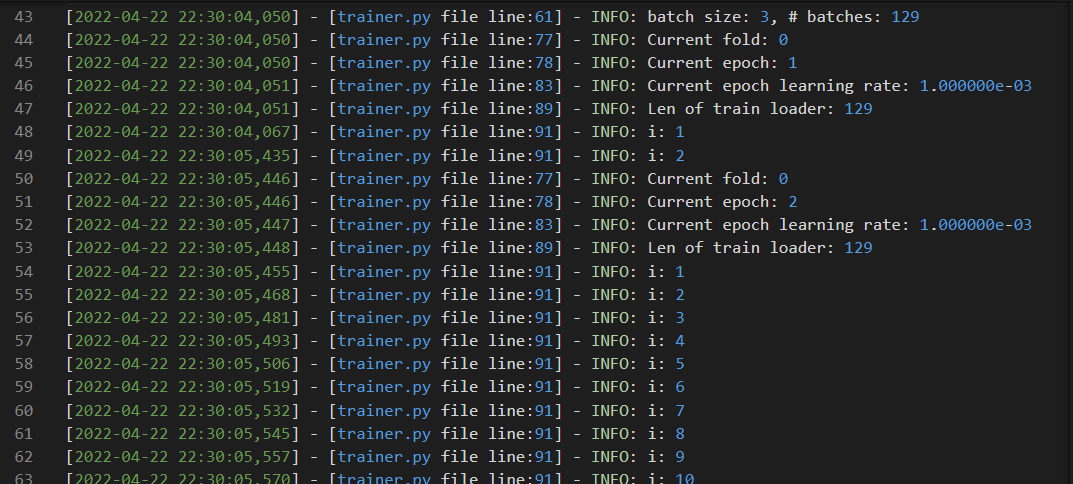

The output log looks very strange.

As shown in the picture above, the first epoch only runs 2 batches, while the later ones run more: epoch 2 runs 12 batches and epoch 3 runs 15.

Shouldn't every epoch run the same number of batches? Why is it not 129 each time, given that `len(dataloader['train'])` is 129?
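For reference, with a map-style dataset and the default batch sampler, a PyTorch `DataLoader` reports `len()` as the number of batches per pass: `ceil(len(dataset) / batch_size)`, or `floor(...)` when `drop_last=True`. The helper below is a hypothetical pure-Python sketch of that rule (it does not call PyTorch), and the dataset size 2050 and batch size 16 are made-up numbers chosen only because they give 129 batches.

```python
def expected_num_batches(dataset_size: int, batch_size: int, drop_last: bool = False) -> int:
    """Batches one full pass over a map-style DataLoader should yield.

    Hypothetical helper mirroring the default BatchSampler length rule:
    ceil(N / B) when the last partial batch is kept, floor(N / B) when dropped.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if drop_last:
        return dataset_size // batch_size
    # Integer ceiling division, avoiding float rounding for large sizes.
    return (dataset_size + batch_size - 1) // batch_size


# Hypothetical numbers: 2050 training images with batch_size=16.
print(expected_num_batches(2050, 16))                  # 129
print(expected_num_batches(2050, 16, drop_last=True))  # 128
```

With `enumerate(dataloader['train'], 1)`, each epoch would then be expected to log `i` values from 1 up to this number.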

You can find the entire project here: Project

Thank you in advance. This is so frustrating…