Hi @piojanu @ptrblck,

First of all, I would like to thank you for the great research and code. It has increased my training speed by an order of magnitude!

I have used the HDF5 Dataset with a single HDF5 file, and it worked great.

However, when I used it together with torch.utils.data.dataset.ConcatDataset, it slowed down significantly. Do you have any idea why this happens?

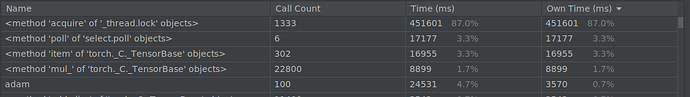

If it helps, here is the time profile of my training. Acquiring threads takes up most of the time. I have very little knowledge of threading, so this doesn’t make much sense to me. I have 6 workers, so maybe the 87% has to do with 5/6 ≈ 83%? I’m just guessing here.

After looking this up, I saw a post that suggested to use

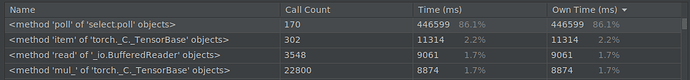

pin_memory=False. This does not speed up anything, but rather passes the bottleneck elsewhere:Docs:

https://pytorch.org/docs/stable/_modules/torch/utils/data/dataset.html#ConcatDataset

Suggestion to use pin_memory=False:
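For reference, the index mapping in the ConcatDataset source linked in the docs boils down to a binary search (bisect.bisect_right) over the cumulative lengths of the wrapped datasets. Below is a minimal pure-Python sketch of just that lookup step. The ConcatIndex class and the example lengths are hypothetical stand-ins for illustration; no HDF5 files, torch objects, or DataLoader workers are involved.

```python
import bisect
from itertools import accumulate


class ConcatIndex:
    """Hypothetical sketch of ConcatDataset's global-index -> (dataset, sample) mapping.

    `lengths` stands in for len(dataset) of each concatenated dataset
    (e.g. one entry per HDF5 file); no data is actually loaded.
    """

    def __init__(self, lengths):
        # Running totals, e.g. lengths [3, 2, 4] -> cumulative_sizes [3, 5, 9].
        self.cumulative_sizes = list(accumulate(lengths))

    def __len__(self):
        return self.cumulative_sizes[-1] if self.cumulative_sizes else 0

    def locate(self, idx):
        """Return (dataset_idx, sample_idx) for a global index."""
        # Negative indices count from the end, as in the linked source.
        if idx < 0:
            if -idx > len(self):
                raise ValueError("absolute value of index should not exceed dataset length")
            idx = len(self) + idx
        # Explicit bounds check; the real class would instead fail on
        # self.datasets[dataset_idx] with an IndexError.
        if idx >= len(self):
            raise IndexError("index out of range")
        # First dataset whose cumulative size is strictly greater than idx.
        dataset_idx = bisect.bisect_right(self.cumulative_sizes, idx)
        if dataset_idx == 0:
            sample_idx = idx
        else:
            sample_idx = idx - self.cumulative_sizes[dataset_idx - 1]
        return dataset_idx, sample_idx


# Three hypothetical files with 3, 2 and 4 samples.
index = ConcatIndex([3, 2, 4])
print(index.locate(4))   # (1, 1): second file, second sample
print(index.locate(-1))  # (2, 3): last sample of the last file
```

Each lookup is a single bisect call over a list with one entry per concatenated dataset, so the mapping itself should be cheap; the actual per-sample work happens inside each wrapped dataset's __getitem__.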