Hello,

I have a problem when using DataParallel: I cannot access my LSTM's internal weights. The following picture shows the error.

Thanks in advance for your help!

Regards,

André

If you are using `nn.DataParallel`, the original model is wrapped and stored in the `.module` attribute, so you might need to access the parameter via `model.module.layer.weight`.
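A minimal sketch of that access pattern, in case it helps. The `Net` class, its `lstm` attribute, and the `unwrap` helper are hypothetical names chosen for illustration; the sizes are borrowed from the shapes mentioned later in the thread.

```python
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical model that owns a single LSTM layer."""

    def __init__(self):
        super().__init__()
        self.lstm = nn.LSTM(input_size=2, hidden_size=450, batch_first=True)


def unwrap(model):
    """Return the underlying model whether or not it is wrapped in DataParallel."""
    return model.module if isinstance(model, nn.DataParallel) else model


model = Net()
parallel = nn.DataParallel(model)

# The wrapper does not forward attribute lookups to the wrapped model,
# so `parallel.lstm` raises AttributeError.
has_direct_access = hasattr(parallel, "lstm")

# Going through `.module` reaches the original model and its parameters.
w = parallel.module.lstm.weight_ih_l0
print(has_direct_access)  # False
print(w.shape)            # torch.Size([1800, 2])
```

A helper like `unwrap` lets the same code run with and without the wrapper, which can be convenient when switching between single-GPU and multi-GPU runs.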

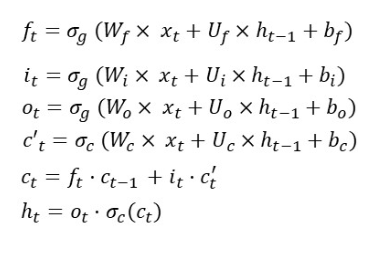

The LSTM formulas are the following:

However, when I extract the LSTM's internal weights and biases, the matrix sizes do not match for the first expression.

Wi_i is [450 by 2]

Wh_i is [450 by 450]

bi_i is [450 by 1]

bh_i is [450 by 1]

x is [5000 by 3 by 2]

h is [1 by 5000 by 450]

How is it possible to compute the i_t equation, for example?

Thanks for the help!

The docs describe the shape of the parameters, e.g.:

~LSTM.weight_ih_l[k] – the learnable input-hidden weights of the kth layer (W_ii|W_if|W_ig|W_io), of shape (4*hidden_size, input_size) for k = 0…

so they are in a packed format: the four gate matrices are stacked along the first dimension of a single tensor.
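Here is a rough sketch of how the packed tensors could be split and used to evaluate the input-gate equation from the `nn.LSTM` docs, `i_t = sigmoid(W_ii x_t + b_ii + W_hi h_(t-1) + b_hi)`. It assumes a single-layer, unidirectional LSTM with `batch_first=True` and the sizes from the question; the batch is shrunk from 5000 to 8 only to keep the example quick. Because the data is batched, one time step `x_t` has shape `(batch, input_size)`, so each weight is applied as `x_t @ W.T` rather than `W @ x_t`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# input_size, hidden_size and seq_len follow the question; batch=8 is an assumption.
input_size, hidden_size, seq_len, batch = 2, 450, 3, 8
lstm = nn.LSTM(input_size, hidden_size, batch_first=True)

x = torch.randn(batch, seq_len, input_size)   # (batch, seq, input)
h0 = torch.randn(1, batch, hidden_size)       # (num_layers, batch, hidden)
c0 = torch.randn(1, batch, hidden_size)

with torch.no_grad():
    # Each packed tensor stacks the four gates along dim 0 in the order i, f, g, o.
    W_ii, W_if, W_ig, W_io = lstm.weight_ih_l0.chunk(4, dim=0)  # each (450, 2)
    W_hi, W_hf, W_hg, W_ho = lstm.weight_hh_l0.chunk(4, dim=0)  # each (450, 450)
    b_ii, b_if, b_ig, b_io = lstm.bias_ih_l0.chunk(4, dim=0)    # each (450,)
    b_hi, b_hf, b_hg, b_ho = lstm.bias_hh_l0.chunk(4, dim=0)    # each (450,)

    x_t = x[:, 0, :]               # first time step: (batch, input_size)
    h_prev, c_prev = h0[0], c0[0]  # drop the layer dimension: (batch, hidden_size)

    # Gate equations for one time step, applied to the whole batch at once.
    i_t = torch.sigmoid(x_t @ W_ii.T + b_ii + h_prev @ W_hi.T + b_hi)
    f_t = torch.sigmoid(x_t @ W_if.T + b_if + h_prev @ W_hf.T + b_hf)
    g_t = torch.tanh(x_t @ W_ig.T + b_ig + h_prev @ W_hg.T + b_hg)
    o_t = torch.sigmoid(x_t @ W_io.T + b_io + h_prev @ W_ho.T + b_ho)
    c_t = f_t * c_prev + i_t * g_t
    h_t = o_t * torch.tanh(c_t)

    # Cross-check the manual step against the module's own output for that step.
    out, _ = lstm(x[:, :1, :], (h0, c0))
    matches = torch.allclose(h_t, out[:, 0, :], atol=1e-4)

print(W_ii.shape)  # torch.Size([450, 2])
print(i_t.shape)   # torch.Size([8, 450])
```

The comparison against `lstm(...)` is only a sanity check that the gate order and transposes are right; for later time steps the same computation would be repeated with `h_t` and `c_t` fed back in as the previous state.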