Hi there @albanD, @Yuzhou_Song

I noticed there is a small mistake in the code you provided:

The loss has to be unsqueezed inside the forward pass so that DataParallel is able to gather it back. The loss returned by PyTorch loss functions seems to have no dimensions, and DataParallel reassembles the batch along dim 0 by default. The class also needs to call the superclass constructor so that it does not raise an error. Anyway, thank you very much. Awesome help.

import torch
import torch.nn as nn


class FullModel(nn.Module):
    """Wraps a model and its loss so the loss is computed inside forward."""

    def __init__(self, model, loss):
        super(FullModel, self).__init__()
        self.model = model
        self.loss = loss

    def forward(self, targets, *inputs):
        outputs = self.model(*inputs)
        loss = self.loss(outputs, targets)
        # The loss has no dimensions; add one so it can be gathered along dim 0.
        return torch.unsqueeze(loss, 0), outputs


def DataParallel_withLoss(model, loss, **kwargs):
    model = FullModel(model, loss)
    # Missing keys fall back to None, which is DataParallel's own default.
    device_ids = kwargs.get('device_ids')
    output_device = kwargs.get('output_device')
    model = torch.nn.DataParallel(model, device_ids=device_ids, output_device=output_device)
    # .cuda(None) behaves like .cuda(): it uses the current device.
    return model.cuda(kwargs.get('cuda'))
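
To illustrate the unsqueeze point, here is a minimal CPU-only sketch. It assumes DataParallel gathers the per-replica outputs by concatenating them along dim 0; the two losses below only stand in for what two replicas would return, and the variable names and data are made up for the example.

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()

# Stand-ins for the losses two replicas would compute (hypothetical data).
loss_a = criterion(torch.zeros(4, 3), torch.ones(4, 3))
loss_b = criterion(torch.zeros(4, 3), torch.full((4, 3), 2.0))

# With the default reduction the loss is a 0-dim tensor.
assert loss_a.dim() == 0

# After unsqueeze each loss has shape (1,), so the pieces can be
# concatenated along dim 0, one entry per replica.
gathered = torch.cat(
    [torch.unsqueeze(loss_a, 0), torch.unsqueeze(loss_b, 0)], dim=0
)
print(gathered.shape)  # torch.Size([2])

# Reduce to a scalar before calling backward().
total = gathered.mean()
```

The gathered loss has one entry per replica, so it still needs a `.mean()` (or `.sum()`) before `backward()`.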

I tried this implementation and it works.
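
For reference, this is roughly how one training step looks with the wrapper. It is a sketch that runs on CPU without DataParallel so it can be tried anywhere; the toy model, data, and optimizer settings are assumptions, not the setup from my run.

```python
import torch
import torch.nn as nn


class FullModel(nn.Module):
    def __init__(self, model, loss):
        super().__init__()
        self.model = model
        self.loss = loss

    def forward(self, targets, *inputs):
        outputs = self.model(*inputs)
        loss = self.loss(outputs, targets)
        return torch.unsqueeze(loss, 0), outputs


torch.manual_seed(0)
net = nn.Linear(8, 2)  # hypothetical toy model
full = FullModel(net, nn.CrossEntropyLoss())
# On a multi-GPU machine this is where the DataParallel wrapper would go.
optimizer = torch.optim.SGD(full.parameters(), lr=0.1)

inputs = torch.randn(6, 8)
targets = torch.randint(0, 2, (6,))

# Note the argument order: targets first, then the model inputs.
per_replica_loss, outputs = full(targets, inputs)
loss = per_replica_loss.mean()  # one entry per replica -> scalar

optimizer.zero_grad()
loss.backward()
optimizer.step()
```

With several GPUs, `per_replica_loss` would have one entry per device instead of a single one, which is why the `.mean()` is there.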

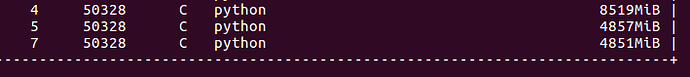

However, the imbalance keeps happening.

As far as I could check by printing parameter.device, the model weights are stored on the default GPU. In the case I ran, we can roughly say each mini-batch requires ~5 GB and the model weights ~4 GB (8500-4500).
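
A small sketch of the kind of check I mean, on a hypothetical toy model. It lists the devices the parameters live on and estimates the memory taken by the weights alone; activations, gradients, and the CUDA context are not counted, so the numbers reported by the driver will be larger.

```python
import torch
import torch.nn as nn

model = nn.Linear(8, 2)  # hypothetical stand-in for the real model

# Set of devices that hold at least one parameter.
devices = {p.device for p in model.parameters()}
print(devices)

# Bytes used by the parameters themselves.
weight_bytes = sum(p.numel() * p.element_size() for p in model.parameters())
print(weight_bytes)  # 72: (8 * 2 + 2) float32 values, 4 bytes each
```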

There is a gain from computing the loss in a distributed way.

Is it not possible to share the model weights among all GPUs? In this example I can increase the batch size until the default GPU uses 12 GB, but that would mean gpu1 and gpu2 would be using only 8.

In an even worse case, if I were using Adam (you mentioned it copies all the model weights), memory usage on GPU0 would be 12 GB while gpu1 and gpu2 would be using 4.
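
On the Adam point: as far as I know, torch.optim.Adam keeps two extra tensors per parameter (running averages of the gradient and of the squared gradient), created on the same device as the parameter. A rough sketch on a hypothetical toy model that compares the size of that state with the size of the weights:

```python
import torch
import torch.nn as nn

model = nn.Linear(8, 2)  # hypothetical toy model
optimizer = torch.optim.Adam(model.parameters())

# The optimizer state is created lazily, on the first step.
model(torch.randn(4, 8)).sum().backward()
optimizer.step()


def nbytes(t):
    """Bytes occupied by the elements of a tensor."""
    return t.numel() * t.element_size()


weight_bytes = sum(nbytes(p) for p in model.parameters())
state_bytes = sum(
    nbytes(optimizer.state[p]["exp_avg"]) + nbytes(optimizer.state[p]["exp_avg_sq"])
    for p in model.parameters()
)
print(state_bytes / weight_bytes)  # 2.0
```

So the optimizer state alone costs about twice the weights, all of it on the device that holds the parameters.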

If sharing the weights is not possible due to PyTorch requirements (I guess PyTorch requires all tensors to be on the same GPU to be able to operate on them), is there a way of weighting the workload, that is, assigning an uneven number of samples per GPU to reduce the memory requirements of the default GPU?

Toy example

BS=30

gpu0 -> 4

gpu1 -> 13

gpu2 -> 13
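
The toy split can be written down as a small helper. This is only the arithmetic: as far as I know nn.DataParallel splits the batch into equal chunks, so actually using such sizes would need a custom scatter step, which is not shown here. The function name and its arguments are hypothetical.

```python
def uneven_chunk_sizes(batch_size, num_gpus, first_gpu_size):
    """Give the first GPU a fixed share and split the rest as evenly as possible."""
    if num_gpus < 2:
        raise ValueError("need at least two GPUs to rebalance")
    if not 0 <= first_gpu_size <= batch_size:
        raise ValueError("first_gpu_size must be between 0 and batch_size")
    rest = batch_size - first_gpu_size
    base, extra = divmod(rest, num_gpus - 1)
    # The first `extra` remaining GPUs take one additional sample each.
    others = [base + (1 if i < extra else 0) for i in range(num_gpus - 1)]
    return [first_gpu_size] + others


sizes = uneven_chunk_sizes(30, 3, 4)
print(sizes)  # [4, 13, 13]
```

`torch.split` accepts a list of section sizes, so `torch.split(batch, sizes, dim=0)` would cut a batch tensor accordingly.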