Hi, I am implementing the PyTorch DCGAN tutorial (DCGAN Tutorial, PyTorch Tutorials 2.2.0+cu121 documentation) on two different datasets, but my generator loss is not converging.

I am training the model for 75 epochs and calculating the average loss for each epoch as:

average loss per epoch = sum of losses for epoch / total number of iterations in that epoch
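
In code, that averaging amounts to something like the sketch below. The function name `average_loss_per_epoch` and the sample loss values are placeholders for illustration only, not taken from my training script; in a real run each entry would be the scalar generator loss recorded for one batch.

```python
def average_loss_per_epoch(losses_by_epoch):
    """Return the mean loss of each epoch.

    losses_by_epoch: list of lists, one inner list of per-iteration
    scalar losses for each epoch (hypothetical layout).
    """
    averages = []
    for epoch_losses in losses_by_epoch:
        # sum of losses for the epoch / number of iterations in that epoch
        averages.append(sum(epoch_losses) / len(epoch_losses))
    return averages


# Made-up generator losses: 2 epochs with 3 iterations each
g_losses = [[0.9, 1.1, 1.0], [1.4, 1.6, 1.5]]
g_avg = average_loss_per_epoch(g_losses)
print(g_avg)
```

Averaging this way gives one point per epoch, so the plotted curve is smoother than the per-iteration losses but can hide spikes inside an epoch.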

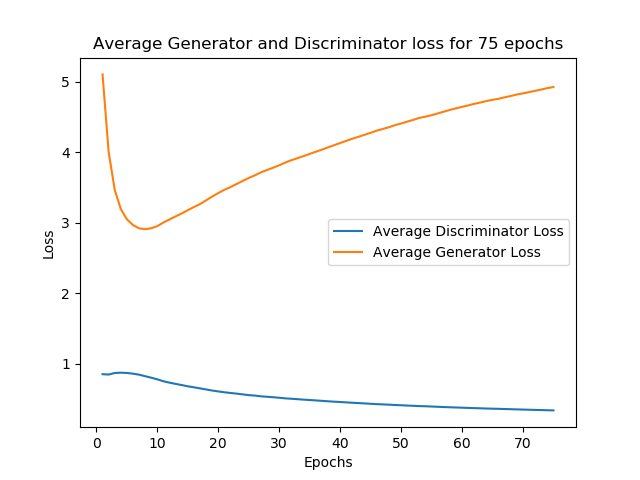

My final graph looks like this:

How can I fix the generator loss behaviour? I tried changing the learning rate to several different values, but it didn't work. Thanks for the help!