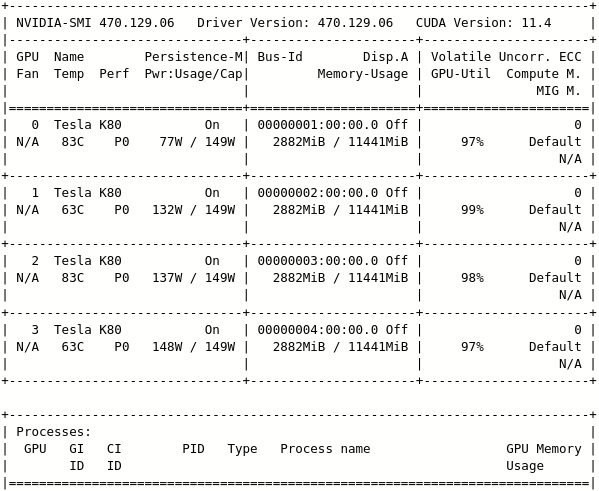

Is there a solution to this error other than reducing the batch size? On a single machine with DataParallel I can run this with a batch size of 32, but with DDP on an Azure cluster I get a memory allocation failure ("Cuda failure 'out of memory'") in NCCL on one of the 16 GPUs.
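When comparing the two runs, it may be worth checking that `batch_size` means something different in each setup. `nn.DataParallel` splits each input batch along dimension 0 across its devices. With `DistributedDataParallel`, every process runs its own `DataLoader`, so `batch_size` is per GPU and the global batch is that number times the world size. (The log reports `batch_size is: 1` per process on rank 11.) The arithmetic below is a minimal sketch, and the helper names are hypothetical, not part of PyTorch.

```python
def dataparallel_per_gpu_batch(batch_size: int, num_gpus: int) -> int:
    """Largest chunk each replica sees when nn.DataParallel scatters one batch.

    The batch is chunked along dim 0, so chunks are ceil(batch_size / num_gpus).
    """
    return -(-batch_size // num_gpus)  # ceiling division


def ddp_global_batch(per_process_batch: int, world_size: int) -> int:
    """Effective batch per optimizer step under DDP (one DataLoader per process)."""
    return per_process_batch * world_size


# Single node, DataParallel over 4 GPUs with batch_size=32 -> 8 samples per GPU.
dp_per_gpu = dataparallel_per_gpu_batch(32, 4)

# Passing batch_size=32 to every DDP process instead puts 32 samples on each GPU
# (roughly 4x the per-GPU activation memory) and a global batch of 32 * 16.
ddp_same_number = ddp_global_batch(32, 16)

# Matching DataParallel's per-GPU load means batch_size=8 per DDP process.
ddp_matched = ddp_global_batch(dp_per_gpu, 16)

print(dp_per_gpu, ddp_same_number, ddp_matched)
```

Under these assumptions, "32 on one node with DataParallel" corresponds to a per-process batch size of 8 under DDP, not 32.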

2023/05/16 19:24:26 WARNING mlflow.tracking.fluent: Exception raised while enabling autologging for sklearn: No module named 'sklearn.utils.testing'

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NCCL_SOCKET_IFNAME set by environment to eth0

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO Bootstrap : Using eth0:10.0.0.6<0>

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO Plugin Path : /opt/hpcx/nccl_rdma_sharp_plugin/lib/libnccl-net.so

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO P2P plugin IBext

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NCCL_IB_PCI_RELAXED_ORDERING set by environment to 1.

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NCCL_SOCKET_IFNAME set by environment to eth0

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NET/IB : No device found.

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NCCL_IB_DISABLE set by environment to 1.

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NCCL_SOCKET_IFNAME set by environment to eth0

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO NET/Socket : Using [0]eth0:10.0.0.6<0>

696b7901f9044f23b786795c0ef7257b000002:48:48 [3] NCCL INFO Using network Socket

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0001-0000-3130-444531303244/pci0001:00/0001:00:00.0/../max_link_speed, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0001-0000-3130-444531303244/pci0001:00/0001:00:00.0/../max_link_width, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0002-0000-3130-444531303244/pci0002:00/0002:00:00.0/../max_link_speed, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0002-0000-3130-444531303244/pci0002:00/0002:00:00.0/../max_link_width, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0003-0000-3130-444531303244/pci0003:00/0003:00:00.0/../max_link_speed, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0003-0000-3130-444531303244/pci0003:00/0003:00:00.0/../max_link_width, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0004-0000-3130-444531303244/pci0004:00/0004:00:00.0/../max_link_speed, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0004-0000-3130-444531303244/pci0004:00/0004:00:00.0/../max_link_width, ignoring

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Topology detection: network path /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/000d3a02-435a-000d-3a02-435a000d3a02 is not a PCI device (vmbus). Attaching to first CPU

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Attribute coll of node net not found

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO === System : maxWidth 5.0 totalWidth 12.0 ===

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO CPU/0 (1/1/1)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + PCI[5000.0] - NIC/0

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + NET[5.0] - NET/0 (0/0/5.000000)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + PCI[12.0] - GPU/100000 (8)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + PCI[12.0] - GPU/200000 (9)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + PCI[12.0] - GPU/300000 (10)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO + PCI[12.0] - GPU/400000 (11)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO ==========================================

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO GPU/100000 :GPU/100000 (0/5000.000000/LOC) GPU/200000 (2/12.000000/PHB) GPU/300000 (2/12.000000/PHB) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO GPU/200000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (0/5000.000000/LOC) GPU/300000 (2/12.000000/PHB) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO GPU/300000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (2/12.000000/PHB) GPU/300000 (0/5000.000000/LOC) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO GPU/400000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (2/12.000000/PHB) GPU/300000 (2/12.000000/PHB) GPU/400000 (0/5000.000000/LOC) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO NET/0 :GPU/100000 (3/5.000000/PHB) GPU/200000 (3/5.000000/PHB) GPU/300000 (3/5.000000/PHB) GPU/400000 (3/5.000000/PHB) CPU/0 (2/5.000000/PHB) NET/0 (0/5000.000000/LOC)

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Pattern 4, crossNic 0, nChannels 1, speed 5.000000/5.000000, type PHB/PHB, sameChannels 1

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO 0 : NET/0 GPU/8 GPU/9 GPU/10 GPU/11 NET/0

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Pattern 1, crossNic 0, nChannels 1, speed 6.000000/5.000000, type PHB/PHB, sameChannels 1

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO 0 : NET/0 GPU/8 GPU/9 GPU/10 GPU/11 NET/0

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Pattern 3, crossNic 0, nChannels 0, speed 0.000000/0.000000, type NVL/PIX, sameChannels 1

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Ring 00 : 10 -> 11 -> 12

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Ring 01 : 10 -> 11 -> 12

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Trees [0] -1/-1/-1->11->10 [1] -1/-1/-1->11->10

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Setting affinity for GPU 3 to 0fff

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Channel 00 : 11[400000] -> 12[100000] [send] via NET/Socket/0

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Channel 01 : 11[400000] -> 12[100000] [send] via NET/Socket/0

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Connected all rings

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Channel 00 : 11[400000] -> 10[300000] via direct shared memory

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Channel 01 : 11[400000] -> 10[300000] via direct shared memory

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO Connected all trees

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO threadThresholds 8/8/64 | 128/8/64 | 8/8/512

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO 2 coll channels, 2 p2p channels, 1 p2p channels per peer

696b7901f9044f23b786795c0ef7257b000002:48:298 [3] NCCL INFO comm 0x153408001240 rank 11 nranks 16 cudaDev 3 busId 400000 - Init COMPLETE

Downloading: "https://download.pytorch.org/models/vgg19-dcbb9e9d.pth" to /root/.cache/torch/hub/checkpoints/vgg19-dcbb9e9d.pth

CPython

3.8.13

uname_result(system='Linux', node='696b7901f9044f23b786795c0ef7257b000002', release='5.15.0-1029-azure', version='#36~20.04.1-Ubuntu SMP Tue Dec 6 17:00:26 UTC 2022', machine='x86_64', processor='x86_64')

training script path: /mnt/azureml/cr/j/29c1643998874ef7b6bfd57858b8c0ea/exe/wd

start: 19:24:26.410571

manual seed set to 5655

opt.checkpoints = /mnt/azureml/cr/j/29c1643998874ef7b6bfd57858b8c0ea/cap/data-capability/wd/checkpoints

world size is: 16

global rank is 11 and local_rank is 3

is_distributed is True and batch_size is 1

os.getpid() is 48 and initializing process group with {'MASTER_ADDR': '10.0.0.4', 'MASTER_PORT': '6105', 'LOCAL_RANK': '3', 'RANK': '11', 'WORLD_SIZE': '16'}

device is cuda:3

MLflow version: 1.25.1

Tracking URI: azureml:URI

Artifact URI: azureml:URI

load data

train data size: 246000

training data len: 246000

batch size is: 1

training data: 15375 batches

load models

torch.cuda.device_count(): 4

type opt.gpuids: <class 'list'>

gpuids are: [0, 1, 2, 3]

Training network pretrained on imagenet.

100%|██████████| 548M/548M [00:02<00:00, 237MB/s]

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/socket.h:423 NCCL WARN Net : Connection closed by remote peer 10.0.0.6<54764>

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO include/socket.h:445 -> 2

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO include/socket.h:457 -> 2

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:229 -> 2

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] NCCL INFO bootstrap.cc:231 -> 1

696b7901f9044f23b786795c0ef7257b000002:48:302 [3] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 91)
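The numbers in the log can be cross-checked with a little arithmetic. The sketch below rests on a few assumptions. It assumes ranks are assigned node-major with 4 GPUs per node, so global rank = node index × 4 + local rank. It also uses a simple modulo ring, even though NCCL actually picks the ring order from the detected topology, so the ring helper only reproduces what this particular log shows. Finally, it assumes the training loader uses `DistributedSampler` with its default `drop_last=False`, which gives each rank ceil(N / world_size) samples. All helper names are hypothetical.

```python
import math
from typing import Tuple


def node_of(rank: int, gpus_per_node: int) -> int:
    # Node-major assignment (assumption): ranks 0-3 on node 0, 4-7 on node 1, ...
    return rank // gpus_per_node


def ring_neighbors(rank: int, nranks: int) -> Tuple[int, int]:
    # Simple modulo ring (assumption); matches "Ring 00 : 10 -> 11 -> 12".
    return (rank - 1) % nranks, (rank + 1) % nranks


def per_rank_batches(dataset_len: int, world_size: int, batch_size: int) -> int:
    # DistributedSampler(drop_last=False) gives each rank ceil(N / world_size)
    # samples; a DataLoader with drop_last=False then yields ceil(samples / batch_size).
    samples = math.ceil(dataset_len / world_size)
    return math.ceil(samples / batch_size)


RANK, WORLD_SIZE, GPUS_PER_NODE = 11, 16, 4

prev_rank, next_rank = ring_neighbors(RANK, WORLD_SIZE)
# Rank 12 sits on the next node, so the 11 -> 12 hop goes over NET/Socket (eth0),
# while 11 -> 10 stays on the same node and can use shared memory.
crosses_network = node_of(next_rank, GPUS_PER_NODE) != node_of(RANK, GPUS_PER_NODE)

print(node_of(RANK, GPUS_PER_NODE), RANK % GPUS_PER_NODE, prev_rank, next_rank, crosses_network)
print(per_rank_batches(246000, WORLD_SIZE, 1))   # the log reports "training data: 15375 batches"
print(per_rank_batches(246000, WORLD_SIZE, 32))
```

With these assumptions, rank 11 is local rank 3 on node 2. Its ring successor (rank 12) is the one it reaches via `NET/Socket`, and 246000 samples over 16 ranks give the 15375 per-rank batches seen at batch size 1.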

I don’t see any memory problem on the nodes.
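Because the failure is a CUDA allocation inside NCCL on a single rank (`cudaDev 3`), node-level monitoring may miss it. It can help to log free memory on each process's own GPU right before the process group is created, and to make sure every DDP process touches only `cuda:<LOCAL_RANK>`. The log shows `device is cuda:3` for rank 11, so that rank appears to be pinned correctly. However, it also prints `gpuids are: [0, 1, 2, 3]` inside a DDP process. I can't tell from the log how that list is used. If it is passed to `nn.DataParallel` or used to place tensors, though, all four processes on a node would allocate on all four GPUs.

The sketch below is hypothetical: `select_device_ids`, `format_mem_report`, and `init_distributed` are illustrative names. It does use real PyTorch calls: `torch.cuda.set_device`, `torch.cuda.mem_get_info` (available in recent PyTorch versions), and `torch.distributed.init_process_group`.

```python
import os
from typing import List


def select_device_ids(is_distributed: bool, local_rank: int, gpuids: List[int]) -> List[int]:
    # Under DDP each process should own exactly one GPU; only the
    # single-process DataParallel path should see the whole list.
    return [local_rank] if is_distributed else list(gpuids)


def format_mem_report(rank: int, device: int, free_bytes: int, total_bytes: int) -> str:
    gib = 1024 ** 3
    return (f"rank {rank} cuda:{device}: {free_bytes / gib:.2f} GiB free "
            f"of {total_bytes / gib:.2f} GiB")


def init_distributed() -> int:
    """Pin this process to its GPU, report free memory, then create the NCCL group."""
    import torch  # imported here so the helpers above work without PyTorch installed
    import torch.distributed as dist

    local_rank = int(os.environ["LOCAL_RANK"])
    rank = int(os.environ["RANK"])
    # Set the current device before init_process_group so the CUDA context and
    # NCCL buffers of this process land on cuda:<LOCAL_RANK> instead of cuda:0.
    torch.cuda.set_device(local_rank)
    free, total = torch.cuda.mem_get_info(local_rank)
    print(format_mem_report(rank, local_rank, free, total), flush=True)
    dist.init_process_group(backend="nccl", init_method="env://")
    return local_rank
```

For example, `select_device_ids(True, 3, [0, 1, 2, 3])` returns `[3]`. The model would then be wrapped as `DistributedDataParallel(model.to(3), device_ids=[3])` rather than with all four GPU ids.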