Hello All,

I am using a network that combines U-Net and residual architectures for regression. The network has 14 3D conv layers, and each one follows roughly this sequence:

CONV>BN>ReLU
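For reference, here is a minimal sketch of one such unit, assuming PyTorch (the post only mentions CUDA, so the framework is an assumption). The helper name `conv_bn_relu`, the channel counts, and the kernel size are hypothetical and not taken from the actual network.

```python
import torch
import torch.nn as nn


def conv_bn_relu(in_channels: int, out_channels: int) -> nn.Sequential:
    """One CONV > BN > ReLU unit for 3D volumes (hypothetical sizes)."""
    return nn.Sequential(
        # kernel_size=3 with padding=1 keeps the spatial size unchanged
        nn.Conv3d(in_channels, out_channels, kernel_size=3, padding=1, bias=False),
        nn.BatchNorm3d(out_channels),
        nn.ReLU(inplace=True),
    )


block = conv_bn_relu(in_channels=1, out_channels=8)
block.eval()  # use BatchNorm running statistics for this shape check
with torch.no_grad():
    x = torch.randn(1, 1, 86, 110, 78)  # (batch, channels, D, H, W)
    y = block(x)
print(y.shape)
```

Note that `BatchNorm3d` behaves differently in `train()` and `eval()` mode: it normalizes with per-batch statistics during training and with running averages during evaluation.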

From an 86x110x78 image, I am regressing to a vector of size 512.

So the last two layers are fully connected layers.

I am starting with a batch size of 1 (a batch size of 4 generates a CUDA out-of-memory error) and a learning rate of 0.05.
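To give a rough sense of why a larger batch runs out of memory, here is a back-of-the-envelope estimate of the size of a single full-resolution feature map in float32. The channel count of 32 is hypothetical (the real per-layer widths are not listed here), and the estimate ignores gradients, optimizer state, and downsampled layers, so treat it as a sketch only.

```python
# Rough activation-memory estimate for ONE feature map at full input resolution.
DEPTH, HEIGHT, WIDTH = 86, 110, 78  # input size from the post
BYTES_PER_FLOAT32 = 4
CHANNELS = 32  # hypothetical; not stated in the post


def feature_map_mib(batch_size: int, channels: int = CHANNELS) -> float:
    """Size in MiB of one float32 feature map of shape (batch, channels, D, H, W)."""
    voxels = DEPTH * HEIGHT * WIDTH
    return batch_size * channels * voxels * BYTES_PER_FLOAT32 / 2**20


for batch_size in (1, 4):
    size = feature_map_mib(batch_size)
    print(f"batch {batch_size}: {size:.1f} MiB per full-resolution feature map")
```

The cost scales linearly with batch size, and every layer whose output is kept for the backward pass adds its own feature map on top of this.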

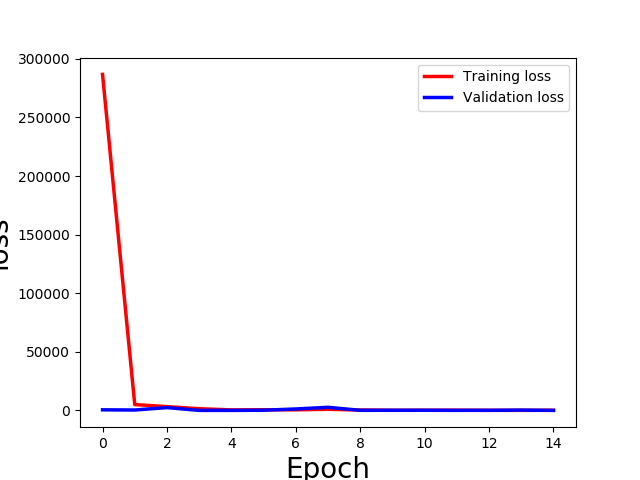

I am getting the following loss curves while training:

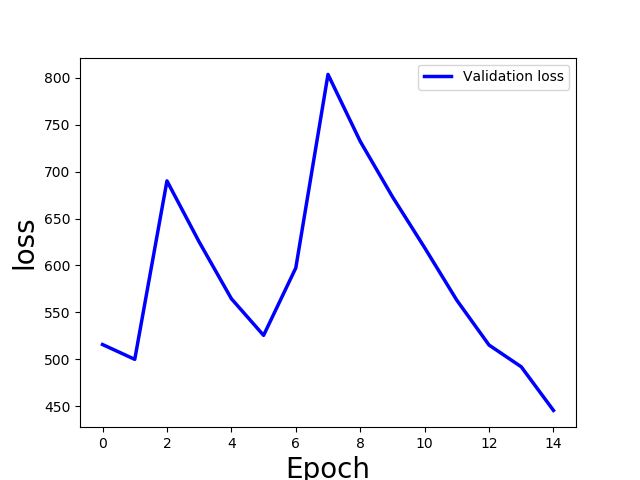

Close up look at validation loss:

Can anyone explain why the train loss drops like that? Is the network overfitting right after the first epoch? Shouldn't the validation loss be larger at the beginning?

N.B. I am not using any Dropout layers.