Thanks @ptrblck! Your answer is very instructive!

My setup may be a little complex. I am working on transfer learning, and the results do differ when I use pre_cuda (preload every batch from the data loader onto the GPU) and use_cycle (wrap the loader in an infinite cycle of batches). I also added the code from the reproducibility notes:

    np.random.seed(0)
    torch.manual_seed(0)
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False

but this code did not help.
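For reference, a slightly broader seeding helper might look like the sketch below. It assumes PyTorch and NumPy are installed; the name seed_everything is hypothetical. Besides the settings above, it seeds Python's own random module, which some data augmentation code may draw from, and explicitly seeds all CUDA devices (which may be redundant on recent PyTorch versions). It is not guaranteed to remove every source of nondeterminism.

```python
import random

import numpy as np
import torch


def seed_everything(seed=0):
    """Seed the common RNGs (hypothetical helper, not from the original post)."""
    random.seed(seed)                 # Python's built-in RNG
    np.random.seed(seed)              # NumPy's global RNG
    torch.manual_seed(seed)           # PyTorch RNG
    torch.cuda.manual_seed_all(seed)  # all CUDA devices; ignored without CUDA
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


# Call once at the very start of the script, before building loaders or models.
seed_everything(0)
```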

I am doing transfer learning and use loss.DAN() to compute a distance between the source features and the target features as a transfer loss. The transfer loss is added to the training loss, and the total loss is backpropagated.

I have not yet tested this with a clean, minimal training script. What confuses me is why the runs differ at all; perhaps, as you answered, it is caused by the CUDA implementation.

Here is my test code:

    from itertools import cycle  # assumed: cycle is itertools.cycle

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Project-local names (defined elsewhere, not shown): ARGS, device,
    # transform224c, get_data_loader, construct_net, lr_schedule, loss,
    # loss_logger, logger, writer, ts


    def transfer_classification():
        # data config
        root_source = ARGS.path_data + ARGS.field_source + "/images"
        root_target = ARGS.path_data + ARGS.field_target + "/images"
        transform = transform224c()
        batch_size = ARGS.batch_size

        # load data
        data_loader_source, classes_source = get_data_loader(
            root_source,
            transform=transform,
            batch_size=batch_size,
            drop_last=True)
        data_loader_target, classes_target = get_data_loader(
            root_target,
            transform=transform,
            batch_size=batch_size,
            drop_last=True)

        # net
        base_network, bottleneck_layer, classifier_layer = construct_net()

        # deep loss
        criterion = nn.CrossEntropyLoss()

        # training params
        parameter_list = [{"params": bottleneck_layer.parameters(), "lr": 10},
                          {"params": classifier_layer.parameters(), "lr": 10}]

        # optimizer
        optimizer = optim.SGD(parameter_list, lr=1.0, momentum=0.9, weight_decay=0.0005, nesterov=True)
        param_lr_list = []
        for param_group in optimizer.param_groups:
            param_lr_list.append(param_group["lr"])

        ## train
        # for testing data-loading efficiency: move every batch to the GPU up front
        if ARGS.pre_cuda:
            data_loader_source = [[x[0].to(device), x[1].to(device)] for x in data_loader_source]
            data_loader_target = [[x[0].to(device), x[1].to(device)] for x in data_loader_target]

        if ARGS.use_cycle:
            iter_source = cycle(data_loader_source)
            iter_target = cycle(data_loader_target)
        else:
            len_train_source = len(data_loader_source)
            len_train_target = len(data_loader_target)

        for i in range(ARGS.iteration):
            # optimizer
            optimizer = lr_schedule.inv_lr_scheduler(
                param_lr_list, optimizer, i, init_lr=0.0003, gamma=0.0003, power=0.75)
            optimizer.zero_grad()

            # restart the iterators at the start of every epoch
            if not ARGS.use_cycle:
                if i % len_train_source == 0:
                    iter_source = iter(data_loader_source)
                if i % len_train_target == 0:
                    iter_target = iter(data_loader_target)

            inputs_source, labels_source = next(iter_source)
            inputs_target, labels_target = next(iter_target)

            if not ARGS.pre_cuda:
                inputs_source, labels_source = inputs_source.to(device), labels_source.to(device)
                inputs_target, labels_target = inputs_target.to(device), labels_target.to(device)

            # forward source and target batches together
            inputs = torch.cat((inputs_source, inputs_target), dim=0)
            features = base_network(inputs)
            features = bottleneck_layer(features)
            outputs = classifier_layer(features)

            # first half of the batch is source, second half is target
            classifier_loss = criterion(outputs.narrow(0, 0, batch_size), labels_source)
            classifier_loss_target = criterion(outputs.narrow(0, batch_size, batch_size), labels_target)
            transfer_loss = loss.DAN(features.narrow(0, 0, batch_size),
                                     features.narrow(0, batch_size, batch_size))

            total_loss = classifier_loss + transfer_loss
            total_loss.backward()
            optimizer.step()

            loss_logger.update(classifier_loss, classifier_loss_target, transfer_loss, total_loss)

            if i % 100 == 0:
                ts.cut()

            # log loss
            if i % 20 == 0:  # print every NUM mini-batches
                losses = loss_logger.get_avg()
                logger.info("[%6d] source_loss: %.3f, target_loss: %.3f, transfer_loss: %6.3f, total_loss: %6.3f" %
                            (i, losses[0], losses[1], losses[2], losses[3]))
                writer.add_scalars(
                    'data/scalar_group',
                    {'source': losses[0], 'target': losses[1], 'transfer': losses[2], 'total': losses[3]}, i)

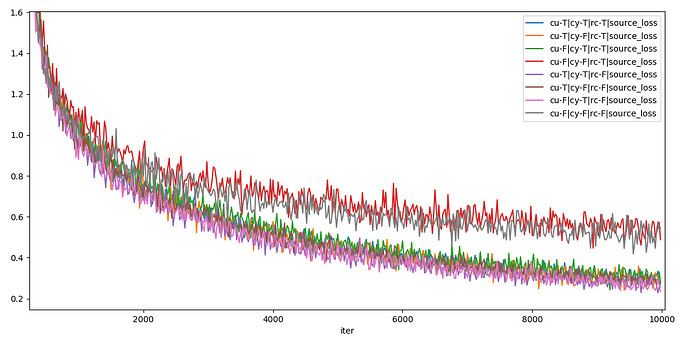

Above is a plot of my results; it only contains the training loss on the source data.

Legend: cu = pre_cuda, cy = use_cycle, rc = random seed NOT set.

The runs with pre_cuda and use_cycle differ from the others.
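One possible explanation, offered as a guess rather than a diagnosis: itertools.cycle stores the items from its first pass and replays them, and the pre_cuda list comprehension likewise materializes a single pass. If the loader shuffles or applies random transforms, those runs would then see the same batches every epoch, while the plain loader draws a fresh order each time. The sketch below shows the effect with a hypothetical stand-in class, ShufflingLoader, in place of a real DataLoader.

```python
import random
from itertools import cycle


class ShufflingLoader:
    """Hypothetical stand-in for a loader that reshuffles on every iter()."""

    def __init__(self, items, seed=0):
        self.items = list(items)
        self.rng = random.Random(seed)

    def __iter__(self):
        order = self.items[:]
        self.rng.shuffle(order)  # new order on every pass
        return iter(order)

    def __len__(self):
        return len(self.items)


def epochs_with_cycle(loader, epochs):
    # cycle() calls iter(loader) once, caches that pass, then replays it
    it = cycle(loader)
    n = len(loader)
    return [[next(it) for _ in range(n)] for _ in range(epochs)]


def epochs_with_reiter(loader, epochs):
    # calling iter(loader) per epoch lets the loader reshuffle each time
    return [list(iter(loader)) for _ in range(epochs)]


cycled = epochs_with_cycle(ShufflingLoader(range(8)), 3)
fresh = epochs_with_reiter(ShufflingLoader(range(8)), 3)

print("cycle :", cycled)  # every epoch repeats the first epoch's order
print("reiter:", fresh)   # each epoch is an independent shuffle
```

If this is what happens in the real script, the pre_cuda and use_cycle curves would be expected to differ from the others even with identical seeds, because the training data order itself is different.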

I am not pasting my TensorBoard results because TensorBoard reports the following warnings, and I have not found a solution:

W1111 23:44:09.107531 Reloader tf_logging.py:120] Detected out of order event.step likely caused by a TensorFlow restart. Purging 500 expired tensor events from Tensorboard display between the previous step: 9980 (timestamp: 1541875220.4483728) and current step: 0 (timestamp: 1541875229.00469).

W1111 23:44:09.107531 140580398749440 tf_logging.py:120] Detected out of order event.step likely caused by a TensorFlow restart. Purging 500 expired tensor events from Tensorboard display between the previous step: 9980 (timestamp: 1541875220.4483728) and current step: 0 (timestamp: 1541875229.00469).

W1111 23:44:09.149514 Reloader tf_logging.py:120] Detected out of order event.step likely caused by a TensorFlow restart. Purging 281 expired tensor events from Tensorboard display between the previous step: 5600 (timestamp: 1541876346.2499464) and current step: 0 (timestamp: 1541905892.754689).
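Warnings like these typically appear when several runs write event files into the same log directory, so the step counter appears to jump back to 0. Whether that is the cause here is an assumption, since the writer setup is not shown. A minimal sketch that gives each configuration its own directory (make_run_dir and its arguments are hypothetical names):

```python
import os
import time


def make_run_dir(base_dir, pre_cuda, use_cycle, seeded):
    """Create and return a per-run log directory (hypothetical helper)."""
    # encode the experiment flags in the directory name, e.g. cu1_cy0_rs1
    tag = "cu{}_cy{}_rs{}".format(int(pre_cuda), int(use_cycle), int(seeded))
    # a timestamp separates repeated runs of the same configuration
    stamp = time.strftime("%Y%m%d-%H%M%S")
    run_dir = os.path.join(base_dir, "{}_{}".format(tag, stamp))
    os.makedirs(run_dir, exist_ok=True)
    return run_dir


# The returned path would then be handed to the summary writer,
# e.g. writer = SummaryWriter(run_dir)
```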

For now I will sidestep this problem by not using pre_cuda and use_cycle.

Anyway, thank you for your help!