I'm using the snippet below to measure how long each batch takes to load.

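```python
import time

import torch

torch.cuda.synchronize()  # wait for any pending GPU work before starting the clock
st_batch = time.time()

for cnt, batch in enumerate(train_data_loader):
    torch.cuda.synchronize()
    end_batch = time.time()
    print(end_batch - st_batch)  # time spent waiting for this batch

    # do something

    # finish this step's asynchronous GPU work so it is not counted as loading time
    torch.cuda.synchronize()
    st_batch = time.time()
```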

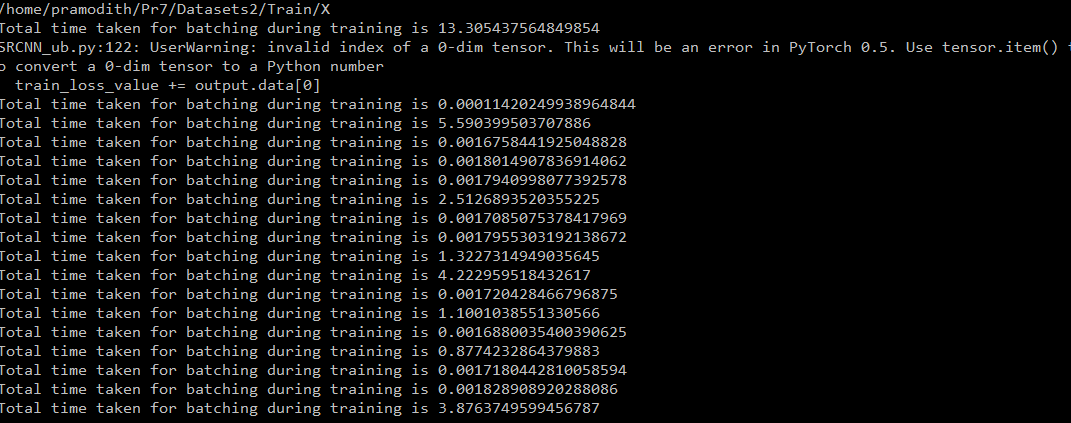

I observe that some batches take 3 to 5 seconds to load, whereas others take only 0.002 s.
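To make that spread easier to quantify, here is a minimal, self-contained sketch of the same per-batch timing. It assumes a hypothetical random `TensorDataset` in place of the real training data, synchronizes CUDA only when a GPU is available, and collects the wait times in a list so they can be summarized (min, median, max) instead of printed one by one. `time.perf_counter` is used because it is intended for measuring short intervals.

```python
import statistics
import time

import torch
from torch.utils.data import DataLoader, TensorDataset


def time_batch_loading(loader):
    """Return the wall-clock time (seconds) spent waiting for each batch."""
    use_cuda = torch.cuda.is_available()
    load_times = []
    if use_cuda:
        torch.cuda.synchronize()  # no earlier GPU work should leak into the first timing
    start = time.perf_counter()
    for batch in loader:
        if use_cuda:
            torch.cuda.synchronize()
        load_times.append(time.perf_counter() - start)
        # ... the training step would go here ...
        if use_cuda:
            torch.cuda.synchronize()  # finish the step's kernels before restarting the clock
        start = time.perf_counter()
    return load_times


if __name__ == "__main__":
    # Hypothetical stand-in data: 1,000 random samples with 32 features each.
    dataset = TensorDataset(torch.randn(1000, 32), torch.randint(0, 2, (1000,)))
    loader = DataLoader(dataset, batch_size=64, shuffle=True, num_workers=0)
    times = time_batch_loading(loader)
    print(f"batches: {len(times)}")
    print(
        f"min {min(times):.4f}s  "
        f"median {statistics.median(times):.4f}s  "
        f"max {max(times):.4f}s"
    )
```

Swapping the stand-in dataset for the real one (and the real `DataLoader` settings) shows whether the slow batches are rare outliers or a regular, periodic pattern.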

I assume this is a bottleneck when training my network. Is there any way around it?