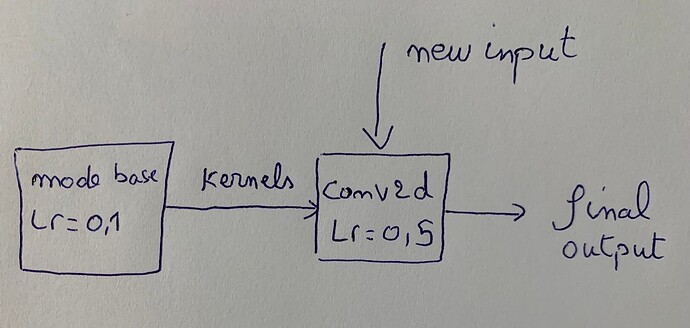

I assign a different learning rate to the last conv2d layer of my model, while the `model_base` parameters should be learned with the default lr. The tricky part is that the output of `model_base` is used as the kernel of that last conv2d layer. Since this output is updated with the default lr, I am afraid that the kernels of the last conv2d layer will inherit this same lr instead of the one I assigned. The problem is that during training there is no way to consult the lr of a given parameter. I hope the sketch clarifies the idea.

Would the kernel inherit the new lr assigned to the conv2d layer?

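The learning rate is just a value that scales how much the optimizer adjusts each parameter in a training step. It does not intersect with kernel values: the learning rate is attached to the parameter groups passed to the optimizer, not to tensors, so a kernel computed in the forward pass has no learning rate of its own to inherit.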
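A minimal PyTorch sketch of this, assuming the kernel is produced by `model_base` and passed to `torch.nn.functional.conv2d` (the class `DynamicKernelNet`, the helper `lr_of`, and all sizes are hypothetical, not taken from the question). The kernel belongs to no parameter group, and the parameters that generate it are stepped with `model_base`'s lr. It also shows that the lr applied to a given parameter can be looked up through `optimizer.param_groups`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DynamicKernelNet(nn.Module):
    """Hypothetical model: model_base predicts the kernel of the final conv."""

    def __init__(self, in_ch=3, out_ch=4, k=3, z_dim=8):
        super().__init__()
        self.shape = (out_ch, in_ch, k, k)
        # model_base: maps a code vector z to a flattened conv kernel.
        self.model_base = nn.Linear(z_dim, out_ch * in_ch * k * k)
        # Assumption: the final conv's only learnable parameter is its bias,
        # because its weights are produced by model_base at runtime.
        self.head_bias = nn.Parameter(torch.zeros(out_ch))

    def forward(self, x, z):
        # The kernel is an ordinary (non-leaf) tensor, not an nn.Parameter,
        # so it is never registered with the optimizer and has no lr.
        kernel = self.model_base(z).view(self.shape)
        out = F.conv2d(x, kernel, bias=self.head_bias, padding=1)
        return out, kernel


def lr_of(param, optimizer):
    """Return the lr of the param group holding `param`, or None if absent."""
    for group in optimizer.param_groups:
        # Compare by identity; `in` would call elementwise tensor equality.
        if any(p is param for p in group["params"]):
            return group["lr"]
    return None


torch.manual_seed(0)
model = DynamicKernelNet()
opt = torch.optim.SGD(
    [
        {"params": model.model_base.parameters()},  # falls back to lr=1e-3
        {"params": [model.head_bias], "lr": 0.1},   # custom lr for the head
    ],
    lr=1e-3,
)

x, z = torch.randn(2, 3, 5, 5), torch.randn(8)
out, kernel = model(x, z)
out.sum().backward()

# Snapshot values and gradients, then take one plain SGD step (p -= lr * grad).
w_before = model.model_base.weight.detach().clone()
w_grad = model.model_base.weight.grad.detach().clone()
b_before = model.head_bias.detach().clone()
b_grad = model.head_bias.grad.detach().clone()
opt.step()

print(lr_of(model.model_base.weight, opt))  # 0.001
print(lr_of(model.head_bias, opt))          # 0.1
print(lr_of(kernel, opt))                   # None
```

In this sketch the gradient still flows through the kernel back into `model_base`, but the size of the resulting update is set by `model_base`'s group lr (1e-3), never by the lr given to the head.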