I trained a model for sequence-to-sequence labeling. After training, I saved its weights with `torch.save(model.state_dict(), ...)` (see `save_model` below). For testing, I load the model and its state, then switch it to evaluation mode with `model.eval()`. When I then run the loaded model on the same input, I get different results every time. I have attached the code and the outputs for reference. Any help would be appreciated.
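One way to rule out a bad checkpoint is to check what `load_state_dict` reports and to confirm that a reloaded copy reproduces the original model's outputs. The sketch below assumes a small hypothetical `TinyTagger` in place of `SentenceSelectionSumm`. Note that only registered parameters and buffers end up in a `state_dict`; a plain tensor attribute created randomly in `__init__` is not saved and is re-randomized on every run.

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyTagger(nn.Module):
    """Hypothetical stand-in for SentenceSelectionSumm: two linear layers + sigmoid."""

    def __init__(self, in_dim: int = 8, hidden: int = 10, dropout: float = 0.2):
        super().__init__()
        self.lin_1 = nn.Linear(in_dim, hidden)
        self.drop = nn.Dropout(dropout)  # module dropout: switched off by .eval()
        self.lin_2 = nn.Linear(hidden, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.sigmoid(self.lin_2(self.drop(self.lin_1(x))))


torch.manual_seed(0)
trained = TinyTagger()
path = os.path.join(tempfile.mkdtemp(), "ckpt-0-0")
torch.save(trained.state_dict(), path)

# A fresh instance starts from a different random init, then receives the saved weights.
restored = TinyTagger()
result = restored.load_state_dict(torch.load(path))  # strict=True by default
print("missing:", result.missing_keys, "unexpected:", result.unexpected_keys)

trained.eval()
restored.eval()
x = torch.randn(4, 8)
with torch.no_grad():
    same = torch.equal(trained(x), restored(x))
print("identical outputs:", same)
```

If the real model reports no missing or unexpected keys and still differs between runs, the randomness is coming from somewhere other than the checkpoint.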

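```python
import torch

selection_summ = SentenceSelectionSumm(emb_dim, vocab_size, conv_hidden, lstm_hidden,
                                       lstm_layer, True,  # bidirectional
                                       embedding_weights).to(device)

PATH = "/group-volume/orc_srib/abhi3.singh/wikihow/model/extractor/ckpt-30000-4"
selection_summ.load_state_dict(torch.load(PATH, map_location=device))
selection_summ.eval()  # disable dropout layers for inference

with torch.no_grad():  # inference only: skip autograd bookkeeping
    for batch in val_loader:
        b = prepro_fn(50, 10, batch)
        b_c = batch_converter(b)
        batchify_out = batchify(b_c)
        # Call the module itself rather than .forward() so registered hooks run
        out = selection_summ(batchify_out[0][0], batchify_out[0][1], batchify_out[1])
        print(out.size())
        print(batchify_out[1][0][1])             # targets of the first example
        print(torch.round(out.squeeze())[0])     # rounded predictions for it
        break  # only look at the first batch
```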
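The loop above only inspects the first batch of `val_loader`, whose construction is not shown. Assuming it was built with `shuffle=True`, that first batch changes on every run, so the outputs would differ even for a perfectly deterministic model. A minimal sketch of separating the two causes, using a hypothetical toy dataset and model: check whether the first batch is stable, then run one fixed batch through the model twice.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def first_batch(loader: DataLoader) -> torch.Tensor:
    # Same pattern as the loop above: take the first batch, then stop.
    for (batch,) in loader:
        return batch
    raise ValueError("empty loader")


def repeatable(model: nn.Module, batch: torch.Tensor) -> bool:
    """Run the same batch through the model twice in eval mode and compare."""
    model.eval()
    with torch.no_grad():
        return torch.equal(model(batch), model(batch))


dataset = TensorDataset(torch.randn(100, 8))  # hypothetical toy data
ordered = DataLoader(dataset, batch_size=10, shuffle=False)
shuffled = DataLoader(dataset, batch_size=10, shuffle=True)

model = nn.Sequential(nn.Linear(8, 10), nn.Dropout(0.2), nn.Linear(10, 1), nn.Sigmoid())

print("ordered loader, same first batch:", torch.equal(first_batch(ordered), first_batch(ordered)))
print("shuffled loader, same first batch:", torch.equal(first_batch(shuffled), first_batch(shuffled)))
print("model repeatable on one batch:", repeatable(model, first_batch(ordered)))
```

If the batch is stable but `repeatable` returns `False` for the real model, the randomness is inside the model itself.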

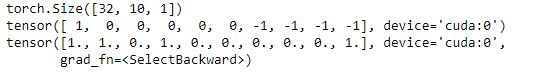

Output on 1st run

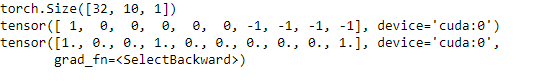

Output on 2nd run

My model class:

```python
from os.path import join

import torch
import torch.nn as nn

# ConvSentEncoder, LSTMEncoder and binary_cross_loss are defined elsewhere (not shown).


class SentenceSelectionSumm(nn.Module):
    def __init__(self, emb_dim, vocab_size, conv_hidden, lstm_hidden, lstm_layer,
                 bidirectional, embedding, dropout=0.2):
        super().__init__()
        self._sent_enc = ConvSentEncoder(vocab_size, emb_dim, conv_hidden, dropout, embedding)
        self.bi_lstm_enc = LSTMEncoder(3 * conv_hidden, lstm_hidden, lstm_layer,
                                       dropout=dropout, bidirectional=bidirectional)
        self.lin_2 = nn.Linear(10, 1)
        out_dim = self.bi_lstm_enc.hidden_size * (2 if self.bi_lstm_enc.bidirectional else 1)
        self.lin_1 = nn.Linear(out_dim, 10)

    def forward(self, article_sents, sent_nums, target):
        # `target` is unused here; the loss is computed outside the model.
        enc_out = self._encode(article_sents, sent_nums)
        return self.linear_out(enc_out)

    def _encode(self, article_sents, sent_nums):
        if sent_nums is None:
            # Single article: encode its sentences and add a batch dimension.
            enc_sent = self._sent_enc(article_sents[0]).unsqueeze(0)
        else:
            max_n = max(sent_nums)
            enc_sents = [self._sent_enc(art_sent) for art_sent in article_sents]

            def zero(n, device):
                # n rows of zero padding matching the LSTM input width
                return torch.zeros(n, self.bi_lstm_enc.input_size, device=device)

            # Pad every article to max_n sentences and stack into one batch tensor.
            enc_sent = torch.stack(
                [torch.cat([s, zero(max_n - n, s.device)], dim=0) if n != max_n else s
                 for s, n in zip(enc_sents, sent_nums)],
                dim=0,
            )
        lstm_out = self.bi_lstm_enc(enc_sent, sent_nums)
        return lstm_out

    def linear_out(self, enc_out):
        lin_1_out = self.lin_1(enc_out)
        return torch.sigmoid(self.lin_2(lin_1_out))  # per-sentence probability


def loss_fun(output, target, pad_idx=-1.0):
    assert output.size()[:-1] == target.size()
    target = target.view(output.size()).to(torch.float)
    mask = target != pad_idx  # ignore padded positions
    masked_target = target.masked_select(mask)
    masked_out = output.masked_select(mask)
    return binary_cross_loss(masked_out, masked_target)


def save_model(model, path, step, epoch):
    name = 'ckpt-{}-{}'.format(step, epoch)
    torch.save(model.state_dict(), join(path, name))
```
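`ConvSentEncoder` and `LSTMEncoder` are not shown, so the following is an assumption about them worth checking. `model.eval()` only sets each module's `training` flag to `False`; `nn.Dropout` and the `dropout` argument of `nn.LSTM` respect that flag, but `torch.nn.functional.dropout` defaults to `training=True`. An encoder that calls `F.dropout(x, p)` without passing `training=self.training` keeps dropping units in eval mode and gives different outputs on every run. A minimal sketch with two hypothetical encoders:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LeakyDropoutEncoder(nn.Module):
    """Hypothetical encoder: calls F.dropout without the training flag."""

    def __init__(self, p: float = 0.2):
        super().__init__()
        self.p = p

    def forward(self, x):
        return F.dropout(x, self.p)  # training defaults to True: still active after .eval()


class FixedDropoutEncoder(nn.Module):
    """Same encoder, but dropout follows the module's train/eval state."""

    def __init__(self, p: float = 0.2):
        super().__init__()
        self.p = p

    def forward(self, x):
        return F.dropout(x, self.p, training=self.training)


x = torch.ones(1000)
leaky = LeakyDropoutEncoder().eval()
fixed = FixedDropoutEncoder().eval()

with torch.no_grad():
    print("leaky encoder changes input in eval:", not torch.equal(leaky(x), x))  # True
    print("fixed encoder changes input in eval:", not torch.equal(fixed(x), x))  # False
```

In the leaky version every element comes out as either 0 or 1.25 (the 1/(1-p) rescaling), so the output never matches the input even in eval mode.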