I took a predefined resnet101 and trained it. I am now trying to implement Grad-CAM, for which I have dissected the model so that I can insert a hook after the last convolutional layer.

```
import torch.nn as nn


class my_resnet101(nn.Module):
    def __init__(self):
        super(my_resnet101, self).__init__()
        # `model` is the trained resnet101 defined earlier
        self.resnet = model
        # dissect the network to access its last convolutional layer
        self.part1 = nn.Sequential(*list(model.children())[:-2])
        # remaining layers: the average pool and the final fc layer
        self.part2 = nn.Sequential(*list(model.children())[-2:])
        # placeholder for the gradients captured by the hook
        self.gradients = None

    # hook for the gradients of the activations
    def activations_hook(self, grad):
        self.gradients = grad

    def forward(self, x):
        x = self.part1(x)
        # register the hook
        h = x.register_hook(self.activations_hook)
        # apply the remaining pooling and fc layer
        x = self.part2(x)
        return x

    # method for the gradient extraction
    def get_activations_gradient(self):
        return self.gradients

    # method for the activation extraction
    def get_activations(self, x):
        return self.part1(x)
```

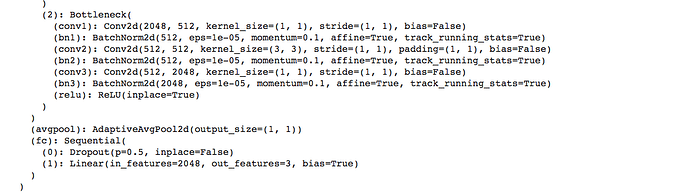

Now when I run `pred = resnet(img)`, I get the error `mat1 and mat2 shapes cannot be multiplied (2048x1 and 2048x3)`. The dimensions of `img` are 1 x 3 x 48 x 48. The screenshot above shows the part of the model where I am making this customization. The model was trained on 3-channel images, so I would not expect this discrepancy. I am not sure what I am missing here.
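One possible explanation, offered as a sketch rather than a confirmed diagnosis: if the model is torchvision's ResNet, its `forward` calls `torch.flatten(x, 1)` between `avgpool` and `fc`. That call is functional, so it is not one of the modules returned by `model.children()`, and `part2` would then pass a `(1, 2048, 1, 1)` tensor straight into the linear layer. The snippet below reproduces this with `TinyNet`, a hypothetical stand-in that only mimics the tail of the network (average pool, then a 2048 to 3 linear layer). `broken_tail` and `fixed_tail` are illustrative names, not part of the original code.

```python
import torch
import torch.nn as nn


# Hypothetical stand-in for the trained resnet101: a tiny conv body with the
# same tail (global average pool followed by a 2048 -> 3 linear layer).
class TinyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 2048, kernel_size=3, stride=16)
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(2048, 3)

    def forward(self, x):
        x = self.avgpool(self.conv(x))
        # Functional flatten: it is not a child module, so slicing
        # model.children() silently drops this step.
        x = torch.flatten(x, 1)
        return self.fc(x)


model = TinyNet()
children = list(model.children())
part1 = nn.Sequential(*children[:-2])

# Same split as in the question: avgpool followed directly by fc.
broken_tail = nn.Sequential(*children[-2:])
# Same split with the missing flatten restored as a module.
fixed_tail = nn.Sequential(children[-2], nn.Flatten(1), children[-1])

img = torch.randn(1, 3, 48, 48)
feats = part1(img)

try:
    broken_tail(feats)
    error_message = None
except RuntimeError as err:
    # The linear layer receives (1, 2048, 1, 1) instead of (1, 2048).
    error_message = str(err)

pred = fixed_tail(feats)
print(error_message)
print(pred.shape)
```

If that is what is happening here, building `part2` as `nn.Sequential(avgpool, nn.Flatten(1), fc)`, or calling `torch.flatten(x, 1)` inside `forward` before the final layer, should make the shapes line up.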