I use pytorch-nightly 1.7 and NCCL 2.7.6, but the problem still exists. I cannot run distributed training.

Can you help reproduce this issue? Maybe try to run part of your code on Google Colab and share the link if you face the same problem again.

OK, I will try to reproduce this issue on Colab.

I’m on NCCL 2.7.8 and also seeing this issue. The model I am training is hopelessly complex (a GAN with 3 networks and 10 losses) so it’s going to be quite a bit of work to pare it down to the point where I can share a reproduction snippet here. For now just adding some additional signal to this topic.

Edit: Also, in my case the GPU hangs at 100% utilization. It is very reproducible for me, so if there is some debugging I can do, please LMK.

This could indicate a dead process, with the other processes waiting for it and thus spinning their wait kernels at full utilization.

You could check via ps auxf whether a process was terminated. You might also get more information by killing the current parent process and checking the stack trace.
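If the hang is reachable from Python, one way to get a stack trace without killing anything is the standard-library faulthandler module. The sketch below is only an illustration: the helper name enable_hang_diagnostics and its parameters are made up for this example, and it assumes a Unix system where SIGUSR1 is available. It prints Python frames only, so a hang deep inside native code will just show the Python call that entered it.

```python
import faulthandler
import signal
import sys


def enable_hang_diagnostics(log_file=None, watchdog_seconds=None):
    """Make this process dump Python stack traces on demand (hypothetical helper).

    Call it once near the top of the training script, in every rank.
    """
    log_file = log_file if log_file is not None else sys.stderr
    # Dump the stacks of all threads when the process receives SIGUSR1,
    # e.g. after running `kill -USR1 <pid>` from another terminal.
    faulthandler.register(signal.SIGUSR1, file=log_file, all_threads=True)
    if watchdog_seconds is not None:
        # Optionally also dump the stacks every `watchdog_seconds` seconds.
        faulthandler.dump_traceback_later(
            watchdog_seconds, repeat=True, file=log_file
        )
```

Once a run looks frozen, list the worker PIDs with ps auxf and send kill -USR1 <pid> to each one. A rank that no longer shows up in the ps output is likely the process that died.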

Is there any update on this freeze without an error message?

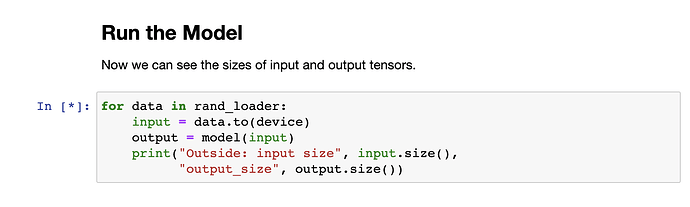

I tried to follow the example in the "Optional: Data Parallelism" tutorial (PyTorch Tutorials 2.2.0+cu121 documentation), but I always get stuck in the “forward” function. There are no errors, but the whole process seems to stop.

My system spec is Ubuntu 18.04, CUDA 10.1, and PyTorch 1.7.0, and I have 2 GPUs.

To the best of my knowledge there is no general root cause for a freeze, and there wasn’t a follow-up on my last post, so I am unsure whether the issue was isolated or not.

Generally, I would recommend trying to scale down the issue, e.g. by using a single worker in the DataLoader, running the script in a terminal, etc.

I found the answer!

Modify /etc/default/grub:

#GRUB_CMDLINE_LINUX="" <-- original line, now commented out

GRUB_CMDLINE_LINUX="iommu=soft" <-- new line

ref : https://github.com/pytorch/pytorch/issues/1637#issuecomment-338268158
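Note that an edit to /etc/default/grub normally only takes effect after the GRUB configuration has been regenerated (sudo update-grub on Ubuntu) and the machine has been rebooted. A small sketch for checking afterwards that the option really reached the running kernel is below; the helper name kernel_cmdline_has is made up for this example, and it assumes a Linux system that exposes /proc/cmdline.

```python
from pathlib import Path


def kernel_cmdline_has(option, cmdline_path="/proc/cmdline"):
    """Return True if `option` (e.g. "iommu=soft") is on the kernel command line."""
    # /proc/cmdline holds the space-separated parameters the kernel booted with.
    params = Path(cmdline_path).read_text().split()
    return option in params
```

Calling kernel_cmdline_has("iommu=soft") after the reboot should return True if the change was picked up.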

This resolves the problem for me:

export NCCL_P2P_DISABLE=1
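If exporting the variable in the shell is inconvenient (for example in a notebook), the same setting can be applied from Python. This is a minimal sketch with a made-up helper name, disable_nccl_p2p. It assumes the call happens before NCCL is initialized, i.e. before the process group is created, because NCCL reads its environment variables during initialization. Disabling the P2P transport makes NCCL fall back to other transports, which may be slower.

```python
import os


def disable_nccl_p2p(env=None):
    """Set NCCL_P2P_DISABLE=1 in `env` (defaults to this process's environment)."""
    env = os.environ if env is None else env
    # Must be in place before the first NCCL communicator is created.
    env["NCCL_P2P_DISABLE"] = "1"
    return env
```

Calling disable_nccl_p2p() at the very top of the training script, before any distributed setup, mirrors the export line above.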

Thanks, really helpful.

When I was stuck with GPU=A6000 and PyTorch=1.10 with apex=0.1, this answer helped me get rid of the hang caused by apex.parallel.DistributedDataParallel.

Many thanks. This solves my problem on an RTX A6000 as well.

This resolved my error too. pytorch=1.12.1

See if this can help you because disabling it might not help in reducing the latency.

I am also facing a similar issue, but mine is quite concerning because neither of the above seems to fix it. I am using pytorch 2.0 with CUDA 11.7 and nccl 2.14.3. The ACS is disabled and the nccl tests all work perfectly fine. Even the cuda-samples tests like simpleP2P and simpleMultiGPU work fine. But when I launch my program it hangs after 2 or 3 hours of training with no message whatsoever. How can I even debug that? The code works perfectly fine in a non-distributed setting.

Here is my topology:

| | GPU0 | GPU1 | CPU Affinity | NUMA Affinity | GPU NUMA ID |
|---|---|---|---|---|---|
| GPU0 | X | NV4 | 0-27 | N/A | N/A |
| GPU1 | NV4 | X | 0-27 | N/A | N/A |

And here is the output of my command when run with NCCL_DEBUG=INFO:

user:36979:36979 [0] NCCL INFO Bootstrap : Using eno1:192.168.151.5<0>

user:36979:36979 [0] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

user:36979:36979 [0] NCCL INFO cudaDriverVersion 12020

NCCL version 2.14.3+cuda11.7

user:36979:37087 [0] NCCL INFO NET/IB : No device found.

user:36979:37087 [0] NCCL INFO NET/Socket : Using [0]eno1:192.168.151.5<0> [1]virbr0:192.168.122.1<0>

user:36979:37087 [0] NCCL INFO Using network Socket

user:36980:36980 [1] NCCL INFO cudaDriverVersion 12020

user:36980:36980 [1] NCCL INFO Bootstrap : Using eno1:192.168.151.5<0>

user:36980:36980 [1] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

user:36980:37091 [1] NCCL INFO NET/IB : No device found.

user:36980:37091 [1] NCCL INFO NET/Socket : Using [0]eno1:192.168.151.5<0> [1]virbr0:192.168.122.1<0>

user:36980:37091 [1] NCCL INFO Using network Socket

user:36979:37087 [0] NCCL INFO Setting affinity for GPU 0 to 0fffffff

user:36980:37091 [1] NCCL INFO Setting affinity for GPU 1 to 0fffffff

user:36979:37087 [0] NCCL INFO Channel 00/04 : 0 1

user:36979:37087 [0] NCCL INFO Channel 01/04 : 0 1

user:36979:37087 [0] NCCL INFO Channel 02/04 : 0 1

user:36980:37091 [1] NCCL INFO Trees [0] -1/-1/-1->1->0 [1] 0/-1/-1->1->-1 [2] -1/-1/-1->1->0 [3] 0/-1/-1->1->-1

user:36979:37087 [0] NCCL INFO Channel 03/04 : 0 1

user:36979:37087 [0] NCCL INFO Trees [0] 1/-1/-1->0->-1 [1] -1/-1/-1->0->1 [2] 1/-1/-1->0->-1 [3] -1/-1/-1->0->1

user:36979:37087 [0] NCCL INFO Channel 00/0 : 0[a1000] -> 1[c1000] via P2P/IPC

user:36979:37087 [0] NCCL INFO Channel 01/0 : 0[a1000] -> 1[c1000] via P2P/IPC

user:36980:37091 [1] NCCL INFO Channel 00/0 : 1[c1000] -> 0[a1000] via P2P/IPC

user:36979:37087 [0] NCCL INFO Channel 02/0 : 0[a1000] -> 1[c1000] via P2P/IPC

user:36980:37091 [1] NCCL INFO Channel 01/0 : 1[c1000] -> 0[a1000] via P2P/IPC

user:36979:37087 [0] NCCL INFO Channel 03/0 : 0[a1000] -> 1[c1000] via P2P/IPC

user:36980:37091 [1] NCCL INFO Channel 02/0 : 1[c1000] -> 0[a1000] via P2P/IPC

user:36980:37091 [1] NCCL INFO Channel 03/0 : 1[c1000] -> 0[a1000] via P2P/IPC

user:36979:37087 [0] NCCL INFO Connected all rings

user:36979:37087 [0] NCCL INFO Connected all trees

user:36979:37087 [0] NCCL INFO threadThresholds 8/8/64 | 16/8/64 | 512 | 512

user:36979:37087 [0] NCCL INFO 4 coll channels, 4 p2p channels, 4 p2p channels per peer

user:36980:37091 [1] NCCL INFO Connected all rings

user:36980:37091 [1] NCCL INFO Connected all trees

user:36980:37091 [1] NCCL INFO threadThresholds 8/8/64 | 16/8/64 | 512 | 512

user:36980:37091 [1] NCCL INFO 4 coll channels, 4 p2p channels, 4 p2p channels per peer

user:36979:37087 [0] NCCL INFO comm 0x4aa53cf0 rank 0 nranks 2 cudaDev 0 busId a1000 - Init COMPLETE

user:36980:37091 [1] NCCL INFO comm 0x6ade3ee0 rank 1 nranks 2 cudaDev 1 busId c1000 - Init COMPLETE

Any help is appreciated!
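For longer NCCL_DEBUG=INFO logs, it can help to reduce the output to the two facts that matter for a hang: which transport the channels use, and whether every rank reached "Init COMPLETE". The sketch below is a rough illustration, not an official parser. The function name and regular expressions are assumptions based only on the line shapes in the log above, and other NCCL versions may format these lines differently.

```python
import re

# Matches lines such as:
#   ... NCCL INFO Channel 00/0 : 0[a1000] -> 1[c1000] via P2P/IPC
# (also accepts the arrow character that some forum software substitutes).
CHANNEL_RE = re.compile(
    r"NCCL INFO Channel \d+/\d+ : (\d+)\[\w+\] (?:->|→) (\d+)\[\w+\] via (\S+)"
)
# Matches lines such as:
#   ... NCCL INFO comm 0x... rank 0 nranks 2 cudaDev 0 busId a1000 - Init COMPLETE
INIT_RE = re.compile(r"rank (\d+) nranks (\d+) .*Init COMPLETE")


def summarize_nccl_log(text):
    """Summarize transports and initialized ranks found in an NCCL INFO log."""
    transports = set()
    ready_ranks = set()
    world_size = None
    for line in text.splitlines():
        match = CHANNEL_RE.search(line)
        if match:
            transports.add(match.group(3))
            continue
        match = INIT_RE.search(line)
        if match:
            ready_ranks.add(int(match.group(1)))
            world_size = int(match.group(2))
    # Ranks that never reported "Init COMPLETE"; None if no init line was seen.
    missing = None
    if world_size is not None:
        missing = set(range(world_size)) - ready_ranks
    return {
        "transports": transports,
        "ready_ranks": ready_ranks,
        "missing_ranks": missing,
    }
```

Applied to the log above, this reports P2P/IPC as the only transport and no missing ranks. That is consistent with initialization succeeding, so the hang would be happening later, during training.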

You could attach gdb to the hanging processes and check their stack traces to narrow down the issue further, assuming you’ve already used the logging env variables such as TORCH_DISTRIBUTED_DEBUG and TORCH_CPP_LOG_LEVEL and didn’t see anything concerning.
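In case it is useful, here is a small sketch of turning those logging variables on for a launched job. The helper name distributed_debug_env is made up for this example, and the values (DETAIL and INFO) are the verbose settings I would try first rather than anything prescribed in this thread.

```python
import os


def distributed_debug_env(base=None):
    """Return a copy of the environment with verbose distributed logging enabled."""
    env = dict(os.environ if base is None else base)
    env.update(
        {
            "NCCL_DEBUG": "INFO",                 # NCCL's own log output
            "TORCH_DISTRIBUTED_DEBUG": "DETAIL",  # extra torch.distributed checks
            "TORCH_CPP_LOG_LEVEL": "INFO",        # C++ side logging
        }
    )
    return env


# Example launch (train.py is a placeholder script name):
#   import subprocess
#   subprocess.run(
#       ["torchrun", "--nproc_per_node=2", "train.py"],
#       env=distributed_debug_env(),
#   )
```

Because the function returns a copy, the extra logging applies only to the launched job and leaves the current shell or notebook environment untouched.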