Hi, I created a model with two embedding layers and an LSTM module, as shown below.

My input has the shape (batch_size, window_size, feature_len), so it is a 3-dimensional tensor.

class model (nn.Module):

def __init__(self, some parameters):

self.embed1 = nn.Embedding(len1, 10)

self.embed2 = nn.Embedding(len2, 10)

def forward(self, vec_seq):

# vec_seq.size() --> (batch_size, window_size, feature_len)

# vec_seq.type() --> torch.cuda.FloatTensor

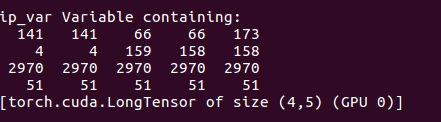

var1 = autograd.Variable(vec_seq[:,:,0].type(torch.cuda.LongTensor))

var2 = autograd.Variable(vec_seq[:,:,1].type(torch.cuda.LongTensor))

var1_emb = self.embed1(var1)

var2_emb = self.embed2(var2)

var_embed = torch.cat((var1_emb, var2_emb,

autograd.Variable(vec_seq[:,:,2].contiguous().view(batch_size, window_size, -1)),

autograd.Variable(vec_seq[:,:,3].contiguous().view(batch_size, window_size, -1)), 2)

# following some LSTM operations.

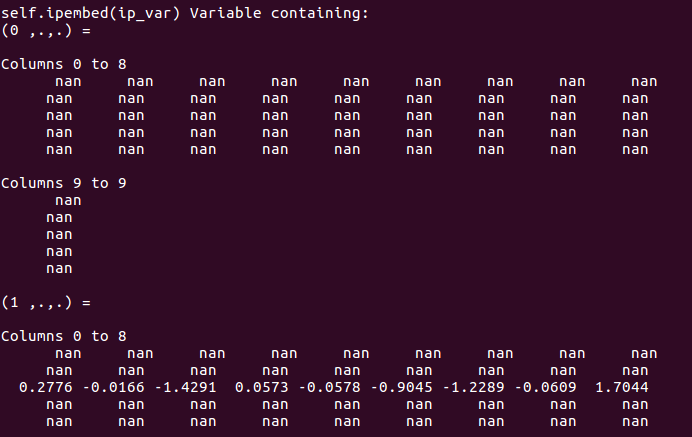

However, when I debug my program, I found all the values of var1_embed and var2_embed are nan, which is quite weird. In some cases, they are not all nan, instead part of the embedding is nan and the remaining is a float. like the image below.

The corresponding embedding is like below.
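To narrow down where the nan values come from, one option is to check the lookup itself. The sketch below is only a diagnostic under assumptions, not a confirmed diagnosis: `find_embedding_problems` is a hypothetical helper, and the example runs on the CPU with made-up sizes. It looks for two common causes: nan already present in the embedding weights (for example after a diverged update), and indices outside the range `[0, num_embeddings)`.

```python
import torch
from torch import nn


def find_embedding_problems(embed, indices):
    """Return a list of readable problems found for one embedding lookup."""
    problems = []
    # A nan anywhere in a weight row makes every lookup of that row nan.
    nan_rows = torch.isnan(embed.weight).any(dim=1).nonzero().flatten().tolist()
    if nan_rows:
        problems.append("weight rows containing nan: %s" % nan_rows)
    # Valid indices lie in [0, num_embeddings).
    lo, hi = int(indices.min()), int(indices.max())
    if lo < 0 or hi >= embed.num_embeddings:
        problems.append(
            "index range [%d, %d] outside [0, %d)" % (lo, hi, embed.num_embeddings)
        )
    return problems


# Hypothetical example: a freshly initialised table, then one corrupted row.
embed = nn.Embedding(5, 10)
idx = torch.tensor([[0, 1], [2, 4]])
print(find_embedding_problems(embed, idx))

with torch.no_grad():
    embed.weight[2, 3] = float("nan")
print(find_embedding_problems(embed, idx))
```

In the model above, the same check could be called on `self.embed1` with `var1` and on `self.embed2` with `var2` just before the lookups. If the weights are clean at the first iteration and only become nan later, the cause is more likely in the training step than in the lookup.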

I load the data with a DataLoader, using the following statement:

    train_dataloader = DataLoader(train_data, batch_size=opt.batch_size, shuffle=True, num_workers=opt.num_workers)

Here batch_size=4 and num_workers=4.
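The data itself can also be checked before it reaches the model. The following is a minimal sketch under assumptions: `first_bad_batch` is a hypothetical helper, the stand-in dataset is made up, and columns 0 and 1 are assumed to hold the embedding indices as floats. It uses `num_workers=0` so that loading happens in the main process, and it reports the first batch that contains nan or a value that is not a valid index (negative or non-integer).

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def first_bad_batch(loader, index_columns=(0, 1)):
    """Return (batch_number, reason) for the first suspicious batch, or None."""
    for n, batch in enumerate(loader):
        # TensorDataset yields one-element lists; plain tensors are used as-is.
        vec_seq = batch[0] if isinstance(batch, (list, tuple)) else batch
        if torch.isnan(vec_seq).any():
            return n, "nan in input"
        for col in index_columns:
            values = vec_seq[:, :, col]
            # Indices stored as floats should be whole, non-negative numbers.
            if (values < 0).any() or (values != values.round()).any():
                return n, "column %d is not a valid index" % col
    return None


# Hypothetical stand-in data with shape (samples, window_size, feature_len).
data = torch.zeros(8, 3, 4)
data[5, 1, 2] = float("nan")
loader = DataLoader(TensorDataset(data), batch_size=4, shuffle=False, num_workers=0)
print(first_bad_batch(loader))
```

If this check passes with `num_workers=0` but fails with `num_workers=4`, that would point at the loading code rather than at the embeddings.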

What could be causing these nan values?

Thanks.