Hello All,

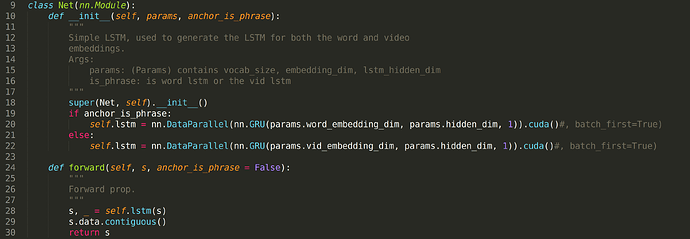

I’m trying to use `DataParallel` as shown in the picture above,

but when training I get the following error (which clearly happens at line 28 of that picture):

        s, _ = self.lstm(s)
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/modules/module.py", line 493, in __call__
        result = self.forward(*input, **kwargs)
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/parallel/data_parallel.py", line 152, in forward
        outputs = self.parallel_apply(replicas, inputs, kwargs)
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/parallel/data_parallel.py", line 162, in parallel_apply
        return parallel_apply(replicas, inputs, kwargs, self.device_ids[:len(replicas)])
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/parallel/parallel_apply.py", line 83, in parallel_apply
        raise output
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/parallel/parallel_apply.py", line 59, in _worker
        output = module(*input, **kwargs)
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/modules/module.py", line 493, in __call__
        result = self.forward(*input, **kwargs)
      File "/home/pavelameen/miniconda3/envs/TD2/lib/python3.6/site-packages/torch/nn/modules/rnn.py", line 193, in forward
        max_batch_size = input.size(0) if self.batch_first else input.size(1)
    AttributeError: 'tuple' object has no attribute 'size'

Before the call, the type of `s` (which is `input` in the error message) is `<class 'torch.nn.utils.rnn.PackedSequence'>`, yet the LSTM reports receiving a plain `tuple`. Any idea why this happens?
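
One possible explanation, which I have not confirmed against this exact PyTorch version: `PackedSequence` is a namedtuple subclass, so a scatter step that recurses into tuples could split it field by field and hand each replica a plain `tuple`. The sketch below imitates that behaviour in pure Python. `FakePacked` and `naive_scatter` are hypothetical stand-ins written for illustration, not PyTorch APIs.

```python
from collections import namedtuple

# Hypothetical stand-in for torch.nn.utils.rnn.PackedSequence, which is
# a namedtuple subclass whose fields include `data` and `batch_sizes`.
FakePacked = namedtuple("FakePacked", ["data", "batch_sizes"])


def naive_scatter(obj, num_replicas):
    """Illustrative only: chunk lists and recurse into tuples."""
    if isinstance(obj, tuple) and len(obj) > 0:
        # zip(*...) regroups the per-field chunks into plain tuples,
        # so the namedtuple type is lost at this point.
        return list(zip(*(naive_scatter(o, num_replicas) for o in obj)))
    if isinstance(obj, list):
        step = (len(obj) + num_replicas - 1) // num_replicas
        return [obj[i:i + step] for i in range(0, len(obj), step)]
    # Anything else is simply repeated for every replica.
    return [obj for _ in range(num_replicas)]


packed = FakePacked(data=[1, 2, 3, 4], batch_sizes=[2, 2])
per_replica = naive_scatter(packed, 2)

print(isinstance(packed, tuple))        # the packed object is itself a tuple
print(type(per_replica[0]))             # what one replica would receive
print(hasattr(per_replica[0], "size"))  # a plain tuple has no .size
```

If that is what happens here, a commonly suggested workaround is to pass the padded tensor and the lengths through `DataParallel` and call `pack_padded_sequence` inside the wrapped module's `forward`, so that only plain tensors get scattered.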